#43 Bayes Optimal Classifier with Example & Gibbs Algorithm |ML|

ML # 43 - Bayes Optimal Classifier, Gibbs Classifier

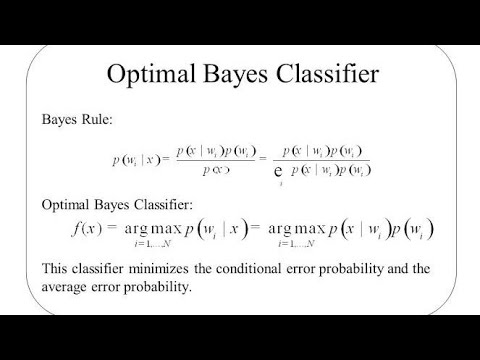

3.4 Bayes Optimal Classifier

Bayes Classifier - Machine Learning

Bayes Optimal Classifier

Bayes Optimal Classifier-Machine Learning-Unit-2-Evaluating Hypotheses & Bayesian Learning-15A05...

Bayes optimal classifier-Machine Learning-20A05602T-Unit-3-Bayesian Concept Learning-JNTUA-CSE

29 Bayes Optimal Classifier

Bayes optimal classifier

Bayes Optimal Classifier & Gibbs Algorithm

Bayes Optimal Classifier

Lec-8: Naive Bayes Classification Full Explanation with examples | Supervised Learning

Naive Bayes Classifier - Machine Learning

BAYES OPTIMAL CLASSIFIER

THE BAYES OPTIMAL CLASSIFIER BY JANHVI GUPTA 2000290130084

Probability and Bayes Learning - # 3 - Bayes Optimal Classifier

#44 Naive Bayes Classifier With Example |ML|

Bayes Optimal Classifier In Hindi|| Machine Learning Lectures

ISE Department - Bayes Optimal Classifier and Naive Bayes Algorithm

Solved Example Use Naive Bayes Classifier to classify the color legs height smelly by Mahesh Huddar

Naive Bayes Classifier ll Data Mining And Warehousing Explained with Solved Example in Hindi

Optimal Bayesian Classification Tutorial

Yair Weiss - A Bayes Optimal View of Adversarial Examples

Lecture 2.3 | Bayesian Learning | Concept Learning | Bayes Optimal Classifier | Bayes theorem #mlt
