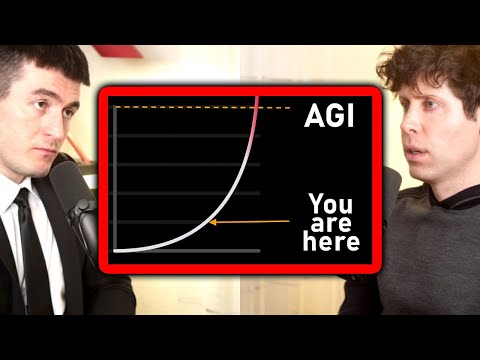

AGI is COMING and OpenAI Knows How to Achieve it!

Sam Altman predicts AGI will arrive in 2025! He then explains that OpenAI knows how to get there and there is a clear path towards ASI (Artificial Superintelligence).

0:00 - Intro

0:23 - Superintelligence in a few thousand days

1:15 - The path to ASI is clear

2:33 - New scaling paradigm with o1

3:00 - Is scaling slowing down?

3:51 - OpenAI knows how to achieve AGI

5:01 - OpenAI’s Levels of AGI progress

8:00 - Outro

Today’s Sources:

Sam Altman FULL Interview with Garry Tan, Y Combinator

Sam Altman "The Intelligence Age" Blog Post 🤯

The Information article on GPT scaling slowing down

Referenced tweets (or X posts, whatever)

#ainews #chatgpt #openai #samaltman

OpenAI CEO: When will AGI arrive? | Sam Altman and Lex Fridman

AGI IS COMING… but #agi #ai #openai #chatgpt

Ex-OpenAI Employee: 'The World is Not Ready For AGI'

How Sam Altman Defines AGI

OpenAI Unveils o3! AGI ACHIEVED!

OpenAI Just Revealed They ACHIEVED AGI (OpenAI o3 Explained)

OpenAI Finally Admits It 'We've Achieved AGI'

OpenAI's New AI BROKE FREE and No One Can STOP IT!

Artificial General Intelligence (AGI) will continue to disappoint. #ai #agi #openai #chatgpt #gemini

Why next-token prediction is enough for AGI - Ilya Sutskever (OpenAI Chief Scientist)

AGI ACHIEVED | OpenAI Drops the BOMBSHELL that ARC AGI is beat by the o3 model

AGI is COMING and OpenAI Knows How to Achieve it!

OpenAI Just Changed The AGI Definition

OpenAI Unveils SHIPMAS & AGI Coming in Months (Not What You Expect)

This Google AI Model Just SHOCKED OpenAI—Is It the End of ChatGPT?

OpenAI's 5 level path to AGI

Did OpenAI achieve AGI (then Fire Sam Altman)?

Sam Altman: We Are Close to AGI at OpenAI #ai #agi #openai #microsoft

Chinese Researchers Just Cracked OpenAI's AGI Secrets

O3 Just BROKE the AI Ceiling 🤯 (AGI is HERE - This Changes Everything)

August AI Update Human Brained Robots, AGI, and OpenAI News

Chinese Researchers Just CRACKED OpenAI's AGI Secrets!

The Difference Between AI and AGI 🤖

The OpenAI 'business plan.' #ai #agi #elonmusk #samaltman #openai #microsoft #business #te...
