Converting Spark RDD to DataFrame in Python


Disclaimer/Disclosure: Some of the content was synthetically produced using various Generative AI (artificial intelligence) tools; so, there may be inaccuracies or misleading information present in the video. Please consider this before relying on the content to make any decisions or take any actions etc. If you still have any concerns, please feel free to write them in a comment. Thank you.

---

Summary: Explore the techniques for converting Spark RDDs to DataFrames in Python, understand the nuances, and determine when to use RDDs versus DataFrames in Apache Spark.

---

Converting Spark RDD to DataFrame in Python: A Comprehensive Guide

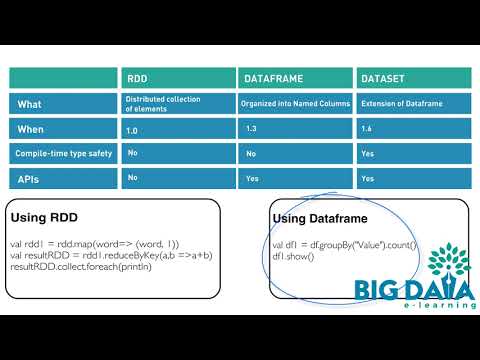

Apache Spark is a widely used engine for large-scale data processing. It offers several abstractions, including RDDs (Resilient Distributed Datasets) and DataFrames, each suited to different use cases. This guide shows how to convert Spark RDDs to DataFrames in Python, how to go one step further to a Pandas DataFrame, and when to use RDDs versus DataFrames.

Converting Spark RDD to DataFrame in Python

A common task in Spark is converting an RDD to a DataFrame. Unlike an RDD, a DataFrame organizes data into named columns with a known schema. That schema lets Spark optimize queries and lets you express work as SQL or column expressions instead of hand-written functions, which generally makes DataFrames faster and easier to use for structured analytics and SQL-style queries.

Here's a step-by-step guide to converting an RDD to a DataFrame in Python:

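Step 1: Initialize SparkSession and SparkContext

First, create a SparkSession. Since Spark 2.0 it is the entry point for DataFrame work, and the SparkContext needed for RDD operations is available through its sparkContext attribute.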


Step 2: Create an RDD

Next, create an RDD, either by distributing a local Python collection with SparkContext.parallelize or by loading data from a file, for example with SparkContext.textFile.


Step 3: Define the Schema

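Define the schema as a StructType built from a list of StructField objects, each giving a column name, a data type, and whether the column may contain nulls. An explicit schema avoids relying on type inference.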


Step 4: Convert RDD to DataFrame

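Convert the RDD to a DataFrame by passing the RDD and the schema to the createDataFrame method of the SparkSession. If you only need to name the columns and are happy with inferred types, RDD.toDF() is a shorter alternative.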


Converting Spark RDD to Pandas DataFrame

Sometimes you need a Pandas DataFrame for local processing in Python. RDDs have no direct conversion method to Pandas, so the usual route is to convert the RDD to a Spark DataFrame first and then convert that Spark DataFrame to a Pandas DataFrame.

Step 1: Convert RDD to Spark DataFrame

Following the steps mentioned earlier, first convert the RDD to a Spark DataFrame.


Step 2: Convert Spark DataFrame to Pandas DataFrame

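Convert the Spark DataFrame to a Pandas DataFrame with the toPandas method. This collects every row to the driver, so it is only suitable when the result fits in the driver's memory; pandas must also be installed on the driver.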


Spark: When to Use RDD vs DataFrame

Choosing between RDDs and DataFrames depends on the requirements of your application. Here are some considerations:

Ease of Use: DataFrames provide a higher-level, declarative API, which makes them easier to use, especially for those familiar with SQL.

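Performance: DataFrames benefit from Spark's Catalyst optimizer, which rewrites and plans queries, and from the Tungsten execution engine, so they generally perform better than RDDs. In PySpark the gap is often larger: RDD transformations run your Python functions in separate Python worker processes, while DataFrame operations built from Spark's built-in functions run inside the JVM.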

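Type Safety: In Scala and Java, RDDs (and typed Datasets) are type-safe, so many errors are caught at compile time. DataFrames are checked only at runtime, when column names and types are resolved against the schema. In Python this distinction largely disappears, because neither API is type-checked at compile time.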

Functional Programming: If your logic depends heavily on arbitrary functions applied through operations such as map, flatMap, filter, and reduce, RDDs may be a more natural fit.

Unstructured Data Processing: For unstructured data, such as free-form text with no natural schema, RDDs can offer more flexibility than the schema-based approach of DataFrames.

In conclusion, let your use case decide: DataFrames are usually the better default for structured data and SQL-style analysis, while RDDs remain useful for low-level, unstructured, or highly custom processing.

We hope this guide has helped you understand the process of converting Spark RDDs to DataFrames and offered clarity on when to choose RDDs or DataFrames in your Spark applications. Happy coding!

