14. Causal Inference, Part 1

MIT 6.S897 Machine Learning for Healthcare, Spring 2019

Instructor: David Sontag

Prof. Sontag discusses causal inference, examples of causal questions, and how these guide treatment decisions. He explains the Rubin-Neyman causal model as a potential outcomes framework.

License: Creative Commons BY-NC-SA

14. Causal Inference, Part 1

Causal Inference - EXPLAINED!

14. Inferencia Causal, Parte 1

14 - Counterfactuals and Mediation

15. Causal Inference, Part 2

Statistical Causal Inference L14 (1/4)

Causal Inference in Deep Learning (Podcast Overview with Brady Neal)

1.6 - Course Information (Introduction to Causal Inference)

Causal Inference, Semiparametric Statistics & Machine Learning in the Age of Data Science

1 - A Brief Introduction to Causal Inference (Course Preview)

Introduction to causal inference

1.1 - Intro and Outline of A Brief Introduction to Causal Inference

Causal Inference with Machine Learning - EXPLAINED!

Causal Inference

Exchangability: Part 1 - Causal Inference

Causality (and the difference to correlation) Part 2/4 #shorts #dataanalysis #datascience #datatab

2023 09 14 Causal Inference Symposium Causality Overview

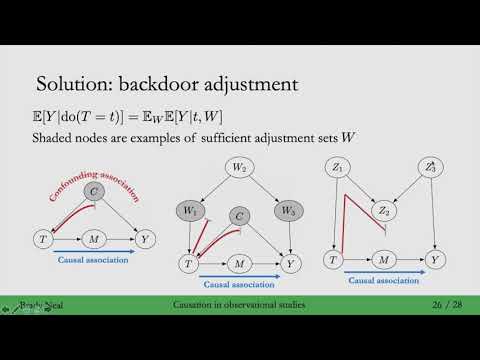

1.5 - Causation in Observational Studies

Regression Discontinuity Design and Instrumental Variables | Causal Inference in Data Science Part 4

4.2 - Intervening, the do-operator, and Identifiability

Causal Inference and AB testing - November 2019

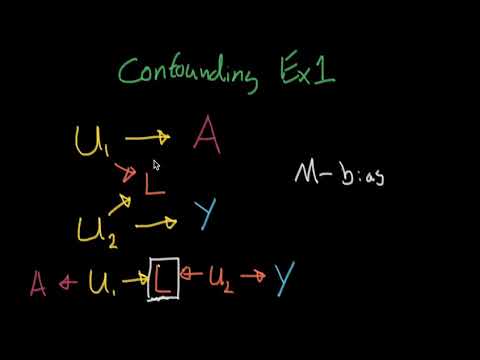

Confounding Example 1 - Causal Inference

Common Issues in Experiments: Causal Inference Bootcamp

2023 09 14 Causal Inference Symposium Responsible and Reliable Data Science

Комментарии

1:18:43

1:18:43

0:15:32

0:15:32

1:18:44

1:18:44

0:34:36

0:34:36

1:02:17

1:02:17

0:13:18

0:13:18

0:27:39

0:27:39

0:08:14

0:08:14

1:15:21

1:15:21

0:42:11

0:42:11

0:05:44

0:05:44

0:02:47

0:02:47

0:16:09

0:16:09

0:29:10

0:29:10

0:05:46

0:05:46

0:00:54

0:00:54

0:19:47

0:19:47

0:11:58

0:11:58

0:21:55

0:21:55

0:06:23

0:06:23

1:06:38

1:06:38

0:04:03

0:04:03

0:03:21

0:03:21

0:20:20

0:20:20