Deep Learning Lecture 5: Regularization, model complexity and data complexity (part 2)

Course taught in 2015 at the University of Oxford by Nando de Freitas with great help from Brendan Shillingford.

Deep Learning Lecture 5: Regularization, model complexity and data complexity (part 2)

Deep Learning | S23 | Lecture 5: Regularization in Deep Learning, Convolutional Neural Network

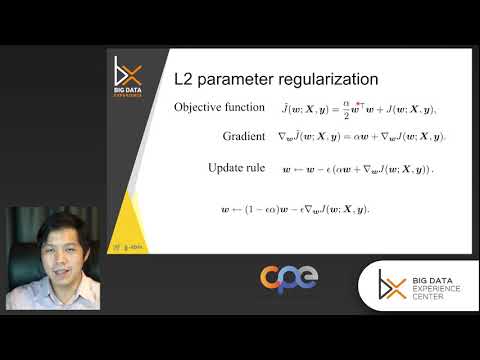

Deep Learning - Lecture 5.1 (Regularization: Parameter Penalties)

Deep Learning: Regularization - Part 5

[MXDL-5-01] Regularization [1/2] - Weights and Biases Regularization

Lecture 5 - Regularization

Regularization (C2W1L04)

Deep Learning - Lecture 5.3 (Regularization: Ensemble Methods)

ResNet Explained - Vanishing Gradients, Skip Connections, and Code Implementation | Computer Vision

Regularization of Deep Learning | Lecture 7 | Deep Learning

Regularization in a Neural Network | Dealing with overfitting

Lecture 5 - Recurrent Neural Networks | Deep Learning on Computational Accelerators

Deep Learning Lecture 4: Regularization, model complexity and data complexity (part 1)

Lecture 5 - Regularization, Early Stopping, Dropout.

Deep Learning - Keras - Multi Layer Perceptron Part 5 Regularization

Regularization in Machine and Deep Learning (Ridge, Lasso, and Layer weight regularizers)

Lecture 9 - Normalization and Regularization

Lecture 7 - Regularization for deep learning

Regularization

Overfitting and Regularization For Deep Learning | Two Minute Papers #56

Deep Learning: Regularization - Part 5 (WS 20/21)

Regularization: Deep Learning and Arificial Neural Networks 1.6

Lecture 8: Training Neural Networks: Normalization, Regularization, etc

2022 ML100: Lecture 5 - Regularization

Комментарии

0:58:58

0:58:58

2:34:54

2:34:54

0:44:13

0:44:13

0:06:55

0:06:55

![[MXDL-5-01] Regularization [1/2]](https://i.ytimg.com/vi/WhN0ppyFLq0/hqdefault.jpg) 0:17:47

0:17:47

0:21:06

0:21:06

0:09:43

0:09:43

0:11:07

0:11:07

0:38:26

0:38:26

0:54:28

0:54:28

0:11:40

0:11:40

1:35:25

1:35:25

0:40:27

0:40:27

1:25:13

1:25:13

0:13:33

0:13:33

0:29:34

0:29:34

1:19:30

1:19:30

1:20:45

1:20:45

0:00:17

0:00:17

0:04:34

0:04:34

0:06:49

0:06:49

0:13:26

0:13:26

1:24:15

1:24:15

2:24:40

2:24:40