
Understanding and Applying XGBoost Classification Trees in R


Updated on 01-21-2023 11:57:17 EST

Ever wondered whether you could climb to the top of a Kaggle competition leaderboard? Look no further! Check out XGBoost, an ensemble boosting method known for its robustness. It is an industry-grade model used heavily across many fields, and knowing this algorithm can help you get your foot in the door in data science!

GitHub link:

Dataset link:

--------------------------------------------------

Additional material to check out:

Ensemble Method Boosting:

Ensemble Method Stacking:

Ensemble Method Bagging:

Methods of Sampling:

--------------------------------------------------

0:00 - In-depth explanation of XGBoost

5:00 - Summary of XGBoost Algorithm

6:03 - Understanding Data & Applying XGBoost

11:20 - Explaining XGBoost Grid Search Parameters

14:25 - TrainControl, Final Model & Evaluation

Understanding and Applying XGBoost Classification Trees in R

Xgboost Classification Indepth Maths Intuition- Machine Learning Algorithms🔥🔥🔥🔥

Visual Guide to Gradient Boosted Trees (xgboost)

Gradient Boosting and XGBoost in Machine Learning: Easy Explanation for Data Science Interviews

XGBoost Part 1 (of 4): Regression

How to train XGBoost models in Python

XGBoost in Python from Start to Finish

XGBoost Part 2 (of 4): Classification

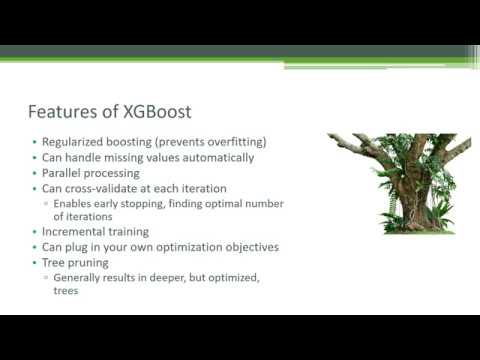

XGBoost Overview| Overview Of XGBoost algorithm in ensemble learning

XGBoost Made Easy | Extreme Gradient Boosting | AWS SageMaker

Gradient Boosting : Data Science's Silver Bullet

XGBoost: How it works, with an example.

Hyperparameter Optimization for Xgboost

XGBoost Skills: Applied Classification with XGBoost Course Preview

Boosting Machine Learning Tutorial | Adaptive Boosting, Gradient Boosting, XGBoost | Edureka

Xgboost Regression In-Depth Intuition Explained- Machine Learning Algorithms 🔥🔥🔥🔥

196 - What is Light GBM and how does it compare against XGBoost?

Maths behind XGBoost|XGBoost algorithm explained with Data Step by Step

Time Series Forecasting with XGBoost - Use python and machine learning to predict energy consumption

LightGBM algorithm explained | Lightgbm vs xgboost | lightGBM regression| LightGBM model

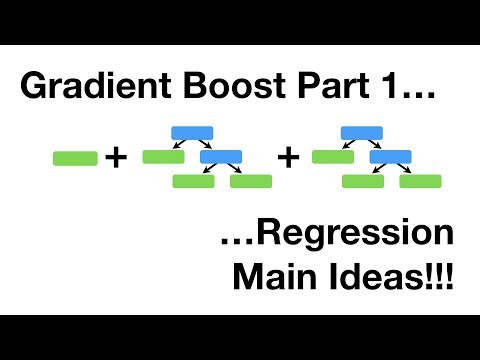

Gradient Boost Part 1 (of 4): Regression Main Ideas

What is XGBoost? - DIY-8 (Watch the next video #9 for XGBoost example)

Andrew Ng's Secret to Mastering Machine Learning - Part 1 #shorts

SHAP values for beginners | What they mean and their applications
