PyTorch Geometric tutorial: Graph Autoencoders & Variational Graph Autoencoders

Показать описание

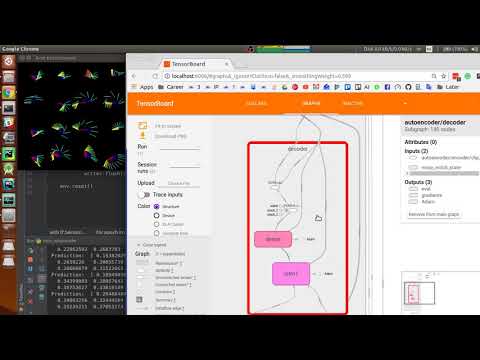

In this tutorial, we present Graph Autoencoders and Variational Graph Autoencoders from the paper:
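For reference, the two models can be summarized as below. This is our own summary of the commonly used formulation, not material taken from the slides. The notation is assumed, because the description defines none:

- $A$ is the adjacency matrix and $X$ the node features.
- $Z$ holds one latent vector $z_i$ per node.
- $\sigma$ is the logistic sigmoid.
- $\mu$ and $s$ are the per-node mean and standard deviation produced by the encoder.

The objective $\mathcal{L}$ is maximized, or equivalently its negative is minimized.

```latex
\begin{aligned}
&\text{GAE:}  && Z = \mathrm{GCN}(X, A), \qquad \hat{A} = \sigma\!\left(Z Z^{\top}\right) \\
&\text{VGAE:} && q(Z \mid X, A) = \prod_{i} \mathcal{N}\!\left(z_i \mid \mu_i, \operatorname{diag}(s_i^{2})\right),
                 \quad \mu = \mathrm{GCN}_{\mu}(X, A), \quad \log s = \mathrm{GCN}_{s}(X, A) \\
&             && z_i = \mu_i + s_i \odot \varepsilon_i, \qquad \varepsilon_i \sim \mathcal{N}(0, I) \\
&             && p(A_{ij} = 1 \mid z_i, z_j) = \sigma\!\left(z_i^{\top} z_j\right), \qquad p(Z) = \prod_{i} \mathcal{N}(z_i \mid 0, I) \\
&             && \mathcal{L} = \mathbb{E}_{q(Z \mid X, A)}\!\left[\log p(A \mid Z)\right]
                 - \mathrm{KL}\!\left[q(Z \mid X, A) \,\|\, p(Z)\right] \\
&             && \mathrm{KL}\!\left[q(Z \mid X, A) \,\|\, p(Z)\right]
                 = -\tfrac{1}{2} \sum_{i} \sum_{d} \left(1 + 2 \log s_{id} - \mu_{id}^{2} - s_{id}^{2}\right)
\end{aligned}
```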

Later, we show an example taken from the official PyTorch Geometric repository:
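The sketch below shows, in pure Python, the pieces that a (V)GAE training step combines:

- the inner-product decoder;
- a binary cross-entropy reconstruction loss over observed edges and sampled non-edges;
- the reparameterization trick;
- the Gaussian KL term.

It is not the repository's example. The GCN encoder is omitted, so `mu` and `logstd` are toy values standing in for encoder outputs. All function names here are hypothetical. In PyTorch Geometric these roles are played by `torch_geometric.nn.GAE` and `torch_geometric.nn.VGAE`, through methods such as `recon_loss` and `kl_loss`. The 1/num_nodes weighting of the KL term is an assumption modeled on common VGAE training loops.

```python
import math
import random


def sigmoid(x):
    """Logistic function used by the inner-product decoder."""
    return 1.0 / (1.0 + math.exp(-x))


def decode(z, i, j):
    """Inner-product decoder: estimated probability that edge (i, j) exists."""
    return sigmoid(sum(a * b for a, b in zip(z[i], z[j])))


def recon_loss(z, pos_edges, neg_edges, eps=1e-15):
    """Binary cross-entropy: observed edges should score high, sampled non-edges low."""
    pos = -sum(math.log(decode(z, i, j) + eps) for i, j in pos_edges) / len(pos_edges)
    neg = -sum(math.log(1.0 - decode(z, i, j) + eps) for i, j in neg_edges) / len(neg_edges)
    return pos + neg


def reparameterize(mu, logstd, rng):
    """VGAE sampling step: z = mu + exp(logstd) * eps, with eps ~ N(0, 1) per dimension."""
    return [
        [m + math.exp(s) * rng.gauss(0.0, 1.0) for m, s in zip(mu_i, ls_i)]
        for mu_i, ls_i in zip(mu, logstd)
    ]


def kl_loss(mu, logstd):
    """KL(q(Z | X, A) || N(0, I)) for diagonal Gaussians, averaged over nodes."""
    total = 0.0
    for mu_i, ls_i in zip(mu, logstd):
        total += -0.5 * sum(1.0 + 2.0 * s - m * m - math.exp(s) ** 2 for m, s in zip(mu_i, ls_i))
    return total / len(mu)


def sample_negative_edges(num_nodes, pos_edges, k, rng):
    """Hypothetical helper: draw k distinct ordered node pairs that are not observed edges.

    Assumes at least k such pairs exist; otherwise the loop would not terminate.
    """
    observed = set(pos_edges) | {(j, i) for i, j in pos_edges}
    neg = set()
    while len(neg) < k:
        i, j = rng.randrange(num_nodes), rng.randrange(num_nodes)
        if i != j and (i, j) not in observed:
            neg.add((i, j))
    return sorted(neg)


if __name__ == "__main__":
    rng = random.Random(0)
    num_nodes = 4
    pos_edges = [(0, 1), (1, 2), (2, 3)]  # toy path graph 0-1-2-3
    # Toy values standing in for the outputs of a GCN encoder (encoder omitted).
    mu = [[0.1, -0.2], [0.3, 0.0], [-0.1, 0.4], [0.2, 0.2]]
    logstd = [[-1.0, -1.0] for _ in range(num_nodes)]
    z = reparameterize(mu, logstd, rng)
    neg_edges = sample_negative_edges(num_nodes, pos_edges, len(pos_edges), rng)
    # KL term scaled by 1/num_nodes (an assumption mirroring common VGAE training loops).
    loss = recon_loss(z, pos_edges, neg_edges) + kl_loss(mu, logstd) / num_nodes
    print(f"negative edges: {neg_edges}, loss: {loss:.4f}")
```

Replacing the toy `mu` and `logstd` with the outputs of a GCN encoder, and minimizing `loss` by gradient descent, gives the usual VGAE training step. Dropping `reparameterize` and `kl_loss` and decoding directly from the encoder output gives the plain GAE.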

Download the slides and the Jupyter notebook from the official website:

Related videos:

PyTorch Geometric tutorial: Adversarial Regularizer (Variational) Graph Autoencoders

graph autoencoder pytorch geometric

Heterogeneous graph learning [Advanced PyTorch Geometric Tutorial 4]

GNN Project #4.1 - Graph Variational Autoencoders

Graph Neural Networks (GNN) using Pytorch Geometric | Stanford University

Pytorch Geometric tutorial: Special Guest: Matthias Fey

Pytorch Geometric tutorial: Convolutional Layers - Spectral methods

Pytorch Geometric tutorial: Edge analysis

Self-/Unsupervised GNN Training

PyTorch Geometric tutorial: Graph Generation

Pytorch Geometric tutorial: Aggregation Functions in GNNs

Graph Machine Learning for VC | Part 4 | Building GNNs with Pytorch Geometric | CVPR 2022 Tutorial

Diversity Autoencoder Graph

Pytorch Geometric tutorial: Graph pooling DIFFPOOL

Tutorial: PyTorch Geometric (Jianxuan You, Rex Ying)

Multi-head Variational Graph Autoencoder Constrained by Sum-product Networks

Pytorch Geometric tutorial: Graph attention networks (GAT) implementation

Pytorch Geometric tutorial: Metapath2Vec

Converting a Tabular Dataset to a Graph Dataset for GNNs

Machine Learning on Graphs with PyTorch Geometric, NVIDIA Triton, and ArangoDB

Continuous Representation Of Molecules using Graph Variational Autoencoder by Mohammadamin Tavakoli

SGVAE: Sequential Graphical Variational Autoencoder

GraphGym and PyG [Advanced PyTorch Geometric Tutorial 2]
