filmov

tv

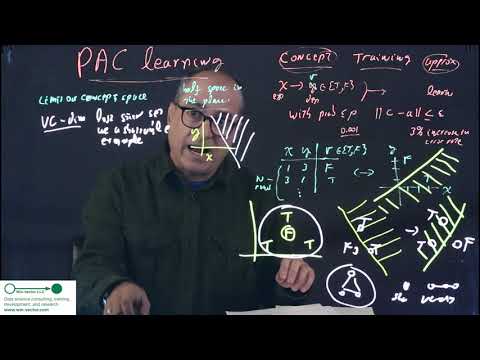

Ali Ghodsi, Lec 19: PAC Learning


Ali Ghodsi, Lec 16: Boosting

Ali Ghodsi, Lec 6: Logistic Regression, Perceptron

Ali Ghodsi, Lec [5.1]: Deep Learning, Recurrent neural network

Ali Ghodsi, Lec 15: t-SNE

Ali Ghodsi, Lec 13: Dual PCA, Supervised PCA

Ali Ghodsi, Lec 7: Backpropagation

Ali Ghodsi, Lec 1: Principal Component Analysis

Ali Ghodsi, Lec 17: Bagging, Convolutional Networks (part 1)

Ali Ghodsi, Lec 18: Convolutional neural network (Part 2)

Lecture 19 (EECS4404E) - PAC Learning

Ali Ghodsi, Lec [3,1]: Deep Learning, Word2vec

Ali Ghodsi, Lec 5: Model Selection, Neural Networks

Ali Ghodsi, Lec [1,1]: Deep Learning, Introduction

Ali Ghodsi, Lec [7]: Deep Learning, Restricted Boltzmann Machines (RBMs)

Ali Ghodsi, Lec [2,2]: Deep Learning, Regularization

Ali Ghodsi, Lec 18: Bagging

Ali Ghodsi, Lec 9: Stein’s Unbiased Risk Estimator (SURE)

Agnostic PAC-Learning | Lê Nguyên Hoang

Ali Ghodsi, Lec [2,1]: Deep Learning, Regularization

Ali Ghodsi, Lec 10: Weight decay

Lecture 25 - PAC Learning - Part I - 2019

PAC Learning and VC Dimension

Ali Ghodsi, Lec 15: Decision Tree, KNN
