filmov

tv

MATLAB SVM tutorial (fitcsvm)

Показать описание

I am sorry for everyone that I did not actually write code in the description.

--

clear; close all; clc;

%% preparing dataset

load fisheriris

species_num = grp2idx(species);

%%

% binary classification 형태로 만들기 위해 100개만...

X = randn(100,10);

X(:,[1,3,5,7]) = meas(1:100,:); % 1, 3, 5, 7 번 feature가 분류에 유용한 feature일 것임.

y = species_num(1:100);

rand_num = randperm(size(X,1));

X_train = X(rand_num(1:round(0.8*length(rand_num))),:);

y_train = y(rand_num(1:round(0.8*length(rand_num))),:);

X_test = X(rand_num(round(0.8*length(rand_num))+1:end),:);

y_test = y(rand_num(round(0.8*length(rand_num))+1:end),:);

%% CV partition

c = cvpartition(y_train,'k',5);

%% feature selection

opts = statset('display','iter');

classf = @(train_data, train_labels, test_data, test_labels)...

sum(predict(fitcsvm(train_data, train_labels,'KernelFunction','rbf'), test_data) ~= test_labels);

[fs, history] = sequentialfs(classf, X_train, y_train, 'cv', c, 'options', opts,'nfeatures',2);

%% Best hyperparameter

X_train_w_best_feature = X_train(:,fs);

Md1 = fitcsvm(X_train_w_best_feature,y_train,'KernelFunction','rbf','OptimizeHyperparameters','auto',...

'HyperparameterOptimizationOptions',struct('AcquisitionFunctionName',...

'expected-improvement-plus','ShowPlots',true)); % Bayes' Optimization 사용.

%% Final test with test set

X_test_w_best_feature = X_test(:,fs);

test_accuracy_for_iter = sum((predict(Md1,X_test_w_best_feature) == y_test))/length(y_test)*100

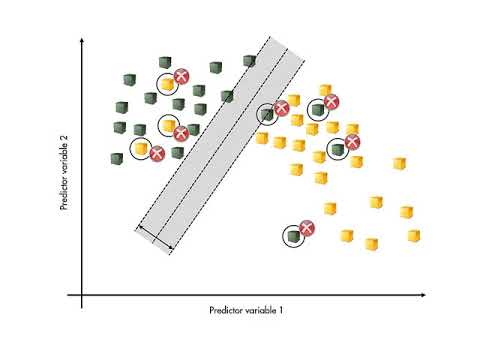

%% hyperplane 확인

figure;

hgscatter = gscatter(X_train_w_best_feature(:,1),X_train_w_best_feature(:,2),y_train);

hold on;

h_sv=plot(Md1.SupportVectors(:,1),Md1.SupportVectors(:,2),'ko','markersize',8);

% test set의 data를 하나 하나씩 넣어보자.

gscatter(X_test_w_best_feature(:,1),X_test_w_best_feature(:,2),y_test,'rb','xx')

% decision plane

XLIMs = get(gca,'xlim');

YLIMs = get(gca,'ylim');

[xi,yi] = meshgrid([XLIMs(1):0.01:XLIMs(2)],[YLIMs(1):0.01:YLIMs(2)]);

dd = [xi(:), yi(:)];

pred_mesh = predict(Md1, dd);

redcolor = [1, 0.8, 0.8];

bluecolor = [0.8, 0.8, 1];

pos = find(pred_mesh == 1);

h1 = plot(dd(pos,1), dd(pos,2),'s','color',redcolor,'Markersize',5,'MarkerEdgeColor',redcolor,'MarkerFaceColor',redcolor);

pos = find(pred_mesh == 2);

h2 = plot(dd(pos,1), dd(pos,2),'s','color',bluecolor,'Markersize',5,'MarkerEdgeColor',bluecolor,'MarkerFaceColor',bluecolor);

uistack(h1,'bottom');

uistack(h2,'bottom');

legend([hgscatter;h_sv],{'setosa','versicolor','support vectors'})

--

clear; close all; clc;

%% preparing dataset

load fisheriris

species_num = grp2idx(species);

%%

% binary classification 형태로 만들기 위해 100개만...

X = randn(100,10);

X(:,[1,3,5,7]) = meas(1:100,:); % 1, 3, 5, 7 번 feature가 분류에 유용한 feature일 것임.

y = species_num(1:100);

rand_num = randperm(size(X,1));

X_train = X(rand_num(1:round(0.8*length(rand_num))),:);

y_train = y(rand_num(1:round(0.8*length(rand_num))),:);

X_test = X(rand_num(round(0.8*length(rand_num))+1:end),:);

y_test = y(rand_num(round(0.8*length(rand_num))+1:end),:);

%% CV partition

c = cvpartition(y_train,'k',5);

%% feature selection

opts = statset('display','iter');

classf = @(train_data, train_labels, test_data, test_labels)...

sum(predict(fitcsvm(train_data, train_labels,'KernelFunction','rbf'), test_data) ~= test_labels);

[fs, history] = sequentialfs(classf, X_train, y_train, 'cv', c, 'options', opts,'nfeatures',2);

%% Best hyperparameter

X_train_w_best_feature = X_train(:,fs);

Md1 = fitcsvm(X_train_w_best_feature,y_train,'KernelFunction','rbf','OptimizeHyperparameters','auto',...

'HyperparameterOptimizationOptions',struct('AcquisitionFunctionName',...

'expected-improvement-plus','ShowPlots',true)); % Bayes' Optimization 사용.

%% Final test with test set

X_test_w_best_feature = X_test(:,fs);

test_accuracy_for_iter = sum((predict(Md1,X_test_w_best_feature) == y_test))/length(y_test)*100

%% hyperplane 확인

figure;

hgscatter = gscatter(X_train_w_best_feature(:,1),X_train_w_best_feature(:,2),y_train);

hold on;

h_sv=plot(Md1.SupportVectors(:,1),Md1.SupportVectors(:,2),'ko','markersize',8);

% test set의 data를 하나 하나씩 넣어보자.

gscatter(X_test_w_best_feature(:,1),X_test_w_best_feature(:,2),y_test,'rb','xx')

% decision plane

XLIMs = get(gca,'xlim');

YLIMs = get(gca,'ylim');

[xi,yi] = meshgrid([XLIMs(1):0.01:XLIMs(2)],[YLIMs(1):0.01:YLIMs(2)]);

dd = [xi(:), yi(:)];

pred_mesh = predict(Md1, dd);

redcolor = [1, 0.8, 0.8];

bluecolor = [0.8, 0.8, 1];

pos = find(pred_mesh == 1);

h1 = plot(dd(pos,1), dd(pos,2),'s','color',redcolor,'Markersize',5,'MarkerEdgeColor',redcolor,'MarkerFaceColor',redcolor);

pos = find(pred_mesh == 2);

h2 = plot(dd(pos,1), dd(pos,2),'s','color',bluecolor,'Markersize',5,'MarkerEdgeColor',bluecolor,'MarkerFaceColor',bluecolor);

uistack(h1,'bottom');

uistack(h2,'bottom');

legend([hgscatter;h_sv],{'setosa','versicolor','support vectors'})

Комментарии

0:24:49

0:24:49

0:03:55

0:03:55

0:06:53

0:06:53

0:14:29

0:14:29

0:05:51

0:05:51

0:06:44

0:06:44

0:06:43

0:06:43

0:01:12

0:01:12

0:10:09

0:10:09

0:10:28

0:10:28

0:03:57

0:03:57

0:10:45

0:10:45

0:15:04

0:15:04

0:09:01

0:09:01

0:06:50

0:06:50

0:04:48

0:04:48

0:10:55

0:10:55

0:03:01

0:03:01

0:05:47

0:05:47

0:11:42

0:11:42

0:17:22

0:17:22

0:07:08

0:07:08

1:15:28

1:15:28

0:01:22

0:01:22