Huffman Coding Explained: Optimal Lossless Data Compression in Information Theory #algorithm

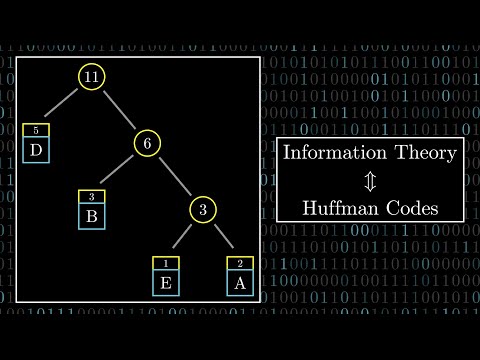

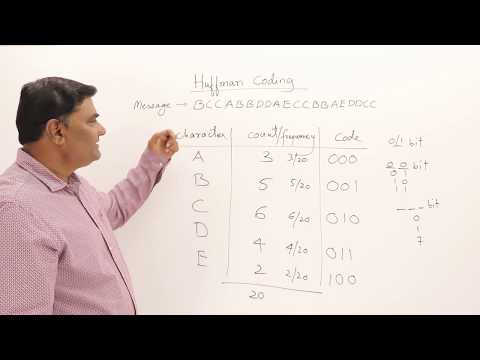

Discover the fundamentals of Huffman coding, a lossless data compression algorithm from information theory that is optimal among symbol-by-symbol prefix codes. In this video, we explain how Huffman coding assigns variable-length codes based on symbol frequency to reduce the cost of data transmission and storage. Aimed at bachelor's and master's engineering students in electrical engineering, telecommunications, electronics, IT, and networking, this tutorial covers binary trees, prefix-free codes, and practical applications of Huffman coding in real-world communication systems.

Huffman coding is a data compression technique that represents data with variable-length codes. Codes are assigned to symbols according to how often they occur: the more frequent a symbol, the shorter its code. When the symbol frequencies are uneven, the encoded stream is shorter than a fixed-length encoding of the same data. Huffman coding was developed by David A. Huffman in 1952. It is lossless, which means the original data can be reconstructed exactly from the compressed data. Huffman coding is used in a wide variety of applications, including image, audio, video, text, and web compression. In communication systems it can be followed by an error-correcting code, although Huffman coding does not correct errors itself.

#ict #HuffmanCoding #InformationTheory #LosslessDataCompression #DataCompression #BinaryTreeCoding #PrefixFreeCodes #EngineeringEssentials #DigitalCommunications #Telecommunications #ElectricalEngineering #ITEngineering #NetworkModels #electronics #computerscience #eranand
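To make the frequency-to-code-length idea concrete, here is a minimal Python sketch of Huffman coding. It is an illustration under stated assumptions, not the implementation shown in the video: the function names `huffman_codes`, `encode`, and `decode` are hypothetical, symbols are single characters, and frequencies are counted from the message itself.

```python
import heapq
from collections import Counter


def huffman_codes(text):
    """Return a dict mapping each symbol in `text` to a Huffman codeword."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # Assumption: a lone symbol still gets a one-bit codeword.
        return {next(iter(freq)): "0"}
    # Heap entries are (weight, tie_breaker, {symbol: partial_code}).
    # The unique tie_breaker keeps the dicts from ever being compared.
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees into one.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(text, codes):
    """Concatenate the codewords of every symbol in `text`."""
    return "".join(codes[ch] for ch in text)


def decode(bits, codes):
    """Decode a bit string; works because no codeword is a prefix of another."""
    inverse = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return "".join(out)


if __name__ == "__main__":
    message = "abracadabra"
    codes = huffman_codes(message)
    bits = encode(message, codes)
    print(codes)
    print(len(bits), "bits with Huffman codes")
    print(decode(bits, codes) == message)
```

In the sample message, the most frequent symbol `a` receives a one-bit codeword, while the rarer symbols receive longer ones. The exact bit patterns depend on how ties between equal weights are broken, but the total encoded length does not.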

Related topics: lossless data compression, prefix (instantaneous) codes, and the Kraft inequality. #PrefixCodes #InstantaneousCodes #KraftInequality #ShannonsTheorem

Related topics: Shannon's first theorem (the source coding theorem) and the limits of lossless data compression. #ShannonsFirstTheorem #SourceCodingTheorem

Source encoding is the process of converting information sources (text, audio, image, video, etc.) into bits, the universal currency of information in the digital world. The goal of lossless source encoding is to reduce the number of bits required to represent the information without losing any of it, which is done by removing redundancy. There are many different source encoding algorithms, each with its own strengths and weaknesses.

#SourceEncoding #EntropyEncoding #Entropy #DataUncertainty #ShannonsEntropy #CodingTheory
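One way to see how much redundancy a source encoder can remove is to compare the empirical entropy of a message with the cost of a fixed-length code. The sketch below is only illustrative: the function names are hypothetical, and it assumes a memoryless source whose symbol probabilities equal the observed character frequencies.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message):
    """Empirical Shannon entropy H = -sum(p * log2(p)) in bits per symbol."""
    total = len(message)
    if total == 0:
        return 0.0
    counts = Counter(message)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def fixed_length_bits_per_symbol(message):
    """Bits per symbol when every distinct symbol gets an equal-length code."""
    distinct = len(set(message))
    if distinct == 0:
        return 0
    # Assumption: at least one bit is spent even on a one-symbol alphabet.
    return max(1, math.ceil(math.log2(distinct)))


if __name__ == "__main__":
    message = "abracadabra"
    h = entropy_bits_per_symbol(message)
    fixed = fixed_length_bits_per_symbol(message)
    print(f"entropy:      {h:.3f} bits/symbol")
    print(f"fixed-length: {fixed} bits/symbol")
    print(f"redundancy:   {fixed - h:.3f} bits/symbol")
```

Under these assumptions, the gap between the two figures is the redundancy that a lossless code can remove, and the entropy is the lower bound on the average number of bits per symbol.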

#InformationTheory #ShannonsTheorem #SourceEncoding #DataAlgorithms #HuffmanCoding #DataCompression #electronics #digitalcommunication #informationtechnology

