
The Evolution of Gradient Descent


Which optimizer should we use to train our neural network? TensorFlow gives us lots of options, and there are way too many acronyms. We'll go over how the most popular ones work and, in the process, see how gradient descent has evolved over the years.
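To make the idea concrete, here is a minimal sketch of three update rules on a toy one-dimensional loss. The description does not say which optimizers the video covers, so the choice of plain gradient descent, momentum, and Adam is an assumption, as are the toy loss, the function names, and the hyperparameter values. It uses only the Python standard library rather than TensorFlow's own optimizer classes.

```python
import math


def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2, which has its minimum at w = 3.
    return 2.0 * (w - 3.0)


def gradient_descent(w, steps=500, lr=0.1):
    # Plain gradient descent: step against the gradient with a fixed learning rate.
    for _ in range(steps):
        w -= lr * grad(w)
    return w


def momentum(w, steps=500, lr=0.1, beta=0.9):
    # Momentum: accumulate a decaying sum of past gradients and step along it.
    v = 0.0
    for _ in range(steps):
        v = beta * v + grad(w)
        w -= lr * v
    return w


def adam(w, steps=500, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    # Adam: keep running averages of the gradient (m) and its square (v),
    # correct their startup bias, and scale the step by 1 / sqrt(v_hat).
    m = 0.0
    v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


if __name__ == "__main__":
    for name, fn in [("gradient descent", gradient_descent),
                     ("momentum", momentum),
                     ("adam", adam)]:
        print(name, fn(0.0))
```

All three start from `w = 0.0` and should end up near the minimum at 3. On a real network the gradient would come from backpropagation over mini-batches rather than from a closed-form expression like this one.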

Code from this video (with coding challenge):

Please subscribe! And like. And comment. That's what keeps me going.

More learning resources:

Join us in the Wizards Slack channel:

Follow me:

Sign up for my newsletter for exciting updates in the field of AI:


Gradient Descent vs Evolution | How Neural Networks Learn

STOCHASTIC Gradient Descent (in 3 minutes)

Gradient Descent vs Evolution

Who's Adam and What's He Optimizing? | Deep Dive into Optimizers for Machine Learning!

Gradient flow

NN in Arabic | The Evolution of Gradient Descent (Week 03)

'Correspondence between neuroevolution and gradient descent,' by S. Whitelam et al.

Natural Evolution Strategy (NES) part 1: Evolution gradient; the log-likelihood trick

Journey to the depths of the Loss Landscape | Deep Learning Gradient Descent Visualizations

WHAT IS A NEURAL NETWORK?? - Gradient Descent

VISUALISER L'EVOLUTION DE SON MODÈLE DE MACHINE LEARNING

DESCENTE DE GRADIENT (GRADIENT DESCENT) - ML#4

Trajectory of the gradient descent: a 2D illustration

Gradient descent, Rosenbrock function (Nelder)

An RNA evolutionary algorithm based on gradient descent for function optimization

Gradient descent, Rosenbrock function (TNC)

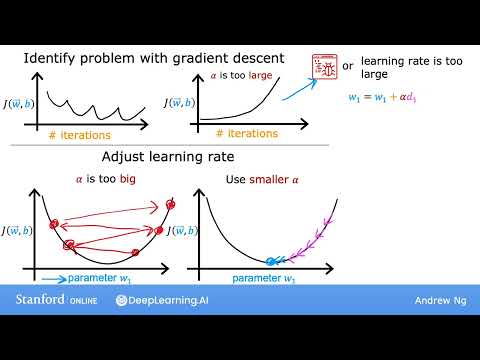

#28 Machine Learning Specialization [Course 1, Week 2, Lesson 2]

Optimization Algorithms

Analyzing Optimization and Generalization in Deep Learning via Trajectories of Gradient Descent

Trajectory of the stochastic gradient descent: a 2D illustration

Avi Wigderson 'Optimization, Complexity and Math (or, can we prove P ≠ NP using gradient descen...

Stochastic Gradient Descent: boosting its performances in R

Analyzing Optimization and Generalization in Deep Learning via Trajectories of Gradient Descent
