filmov

tv

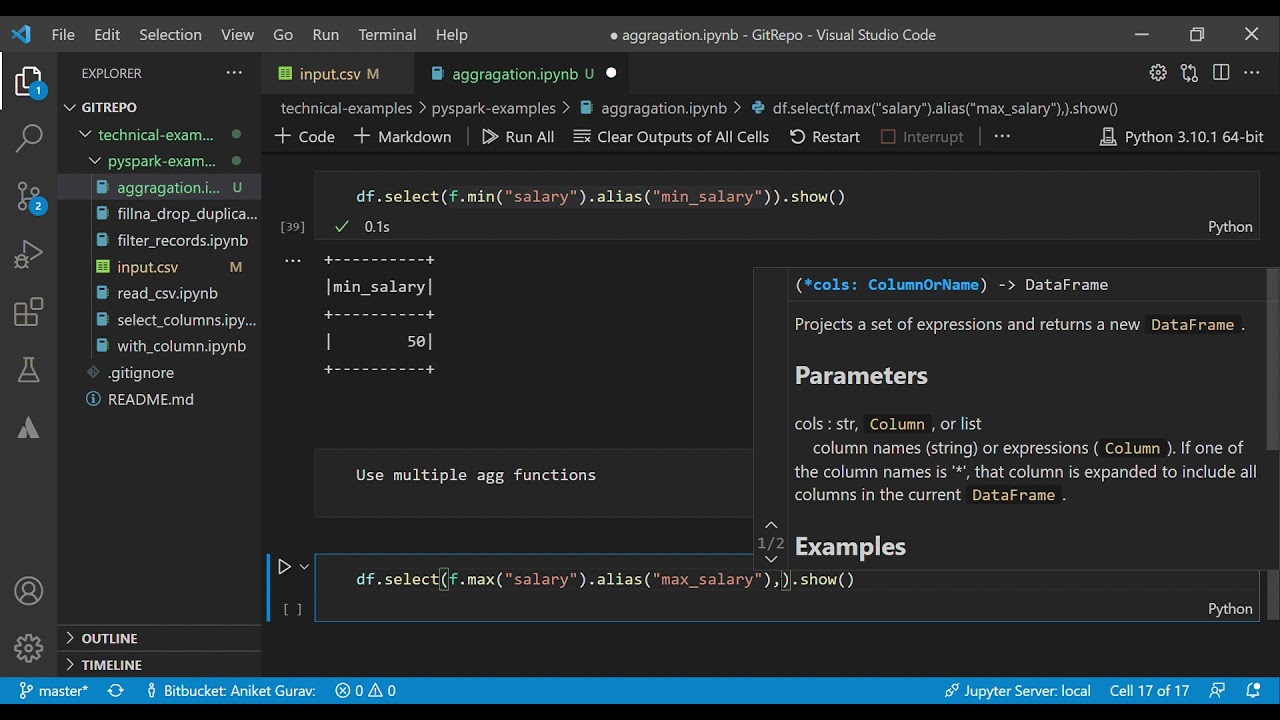

PySpark Examples - How to use Aggregation Functions DataFrame (sum,mean,max,min,groupBy) - Spark SQL


Spark SQL Aggregation Functions

- groupBy: groups records by one or more columns so that the aggregations below are computed per group.

- count: counts the number of records (per group when used after groupBy).

- sum: calculates the sum of a column's values across all records (or per group).

- mean: calculates the arithmetic mean (average) of a column's values; avg is an alias for it.

- min: returns the minimum value of a column.

- max: returns the maximum value of a column.

#pyspark #spark #python #sparksql #dataframe #aggregation #groupBy #sum #mean #avg #max #min


