Working with Nested JSON Using Spark | Parsing Nested JSON File in Spark | Hadoop Training Videos #2

Hello and welcome to the Big Data and Hadoop tutorial series powered by ACADGILD. In the previous session of this series, we learned about Hive and Spark integration. In this video, we will learn how to work with nested JSON using Spark and how to parse nested JSON in Spark.

Because structured data is much easier to query, in this tutorial we will look at an approach to converting nested JSON files, which are semi-structured data, into a tabular format. Please check out the execution part in the video.
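The video walks through the Spark execution itself. As a rough, non-authoritative illustration of the same idea, here is a plain-Python sketch (not Spark code, and not necessarily the approach used in the video) that turns nested JSON into rows and columns. Nested objects become dotted column names, similar to selecting `name.first` from a Spark struct column, and each array is exploded into one row per element, similar to Spark's `explode_outer` function. The helper names `flatten` and `to_table` and the sample records are hypothetical, made up for this example.

```python
import json
from typing import Any


def flatten(record: dict[str, Any], prefix: str = "") -> list[dict[str, Any]]:
    """Flatten one nested JSON object into one or more flat rows (hypothetical helper).

    Nested objects become dotted column names, much like selecting "name.first"
    from a Spark struct column. Each list is exploded into one row per element,
    much like Spark's explode_outer; lists nested directly inside lists are kept as-is.
    """
    rows: list[dict[str, Any]] = [{}]
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            sub_rows = flatten(value, prefix=name + ".")
        elif isinstance(value, list):
            # An empty list keeps the parent row and adds no columns,
            # so the missing values later show up as None (like a null in Spark).
            sub_rows = [] if value else [{}]
            for item in value:
                if isinstance(item, dict):
                    sub_rows.extend(flatten(item, prefix=name + "."))
                else:
                    sub_rows.append({name: item})
        else:
            sub_rows = [{name: value}]
        # Combine every partial row with every sub-row (a cross product),
        # which is also what two separate explodes on one record produce.
        rows = [{**row, **sub} for row in rows for sub in sub_rows]
    return rows


def to_table(records: list[dict[str, Any]]) -> tuple[list[str], list[list[Any]]]:
    """Flatten all records and align them on the union of their columns."""
    rows = [row for record in records for row in flatten(record)]
    columns = sorted({col for row in rows for col in row})
    return columns, [[row.get(col) for col in columns] for row in rows]


# Hypothetical sample data: customers with a nested name and an array of orders.
raw = """
[
  {"id": 1, "name": {"first": "Ann", "last": "Lee"},
   "orders": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]},
  {"id": 2, "name": {"first": "Raj", "last": "Iyer"}, "orders": []}
]
"""

columns, table = to_table(json.loads(raw))
print(columns)  # ['id', 'name.first', 'name.last', 'orders.qty', 'orders.sku']
for row in table:
    print(row)
# [1, 'Ann', 'Lee', 2, 'A1']
# [1, 'Ann', 'Lee', 1, 'B7']
# [2, 'Raj', 'Iyer', None, None]
```

Note the design choice for empty arrays: Spark's plain `explode` would drop customer 2 entirely because its orders array is empty, while `explode_outer` keeps the row with nulls. Which behavior you want depends on whether records with no array elements should still appear in the table.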

Watch the complete video to learn how to work with nested JSON in Spark and how to parse nested JSON files, and enroll in the course to become a data scientist.

Please like and share the video, give us your feedback, and subscribe to the channel for more tutorial videos.

#bigdatatutorials, #hadooptutorials, #nestedjson, #hadoop, #bigdata

For more updates on courses and tips, follow us on:

PARSING EXTREMELY NESTED JSON: USING PYTHON | RECURSION

Parse JSON in PHP | How to validate and process nested JSON data

Python - Accessing Nested Dictionary Keys

How to Reads Nested JSON in Pandas Python

127. How to Flatten A Complex Nested JSON Structure in ADF | Azure Data Factory Flatten Transform

JSON: Nested JSON

How To Visualize JSON Files

Python Tutorial: Working with JSON Data using the json Module

Converting Complex JSON to Pandas DataFrame

Exploring Nested JSON Objects | JSON Tutorial

15. Databricks| Spark | Pyspark | Read Json| Flatten Json

Convert Nested JSON To Simple JSON In JavaScript !

Learn JSON in 10 Minutes

Python Guide to Flatten Nested JSON with PySpark

C# JSON Deserialization | Serialization and Deserialization| Nested Json #4

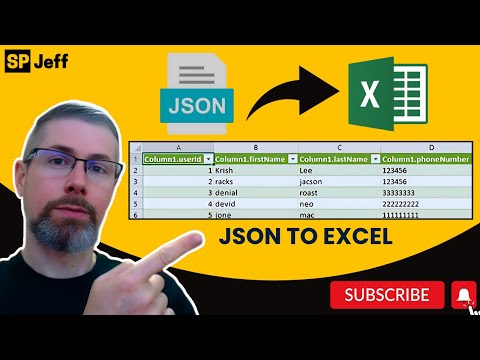

JSON into Excel

How to parse dynamic and nested JSON in java? - Rest assured API automation framework

HOW TO PARSE DIFFERENT TYPES OF NESTED JSON USING PYTHON | DATA FRAME | TRICKS

Flatten Nested Json in PySpark

Ch. 7.14 Dealing with Complex JSON - Nested JSON and JSON Arrays

how to parse highly nested json in power query

Array : Creating nested JSON using PHP MySQL

Reading Nested Json Data into Dataframe Part - 1
