filmov.tv

Gibbs sampling

A minilecture describing Gibbs sampling.

Gibbs Sampling : Data Science Concepts

Gibbs sampling

An introduction to Gibbs sampling

15 Gibbs sampling

Advanced Bayesian Methods: Gibbs Sampling

Gibbs sampler algorithm with code

Topic Models: Gibbs Sampling (13c)

How to derive a Gibbs sampling routine in general

[FREE FOR PROFIT] Westside Gunn x Freddie Gibbs Sample Type Beat - 'PRAISE THE A'

Bayesian Networks: MCMC with Gibbs Sampling

38 - Gibbs sampling

GIBBS SAMPLING BMM

Code With Me : Gibbs Sampling

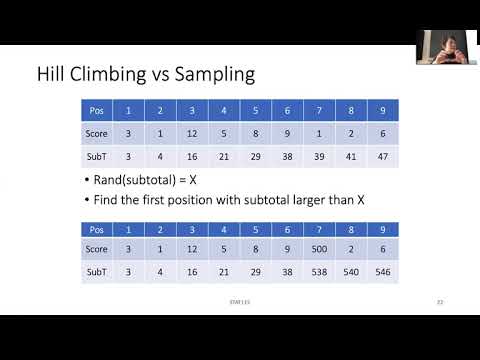

STAT115 Chapter 10.3 Gibbs Sampling for Motif Finding

Training Latent Dirichlet Allocation: Gibbs Sampling (Part 2 of 2)

Markov Chain Monte Carlo | Gibbs Sampler Algorithm

Markov Chain Monte Carlo (MCMC) : Data Science Concepts

MCMC and the Gibbs Sampling Example

Topic Model । Latent Dirichlet Allocation । Collapsed Gibbs Sampling । BBC news dataset । python...

Gibbs Sampling of Continuous Potentials on a Quantum Computer -- ICML 2024

Latent Dirichlet Allocation (LDA) with Gibbs Sampling Explained

[Gibbs sampler and MCMC] Gamma-Gamma-Poisson Exercise

Metropolis-Hastings, the Gibbs Sampler, and MCMC

Inference 4: Gibbs sampling

Комментарии

0:08:49

0:08:49

0:08:38

0:08:38

0:18:58

0:18:58

0:01:36

0:01:36

0:19:22

0:19:22

0:08:37

0:08:37

0:12:55

0:12:55

0:15:07

0:15:07

![[FREE FOR PROFIT]](https://i.ytimg.com/vi/ML7jkUmZGb0/hqdefault.jpg) 0:02:16

0:02:16

0:13:52

0:13:52

0:23:01

0:23:01

0:15:02

0:15:02

0:08:18

0:08:18

0:12:07

0:12:07

0:26:31

0:26:31

0:05:59

0:05:59

0:12:11

0:12:11

0:08:17

0:08:17

0:00:42

0:00:42

0:07:40

0:07:40

0:33:09

0:33:09

0:15:22

0:15:22

0:41:37

0:41:37

0:15:18

0:15:18