Modified Newton method | Exact Line Search | Theory and Python Code | Optimization Algorithms #4

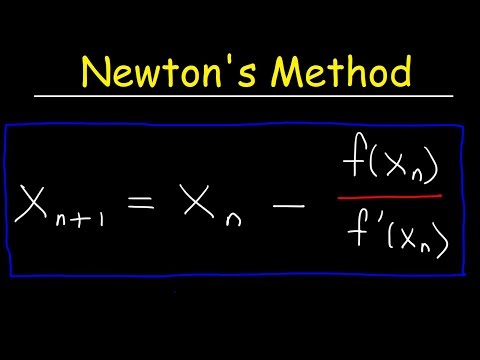

In this one, I will show you what the modified Newton algorithm is and how to use it with the exact line search method. We will approach both methods from intuitive and animated perspectives. The difference between the damped Newton method and its modified version is that the Hessian may become singular (or, more generally, fail to be positive definite) at some iterations, so the modified method adds a multiple of the identity to the Hessian at each iteration. This is known as diagonal loading, or Tikhonov regularization.

As a reminder, damped Newton, just like Newton's method, makes a local quadratic approximation of the function based on information from the current point and then moves toward the minimum of that approximation. Just imagine fitting a little quadratic surface, in higher dimensions, to your function at the current point, and then going to the minimum of that approximation to find the next point. In other words, what you are computing is the direction towards the minimum of the quadratic approximation. In fact, this animation shows why, in certain cases, Newton's method can converge to a saddle point or a maximum: if the eigenvalues of the Hessian are non-positive, the local quadratic approximation is an upside-down paraboloid (and with eigenvalues of mixed sign it is a saddle), so the point Newton's method jumps to is not a minimum.
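In symbols, one iteration of the modified Newton method with exact line search can be summarized as below, writing g_k for the gradient and H_k for the Hessian at the current point x_k, and μ_k ≥ 0 for the diagonal-loading shift. The rule for μ_k in the second line (with a small threshold δ > 0) is only one common choice and an assumption here, not necessarily the one used in the video. The last line is the closed-form exact step that holds only when f is itself a quadratic with positive-definite Hessian Q.

```latex
\begin{aligned}
d_k &= -\left(H_k + \mu_k I\right)^{-1} g_k, \qquad H_k + \mu_k I \succ 0,\\
\mu_k &= \max\!\left(0,\; \delta - \lambda_{\min}(H_k)\right) \quad \text{(one possible choice, } \delta > 0\text{)},\\
\alpha_k &= \arg\min_{\alpha \ge 0} f(x_k + \alpha d_k),\\
x_{k+1} &= x_k + \alpha_k d_k,\\
g_k^\top d_k &= -g_k^\top \left(H_k + \mu_k I\right)^{-1} g_k < 0 \quad \text{whenever } g_k \neq 0,\\
\alpha_k &= -\frac{g_k^\top d_k}{d_k^\top Q\, d_k} \quad \text{if } f(x) = \tfrac12 x^\top Q x - b^\top x,\; Q \succ 0.
\end{aligned}
```

The negative inner product in the fifth line is why diagonal loading guarantees a descent direction; with μ_k = 0 and an indefinite H_k, the plain Newton direction can point uphill or toward a saddle.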

⏲Outline⏲

00:00 Introduction

00:55 Modified Newton Method

03:41 Exact line search

04:55 Python Implementation

18:46 Animation Module

34:14 Animating Iterations

37:02 Outro
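The video's own Python implementation is not reproduced in this description, so here is a minimal NumPy sketch of the same idea, offered as an illustration rather than the author's code. Assumptions: the shift rule mu = max(0, delta - lambda_min), golden-section search on [0, alpha_max] as a numerical stand-in for the exact line search (it assumes the function is unimodal along the search line), and the test function f(x, y) = x^4 - 2x^2 + y^2, which has a saddle at the origin and minima at (±1, 0). The names `regularized_hessian`, `golden_section`, `modified_newton`, `plain_newton` and the parameters `delta`, `alpha_max` are hypothetical.

```python
import numpy as np


def regularized_hessian(H, delta=1e-2):
    """Diagonal loading (Tikhonov regularization): return H + mu*I, using the
    hypothetical rule mu = max(0, delta - lambda_min) so that every eigenvalue
    of the result is at least delta."""
    lam_min = np.linalg.eigvalsh(H).min()  # H is symmetric for a C^2 function
    mu = max(0.0, delta - lam_min)
    return H + mu * np.eye(H.shape[0])


def golden_section(phi, a, b, tol=1e-9):
    """Approximately minimize phi on [a, b], assuming phi is unimodal there."""
    r = (np.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio, about 0.618
    c, d = b - r * (b - a), a + r * (b - a)
    while b - a > tol:
        if phi(c) < phi(d):  # minimum lies in [a, d]
            b, d = d, c
            c = b - r * (b - a)
        else:  # minimum lies in [c, b]
            a, c = c, d
            d = a + r * (b - a)
    return 0.5 * (a + b)


def modified_newton(f, grad, hess, x0, alpha_max=2.0, tol=1e-6, max_iter=100):
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            break
        # The shifted Hessian is positive definite, so d is a descent direction.
        d = -np.linalg.solve(regularized_hessian(hess(x)), g)
        # "Exact" line search, done numerically: alpha = argmin f(x + alpha*d).
        alpha = golden_section(lambda a: f(x + a * d), 0.0, alpha_max)
        x = x + alpha * d
    return x


def plain_newton(grad, hess, x0, iters=50):
    """Unmodified Newton: jump to the stationary point of the quadratic model."""
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        x = x - np.linalg.solve(hess(x), grad(x))
    return x


# Test function: minima at (+-1, 0), saddle point at (0, 0).
f = lambda v: v[0] ** 4 - 2 * v[0] ** 2 + v[1] ** 2
grad = lambda v: np.array([4 * v[0] ** 3 - 4 * v[0], 2 * v[1]])
hess = lambda v: np.array([[12 * v[0] ** 2 - 4, 0.0], [0.0, 2.0]])

x0 = [0.3, 0.5]
print("plain Newton:   ", plain_newton(grad, hess, x0))  # approaches the saddle (0, 0)
print("modified Newton:", modified_newton(f, grad, hess, x0))  # approaches the minimum (1, 0)
```

From the same starting point, plain Newton is pulled into the saddle because the Hessian's first eigenvalue is negative there, while the diagonally loaded version takes a descent step and the line search carries it to the minimum near (1, 0).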

📚Related Courses:

🔴 Subscribe for more videos on CUDA programming

👍 Smash that like button, in case you find this tutorial useful.

👁🗨 Speak up and comment, I am all ears.

💰 If you are able to, donate to help the channel

#python #optimization #algorithm
