Sanjeev Arora: Toward Theoretical Understanding of Deep Learning (ICML 2018 tutorial)

Audio starts at 1:46

Abstract:

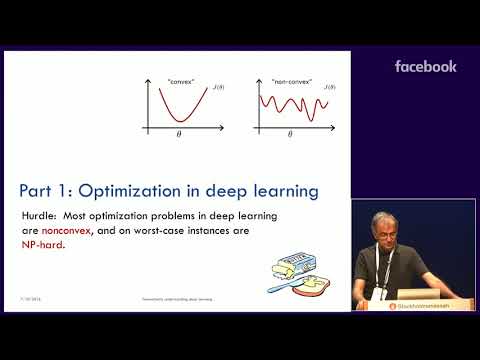

We survey progress in recent years toward developing a theory of deep learning. Works have started addressing issues such as: (a) the effect of architecture choices on the optimization landscape, training speed, and expressiveness; (b) quantifying the true "capacity" of the net, as a step toward understanding why nets with far more parameters than training examples nevertheless do not overfit; (c) understanding the inherent power and limitations of deep generative models, especially (various flavors of) generative adversarial nets (GANs); and (d) understanding properties of simple RNN-style language models and some of their solutions (word embeddings and sentence embeddings).

While these are early results, they help illustrate what kind of theory may ultimately arise for deep learning.

Presented by Sanjeev Arora (Princeton University, Institute for Advanced Study)

Related videos:

Sanjeev Arora: Toward Theoretical Understanding of Deep Learning

Toward theoretical understanding of deep learning (Lecture 2) by Sanjeev Arora

Sanjeev Arora | Opening the black box: Toward mathematical understanding of deep learning

Theoretical analysis of unsupervised learning (Lecture 3) by Sanjeev Arora

Sanjeev Arora: Why do deep nets generalize, that is, predict well on unseen data

Sanjeev Arora on 'A theoretical approach to semantic representations'

Mathematics of Machine Learning: An introduction (Lecture - 01) by Sanjeev Arora

Sanjeev Arora - Is Optimization the Right Language to Understand Deep Learning?

Is Optimization the Right Language to Understand Deep Learning? - Sanjeev Arora

Brief introduction to deep learning and the 'Alchemy' controversy - Sanjeev Arora

Sanjeev Arora on the future of computing.

A Theory for Emergence of Complex Skills in Language Models

6th HLF – Laureate interview: Sanjeev Arora

Sanjeev Arora - Universality Phenomena in Machine Learning, and Their Applications (May 13, 2016)

Sanjeev Arora - When and How Can We Compute Approximately Optimal Solutions? (Feb 9, 2011)

A Theoretical Approach to Semantic Coding and Hashing

Some things you need to know about machine learning but didn't know... - Sanjeev Arora

Sanjeev Arora: What is Machine Learning?

Sanjeev Arora | Provable Bounds for Machine Learning

What is Machine Learning and Deep Learning? Prof. Sanjeev Arora, Princeton University, USA

IDSS Distinguished Speaker Seminar: Sanjeev Arora | March 5, 2019

Can machines learn without supervision? by Sanjeev Arora
