filmov

tv

Part 9: Gradient Boosting Implementation in Python

Показать описание

Gradient Boosting is a powerful machine learning technique used for both classification and regression tasks, known for its ability to build highly accurate predictive models. The core idea of Gradient Boosting is to create an ensemble of weak learners, typically shallow decision trees, where each new tree attempts to correct the errors made by the previous trees. By sequentially adding trees, Gradient Boosting effectively builds a model that improves with each iteration, focusing more closely on difficult-to-predict instances over time. This iterative process results in a strong overall model that combines the strengths of all individual learners.

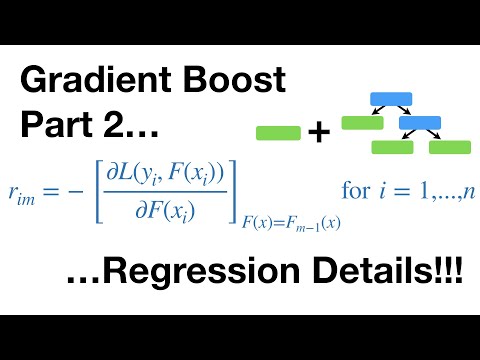

The algorithm works by minimizing a specified loss function, such as mean squared error for regression or log loss for classification, using a method called gradient descent. In each iteration, Gradient Boosting calculates the gradient, or the direction in which the model’s predictions should change, to reduce the loss. A new decision tree is then fitted to this gradient, learning from the residual errors or the differences between the actual values and the predictions. By adjusting predictions incrementally based on the gradients, Gradient Boosting fine-tunes the model in a way that captures complex patterns in the data.

Gradient Boosting is highly customizable, offering various parameters to control its performance and complexity. Key parameters include the learning rate, which determines the contribution of each tree to the overall model, and the number of trees, which controls the depth of learning. A lower learning rate combined with a larger number of trees usually results in better generalization but at a higher computational cost. Regularization techniques, such as limiting tree depth and adding constraints on individual trees, are often applied to prevent overfitting and enhance the model’s ability to generalize to new data.

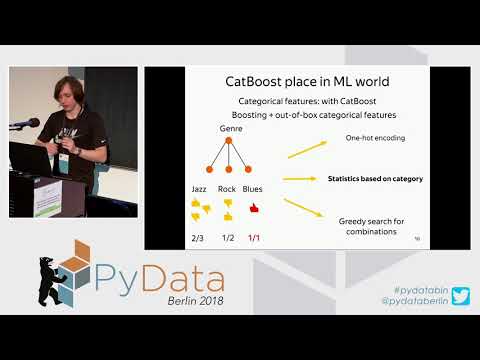

Despite its strengths, Gradient Boosting is computationally intensive, especially with large datasets, due to the sequential nature of training trees. This can make it slower than other ensemble methods like Random Forests. However, optimized implementations, such as XGBoost, LightGBM, and CatBoost, have made Gradient Boosting more efficient and scalable for real-world applications. Gradient Boosting is widely used in fields like finance, healthcare, and marketing for tasks ranging from fraud detection and credit scoring to customer segmentation, where its high predictive accuracy is particularly valuable.

The algorithm works by minimizing a specified loss function, such as mean squared error for regression or log loss for classification, using a method called gradient descent. In each iteration, Gradient Boosting calculates the gradient, or the direction in which the model’s predictions should change, to reduce the loss. A new decision tree is then fitted to this gradient, learning from the residual errors or the differences between the actual values and the predictions. By adjusting predictions incrementally based on the gradients, Gradient Boosting fine-tunes the model in a way that captures complex patterns in the data.

Gradient Boosting is highly customizable, offering various parameters to control its performance and complexity. Key parameters include the learning rate, which determines the contribution of each tree to the overall model, and the number of trees, which controls the depth of learning. A lower learning rate combined with a larger number of trees usually results in better generalization but at a higher computational cost. Regularization techniques, such as limiting tree depth and adding constraints on individual trees, are often applied to prevent overfitting and enhance the model’s ability to generalize to new data.

Despite its strengths, Gradient Boosting is computationally intensive, especially with large datasets, due to the sequential nature of training trees. This can make it slower than other ensemble methods like Random Forests. However, optimized implementations, such as XGBoost, LightGBM, and CatBoost, have made Gradient Boosting more efficient and scalable for real-world applications. Gradient Boosting is widely used in fields like finance, healthcare, and marketing for tasks ranging from fraud detection and credit scoring to customer segmentation, where its high predictive accuracy is particularly valuable.

0:06:12

0:06:12

0:02:30

0:02:30

0:26:46

0:26:46

0:06:26

0:06:26

0:14:48

0:14:48

0:36:29

0:36:29

0:24:06

0:24:06

0:10:44

0:10:44

0:17:31

0:17:31

1:27:14

1:27:14

0:43:25

0:43:25

0:41:28

0:41:28

0:40:09

0:40:09

0:11:03

0:11:03

1:32:50

1:32:50

0:50:59

0:50:59

0:23:37

0:23:37

![[MXML-11-03] Extreme Gradient](https://i.ytimg.com/vi/Ms_xxQFrTWc/hqdefault.jpg) 0:16:05

0:16:05

0:05:59

0:05:59

0:08:50

0:08:50

0:16:30

0:16:30

0:05:07

0:05:07

![[MXML-11-06] Extreme Gradient](https://i.ytimg.com/vi/oKLBon15bTc/hqdefault.jpg) 0:18:32

0:18:32

0:09:38

0:09:38