How to build a Simple Neural Network in Python (One Layer) Part I

The example is from the book "Machine Learning for Finance" by Jannes Klaas.

In this video we build a simple one-layer neural network from scratch in Python. Specifically, we set up the input layer, initialize random weights and a bias, and feed the weighted sum to the activation function (sigmoid).
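A minimal sketch of that forward pass, under stated assumptions: the toy input `X`, the seed, the weight range, and the names `sigmoid` and `forward` are illustrative and not taken from the video or the book. A library such as NumPy would normally handle the arithmetic; this version sticks to the standard library so it runs anywhere.

```python
import math
import random

def sigmoid(z):
    """Squash a real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(X, W, b):
    """Return the layer output A: one sigmoid activation per input row."""
    A = []
    for row in X:
        # z is the weighted sum of the inputs plus the bias
        z = sum(x_i * w_i for x_i, w_i in zip(row, W)) + b
        A.append(sigmoid(z))
    return A

# Hypothetical toy data: 4 samples with 3 features each
X = [[0, 1, 0],
     [1, 0, 0],
     [1, 1, 1],
     [0, 1, 1]]

random.seed(1)  # fixed seed so the random weights are reproducible
W = [random.uniform(-1.0, 1.0) for _ in range(3)]  # one weight per feature
b = 0.0  # assumption: the bias starts at zero in this sketch

A = forward(X, W, b)
print(A)  # four activations, each strictly between 0 and 1
```

Because the weights are random and untrained, the activations carry no meaning yet; they only show that the data flows through the layer.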

The layer's output is then compared with the actual output (y), and the difference is measured with the binary cross-entropy loss.
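The loss can be sketched as follows. This is the standard binary cross-entropy formula averaged over the samples; the function name, the `eps` clipping, and the sample values are assumptions made for illustration, not the video's code.

```python
import math

def binary_cross_entropy(y, A, eps=1e-12):
    """Mean binary cross-entropy between labels y and predictions A."""
    total = 0.0
    for y_i, a_i in zip(y, A):
        # Clip predictions away from 0 and 1 so log() never receives 0
        a_i = min(max(a_i, eps), 1.0 - eps)
        total += y_i * math.log(a_i) + (1 - y_i) * math.log(1.0 - a_i)
    return -total / len(y)

# Hypothetical labels and layer outputs
y = [0, 1, 1, 0]
A = [0.5, 0.5, 0.5, 0.5]  # a maximally uncertain prediction for every sample

loss = binary_cross_entropy(y, A)
print(loss)  # equals ln(2), about 0.693, for all-0.5 predictions
```

Predictions close to the true labels give a loss near zero, while confident wrong predictions are penalized heavily, which is what makes this loss a useful training signal.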

If you found this interesting, I will continue with optimizing the network, using gradient descent and updating the parameters via backpropagation, and provide details on how to proceed.

Link to Book (Not affiliated btw)

Mentioned articles:

Bias and weights

Cross Entropy Loss:

00:00 - 01:36 Introduction / Resources

01:36 - 02:31 Input Layer and output (y)

02:31 - 04:20 Initialize random weights and bias

04:20 - 04:50 Getting z (Summation + bias)

04:50 - 05:53 Sigmoid function and getting A (Output of layer)

05:53 - 06:16 Comparing layer output with y

06:16 - 10:20 Binary Cross Entropy Loss

10:20 - 10:45 What needs to be done / outlook

#Python #NeuralNetwork

Minecraft | How to Build a Simple Survival House | Starter House

Minecraft: How To Build a Simple Survival House

Complete Backyard Shed Build In 3 Minutes - iCreatables Shed Plans

Minecraft: How To Build a Simple Survival House

Simple off grid Cabin that anyone can build & afford

Minecraft: How To Build Simple Underwater Starter House! (6 Minutes!)

Minecraft: How To Build A Survival Starter House Tutorial (#4)

How to Build a Simple, Sturdy Greenhouse from 2x4's | Modern Builds | EP. 58

how to build a wooden house simple survival farm house tutorial

How to Build a Simple Dovetailed Box | Paul Sellers

Minecraft: 20+ Simple Build Hacks!

Minecraft: How To Build a Simple MOUNTAIN BASE!

How to Build a Tiny Pole Barn in -5 MINUTES- | Chicken House Plans

Build a Pair of Simple Sawhorses! Strong, Cheap, Stackable

How To Build A Simple DIY Dresser | Free Woodworking Project Plan

Minecraft | How to Build A Simple Japanese Starter House Tutorial

How to Build a Simple Bed Frame on a Budget - EASY DIY

Complete build a simple house

Minecraft | How to Build a Simple Survival House | Starter House

How To Build a Simple Cheap Work Bench

Minecraft: How To Build Simple Underwater Starter House! (5 Minutes!)

Minecraft: How To Build a Simple Starter House

How to Build a SIMPLE Dresser (Basic Tools!)

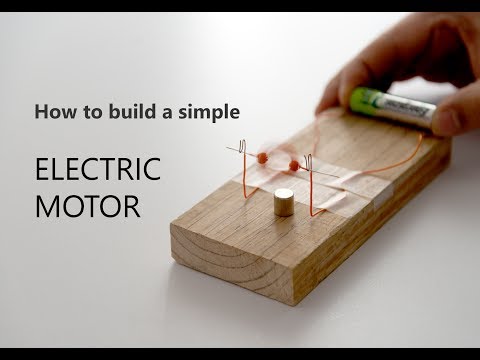

HOW TO BUILD A SIMPLE ELECTRIC MOTOR
