Gregory Schwartzman - SGD Through the Lens of Kolmogorov Complexity

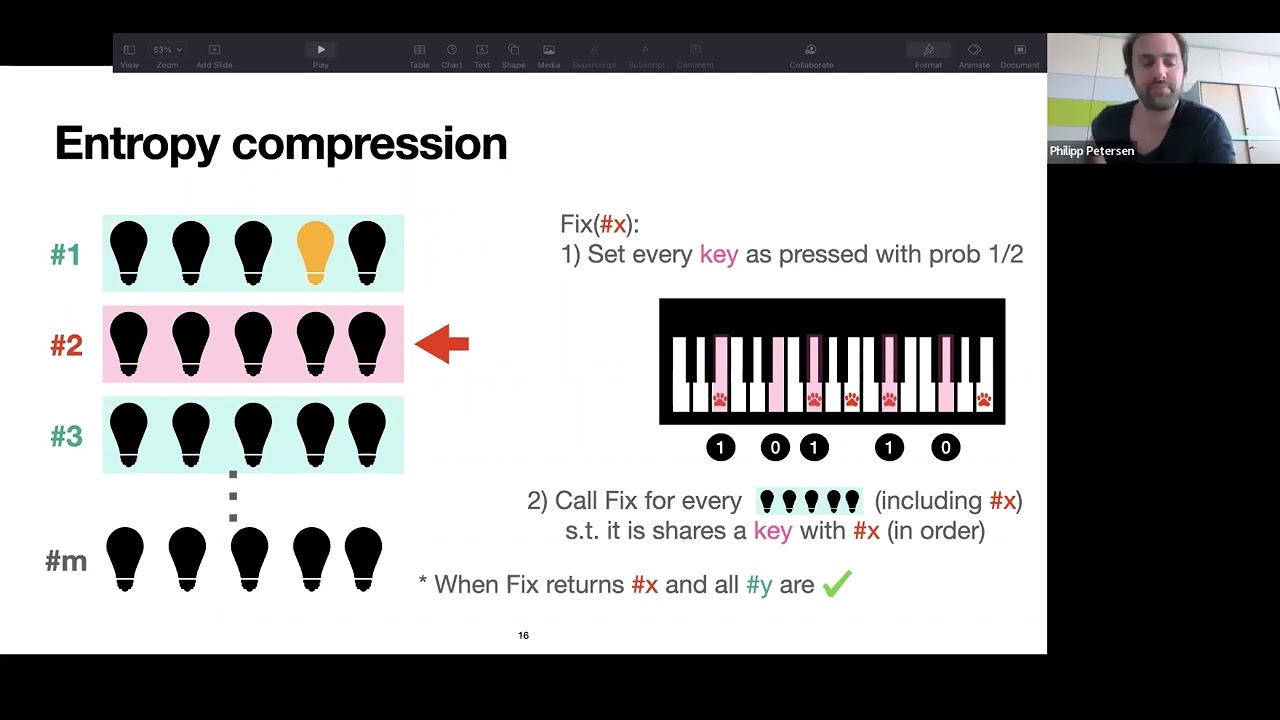

Abstract: In this talk, we present global convergence guarantees for stochastic gradient descent (SGD) via an entropy compression argument. We do so under two main assumptions: (1) Local progress: the model accuracy improves on average over batches. (2) Models compute simple functions: the function computed by the model has low Kolmogorov complexity. It is sufficient for these assumptions to hold for only a tiny fraction of the epochs. Intuitively, our results show that intermittent local progress of SGD implies global progress. Assumption 2 trivially holds for underparameterized models; hence, our work gives the first convergence guarantee for general, underparameterized models. Furthermore, this is the first result that is completely model agnostic: we don't require the model to have any specific architecture or activation function, and it need not even be a neural network.
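A short description-length bound shows why Assumption 2 holds automatically once a model is underparameterized. This is an illustrative sketch, not necessarily the argument used in the talk. Assume the model has $d$ parameters, each stored with $b$ bits. Also assume that the architecture, the values of $d$ and $b$, and the code that evaluates the model fit in a fixed program of $c$ bits. That program, together with the $d\,b$ parameter bits, reconstructs the computed function $f_\theta$, so

$$
K(f_\theta) \;\le\; d\,b + c .
$$

For a training set of $n$ examples with labels drawn from $k$ classes, merely listing the labels can take up to $n \log_2 k$ bits. When $d\,b + c \ll n \log_2 k$, as is the case when the parameter count is small relative to the dataset, the function the model computes is simple in this sense no matter what values SGD assigns to $\theta$. Reading "low Kolmogorov complexity" as "small relative to $n \log_2 k$" is an assumption made here for illustration. The talk's precise threshold may differ.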