WAY Better Than Stable Diffusion! - GAN AI Art Models

Nord’s 30-day money-back guarantee! ✌ Don’t forget to use my discount code: mattvidproai

▼ Link(s) From Today’s Video:

-------------------------------------------------

▼ Extra Links of Interest:

-------------------------------------------------

Thanks for watching Matt Video Productions! I make all sorts of videos here on YouTube: technology, tutorials, and reviews. Enjoy your stay here, and subscribe!

All Suggestions, Thoughts And Comments Are Greatly Appreciated… Because I Actually Read Them.

-------------------------------------------------

WAY Better Than Stable Diffusion! - GAN AI Art Models

ComfyUI - Getting Started : Episode 1 - Better than AUTO1111 for Stable Diffusion AI Art generation

Wake up babe, a dangerous new open-source AI model is here

Why Does Diffusion Work Better than Auto-Regression?

Better than Sora!? FREE AI video generator: Dream Machine #ai #lumaai #sora

Stable Diffusion explained (in less than 10 minutes)

Using # #midjourney vs #Stable Diffusion

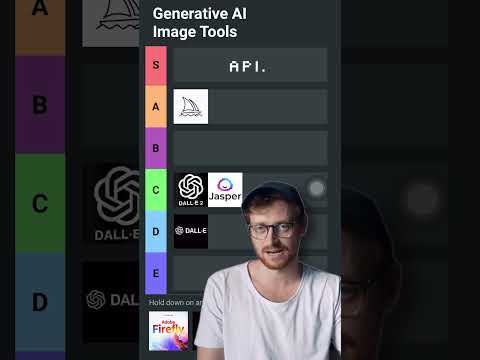

Ranking The Best AI Image Generation Tools

Text to Image AI: Generate Unlimited 4K Photos (No Limits!)

HOW much 💵💰💵 did Stable Diffusion COST to Train?

This NEW AI Creates Videos Better Than Reality – By Far the Best AI Tool of 2025!

Stable diffusion VS Midjourney: All you need to know

Testing Stable Diffusion inpainting on video footage #shorts

Is Stable Diffusion Actually Better Than Dall-e 2?

Why ComfyUI is The BEST UI for Stable Diffusion!

Midjourney and Leonardo.ai are NO longer NEEDED | How to make UNLIMITED high quality AI images

Why Hugging Face is WAY more than the Stable Diffusion Host | The Ai Nexus

Is Kandinsky-2 is better than Stable Diffusion?

Diffusion Models versus Generative Adversarial Networks (GANs) | AI Image Generation Models

Kling vs. Runway Gen 3 vs. Luma Dream Machine vs. Pixverse

OK. Now I'm Scared... AI Better Than Reality!

Stable Diffusion 2.0: Better Than Midjourney? 🤯🚀

Stable Diffusion XL 0.9 IS HERE! Better than Midjourney?

64GB RAM is Slower Than 32GB, Here's Why! 🐌 #gamingpc #pcbuildtips #ram #corsair #pcbuild

Комментарии

0:13:59

0:13:59

0:19:01

0:19:01

0:04:45

0:04:45

0:20:18

0:20:18

0:00:31

0:00:31

0:09:56

0:09:56

0:00:28

0:00:28

0:00:57

0:00:57

0:05:37

0:05:37

0:00:32

0:00:32

0:06:54

0:06:54

0:08:19

0:08:19

0:00:16

0:00:16

0:09:30

0:09:30

0:19:27

0:19:27

0:02:13

0:02:13

0:09:09

0:09:09

0:27:59

0:27:59

0:02:29

0:02:29

0:20:16

0:20:16

0:08:10

0:08:10

0:03:49

0:03:49

0:08:55

0:08:55

0:00:35

0:00:35