filmov

tv

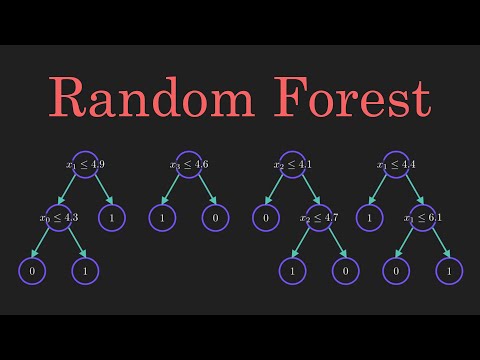

Random Forest in Machine Learning: Easy Explanation for Data Science Interviews

Показать описание

Random Forest is one of the most useful pragmatic algorithms for fast, simple, flexible predictive modeling. In this video, I dive into how Random Forest works, how you can use it to reduce variance, what makes it “random,” and the most common pros and cons associated with using this method.

🟢Get all my free data science interview resources

// Comment

Got any questions? Something to add?

Write a comment below to chat.

// Let's connect on LinkedIn:

====================

Contents of this video:

====================

00:00 Introduction

01:09 What Is Random Forest?

02:10 How Random Forest Works

03:53 Why Is Random Forest Random?

04:20 Random Forest vs. Bagging

04:57 Hyperparameters

06:18 Variance Reduction

09:04 Pros and Cons of Random Forest

🟢Get all my free data science interview resources

// Comment

Got any questions? Something to add?

Write a comment below to chat.

// Let's connect on LinkedIn:

====================

Contents of this video:

====================

00:00 Introduction

01:09 What Is Random Forest?

02:10 How Random Forest Works

03:53 Why Is Random Forest Random?

04:20 Random Forest vs. Bagging

04:57 Hyperparameters

06:18 Variance Reduction

09:04 Pros and Cons of Random Forest

What is Random Forest?

Random Forest Algorithm Clearly Explained!

StatQuest: Random Forests Part 1 - Building, Using and Evaluating

Random Forest Algorithm - Random Forest Explained | Random Forest in Machine Learning | Simplilearn

Machine Learning Tutorial Python - 11 Random Forest

Random Forest in 7 minutes

Tutorial 43-Random Forest Classifier and Regressor

Random Forest 🌳 in Machine Learning 🧑💻👩💻

Random Forest Algorithms

Random Forest in Machine Learning: Easy Explanation for Data Science Interviews

Visual Guide to Random Forests

Random Forest Algorithm | Random Forest Complete Explanation | Data Science Training | Edureka

Random Forest Algorithm Explained with Python and scikit-learn

Random Forest Ensemble Learning Algorithm | Random Forest Learning in Machine Learning Mahesh Huddar

Random Forest Algorithm | Machine Learning Algorithm | Tutorialspoint

Machine Learning | Random Forest

Random Forest Explained | Random Forest Algorithm in Machine Learning | Data Science | Intellipaat

Random Forest Classification | Machine Learning | Python

Introduction to Random Forest | Intuition behind the Algorithm

All Learning Algorithms Explained in 14 Minutes

Random Forest Explained | Random Forest Python | Machine Learning Python | Python Training | Edureka

How Random Forest Work|How Random Forest Algorithm Works|Random Forest Machine Learning

Random Forest Step-Wise Explanation ll Machine Learning Course Explained in Hindi

Lecture 18-Random Forest Algorithm

Комментарии

0:05:21

0:05:21

0:08:01

0:08:01

0:09:54

0:09:54

0:45:35

0:45:35

0:12:48

0:12:48

0:07:38

0:07:38

0:10:18

0:10:18

0:08:33

0:08:33

0:06:36

0:06:36

0:11:02

0:11:02

0:05:12

0:05:12

0:34:10

0:34:10

0:11:49

0:11:49

0:09:06

0:09:06

0:06:49

0:06:49

0:10:35

0:10:35

0:54:34

0:54:34

0:17:29

0:17:29

0:33:55

0:33:55

0:14:10

0:14:10

0:48:17

0:48:17

0:13:01

0:13:01

0:11:27

0:11:27

0:10:23

0:10:23