
How to Use Local Resources in Google Colab for AI/ML Model Training


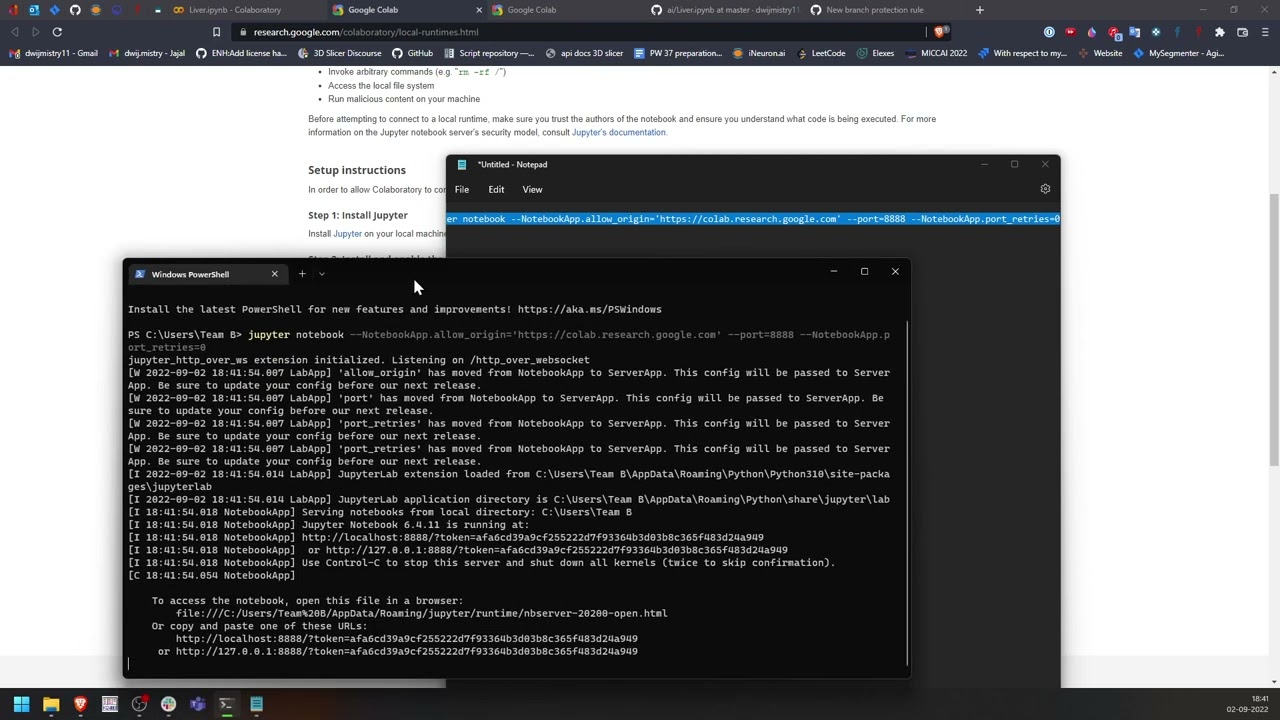

Setup instructions

In order to allow Colaboratory to connect to your locally running Jupyter server, you'll need to perform the following steps.

Step 1: Install Jupyter

Install Jupyter on your local machine.

Step 2: Install and enable the jupyter_http_over_ws Jupyter extension (one-time)

The jupyter_http_over_ws extension is authored by the Colaboratory team and available on GitHub.

pip install jupyter_http_over_ws

jupyter serverextension enable --py jupyter_http_over_ws
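Before starting the server, a quick preflight check can confirm that Steps 1 and 2 took effect in the Python environment you plan to launch Jupyter from. This is a convenience sketch, not part of the Colaboratory instructions: the function name `preflight` is hypothetical, and it assumes the classic Notebook package (module name `notebook`) is the one providing the `jupyter serverextension` command used above. It only checks that the packages are installed; `jupyter serverextension list` reports whether the extension is actually enabled.

```python
import importlib.util
import shutil
from typing import Dict


def preflight() -> Dict[str, bool]:
    """Report whether the pieces from Steps 1 and 2 are visible to this interpreter.

    Hypothetical helper: run it with the same Python that will start `jupyter notebook`.
    """
    return {
        # Step 1: a `jupyter` launcher on PATH and the Notebook package importable
        "jupyter_on_path": shutil.which("jupyter") is not None,
        "notebook_installed": importlib.util.find_spec("notebook") is not None,
        # Step 2: the package installed by `pip install jupyter_http_over_ws`
        "http_over_ws_installed": importlib.util.find_spec("jupyter_http_over_ws") is not None,
    }


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"{check:24} {'OK' if ok else 'MISSING'}")
```

Any `MISSING` entry points back to the step that needs to be repeated, typically because pip installed into a different environment than the one on your PATH.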

Step 3: Start server and authenticate

Start a notebook server as usual, but set the `--NotebookApp.allow_origin` flag so that the server explicitly trusts WebSocket connections from the Colaboratory frontend.

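jupyter notebook \
  --NotebookApp.allow_origin='https://colab.research.google.com' \
  --port=8888 \
  --NotebookApp.port_retries=0

Setting --NotebookApp.port_retries=0 makes the server stop with an error instead of silently switching to another port when 8888 is already in use.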

Once the server has started, it prints a message containing the initial backend URL used for authentication. Copy this URL; you will need it in the next step.
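If you would rather launch the server from a Python script, or pull the URL out of the startup log automatically, a small helper along these lines could do it. This is an illustrative sketch, not part of the Colaboratory instructions: `build_command` and `find_backend_url` are hypothetical names, and the URL pattern assumes the server prints a localhost address carrying a `?token=` query, as the classic Notebook server does.

```python
import re
from typing import List, Optional

COLAB_ORIGIN = "https://colab.research.google.com"

# Assumed log format: a localhost URL with a token query, e.g.
# "http://localhost:8888/?token=<token>" (the path before "?" may vary).
_BACKEND_URL = re.compile(r"http://(?:localhost|127\.0\.0\.1):\d+/\S*?\?token=\w+")


def build_command(port: int = 8888) -> List[str]:
    """Argument list equivalent to the shell command in Step 3.

    No shell is involved, so the origin URL needs no quoting.
    """
    return [
        "jupyter",
        "notebook",
        f"--NotebookApp.allow_origin={COLAB_ORIGIN}",
        f"--port={port}",
        "--NotebookApp.port_retries=0",
    ]


def find_backend_url(log_text: str) -> Optional[str]:
    """Return the first backend URL found in the server's startup output, if any."""
    match = _BACKEND_URL.search(log_text)
    return match.group(0) if match else None
```

For example, passing the made-up log line `or http://127.0.0.1:8888/?token=0123abcd` to `find_backend_url` returns `http://127.0.0.1:8888/?token=0123abcd`. Calling `subprocess.run(build_command())` starts the server just like the shell command and blocks until the server is stopped.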

Step 4: Connect to the local runtime

In Colaboratory, click the "Connect" button and select "Connect to local runtime...". Enter the URL from the previous step in the dialog that appears and click "Connect". You should now be connected to your local runtime.
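To confirm that the notebook is really executing on your own hardware (the point of using local resources for training), a cell like the one below can be run after connecting. It is a sketch using only the Python standard library; the function name `describe_runtime` is hypothetical, and the GPU listing assumes NVIDIA drivers are installed, since they provide `nvidia-smi`. Deep learning frameworks have their own checks as well, e.g. `torch.cuda.is_available()` in PyTorch.

```python
import os
import platform
import shutil
import subprocess


def describe_runtime() -> dict:
    """Summarize the machine this notebook kernel is running on."""
    info = {
        # On a local runtime these should describe your own machine, not a Colab VM
        "hostname": platform.node(),
        "os": platform.platform(),
        "python": platform.python_version(),
        "cpu_count": os.cpu_count(),
        "gpus": [],
    }
    # If NVIDIA drivers are installed locally, list the GPUs the kernel can see
    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
        )
        info["gpus"] = [line.strip() for line in result.stdout.splitlines() if line.strip()]
    return info


for key, value in describe_runtime().items():
    print(f"{key}: {value}")
```

If the hostname, CPU count, and GPU list match your workstation, the local connection is in use; if they describe a hosted Colab VM, the notebook is still on a Google runtime.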

use local branch

#ai #aiml #learnai #learning #monai #google #googlecolab
