
GPU-accelerating UDFs in PySpark with Numba and PyGDF


AnacondaCon 2018. Keith Kraus & Joshua Patterson.

Commodity hardware now includes 10-gigabit network cards, InfiniBand, and solid-state drives. As a result, the bottleneck in big data technologies is very often the processing power of the CPU. To meet their users' computational demands, enterprises have had to resort to extreme scale-out approaches just to get the processing power they need. Apache Spark is one of the best-known technologies in this space, and numerous enterprises have spoken publicly about the challenges of running multiple 1,000+ node clusters to give their users that processing power. This talk is based on work completed by NVIDIA’s Applied Solutions Engineering team. Attendees will learn how the team was able to GPU-accelerate UDFs in PySpark using open source technologies such as Numba and PyGDF, the le…


Speed up UDFs with GPUs using the RAPIDS Accelerator

Advancing GPU Analytics with RAPIDS Accelerator for Apache Spark and Alluxio

Accelerating Apache Spark by Several Orders of Magnitude with GPUs

GPU Computing With Apache Spark And Python

Accelerating Apache Spark by Several Orders of Magnitude with GPUs and RAPIDS Library continues

GPU-Accelerated Data Analytics in Python |SciPy 2020| Joe Eaton

Accelerate Python Analytics on GPUs with RAPIDS

Hands-On GPU Computing with Python | 11. GPU Acceleration for Scientific Applications Using DeepChem

Accelerated Transactions processing leveraging Virtualized Apache Spark and NVIDIA GPUs

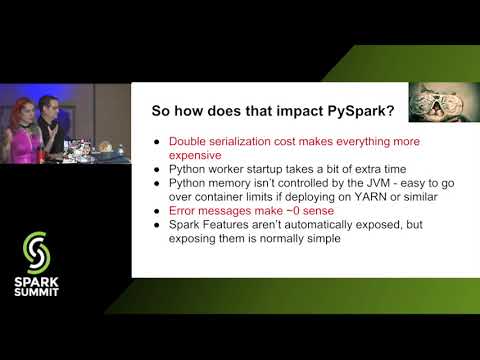

Making PySpark Amazing—From Faster UDFs to Graphing! (Holden Karau and Bryan Cutler)

NVIDIA: Accelerate Spark With RAPIDS For Cost Savings

Nvidia Rapids Dask & CUDA Dataframe Issues

Determining When to Use GPU for Your ETL Pipelines at Scale

Improving Python and Spark Performance and Interoperability with Apache Arrow

AIM MasterClass on 'Performance Boosting ETL Workloads Using RAPIDS On Spark 3.0' by NVIDI...

NVIDIA RAPIDS Accelerator for Apache Spark ML

Deep Dive into GPU Support in Apache Spark 3.x

Accelerating Data Science with RAPIDS - Mike Wendt

Modernize Your Analytics Workloads for Apache Spark 3.0 and Beyond

GPU Support In Spark And GPU:CPU Mixed Resource Scheduling At Production Scale

Scalable XGBoost on GPU Clusters

PandasUDFs: One Weird Trick to Scaled Ensembles

GPU Hackathons Experience
