filmov

tv

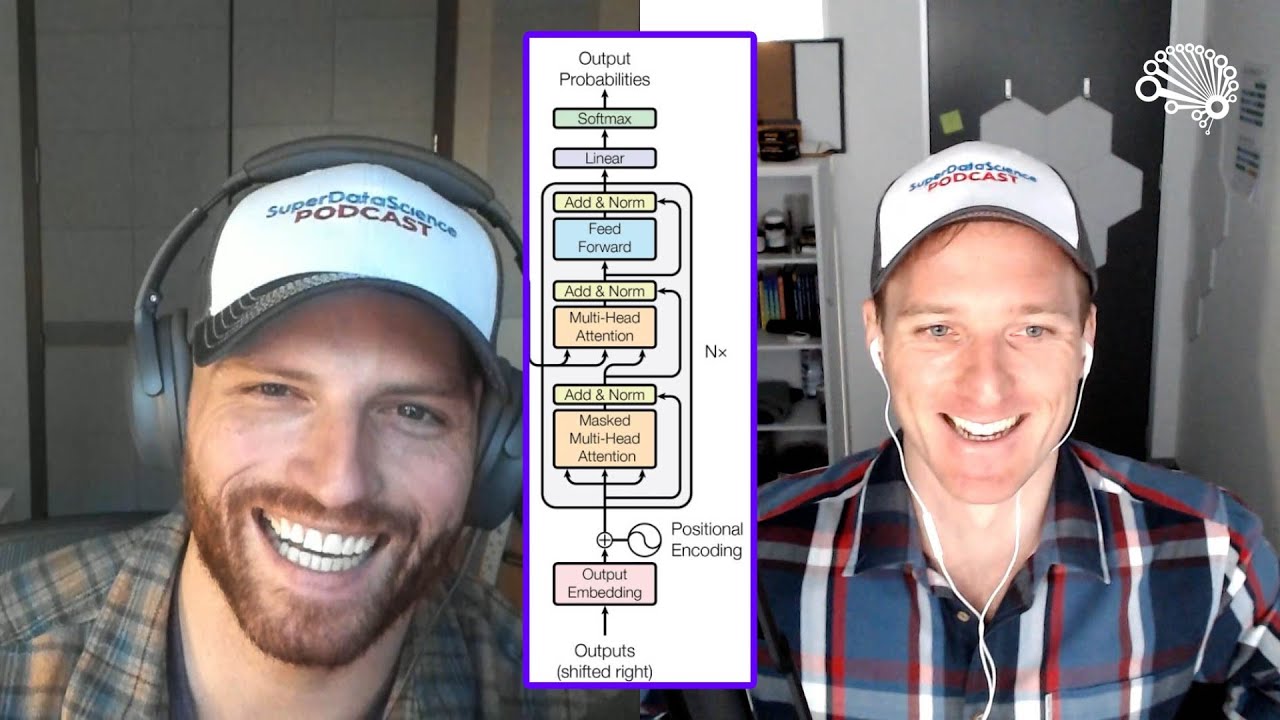

How Decoder-Only Transformers (like GPT) Work

Learn about encoders, cross-attention and masking for LLMs as SuperDataScience founder Kirill Eremenko returns to the SuperDataScience podcast to speak with @JonKrohnLearns about transformer architectures and why they represent a new frontier for generative AI. If you’re interested in applying LLMs to your business portfolio, you’ll want to pay close attention to this episode!

Decoder-Only Transformers, ChatGPT's specific Transformer, Clearly Explained!!!

Which transformer architecture is best? Encoder-only vs Encoder-decoder vs Decoder-only models

Transformer models: Decoders

MeshGPT: Generating Triangle Meshes with Decoder-Only Transformers

Encoder-Only Transformers (like BERT) for RAG, Clearly Explained!!!

LLM Transformers Encoder Only vs Decoder Only vs Encoder Decoder Models

Confused which Transformer Architecture to use? BERT, GPT-3, T5, Chat GPT? Encoder Decoder Explained

BERT and GPT in Language Models like ChatGPT or BLOOM | EASY Tutorial on Large Language Models LLM

Coding a ChatGPT Like Transformer From Scratch in PyTorch

Illustrated Guide to Transformers Neural Network: A step by step explanation

Transformer Explainer- Learn About Transformer With Visualization

Transformer models: Encoder-Decoders

Let's build GPT: from scratch, in code, spelled out.

Tutorial 14: Encoder only and Decoder only Transformers in HINDI | BERT, BART, GPT

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Encoder-Decoder Transformers vs Decoder-Only vs Encoder-Only: Pros and Cons

Decoder architecture in 60 seconds

Transformer models: Encoders

GPT vs T5 #NLP #AI #MachineLearning #T5 #GPT

Why masked Self Attention in the Decoder but not the Encoder in Transformer Neural Network?

Decoder training with transformers

Attention in transformers, step-by-step | Deep Learning Chapter 6

Introduction to LLMs: Encoder Vs Decoder Models
