Holden Karau: A brief introduction to Distributed Computing with PySpark

PyData Seattle 2015

Apache Spark is a fast and general engine for distributed computing and big data processing, with APIs in Scala, Java, Python, and R. This tutorial will briefly introduce PySpark (the Python API for Spark) through hands-on exercises combined with a quick introduction to Spark's core concepts. We will cover the obligatory wordcount example that comes with every big-data tutorial, and discuss Spark's unique methods for handling node failure and other relevant internals. Then we will briefly look at how to access some of Spark's libraries (such as Spark SQL and Spark ML) from Python. Although Spark is available in a variety of languages, this workshop focuses on using Spark and Python together.
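The wordcount example mentioned in the abstract is not reproduced on this page, so the following is only a minimal sketch of its usual shape, not the code from the talk. The names `word_count_local`, `word_count_spark`, `sample`, and `path` are hypothetical. The first function mimics the flatMap, map, and reduceByKey steps in plain Python so it runs without a cluster; the second shows how the same steps are commonly chained on an RDD, assuming an existing `SparkContext` is passed in as `sc`.

```python
from collections import defaultdict
from operator import add


def word_count_local(lines):
    """Pure-Python stand-in for the flatMap -> map -> reduceByKey pipeline."""
    # flatMap: split every line into words and flatten into one sequence
    words = (word for line in lines for word in line.split())
    # map: pair each word with an initial count of 1
    pairs = ((word, 1) for word in words)
    # reduceByKey: combine the counts of identical words with `add`
    counts = defaultdict(int)
    for word, n in pairs:
        counts[word] = add(counts[word], n)
    return dict(counts)


def word_count_spark(sc, path):
    """Same pipeline on an RDD.

    Assumption: `sc` is an already-created SparkContext and `path`
    points at a text file; neither comes from the talk itself.
    """
    return (sc.textFile(path)
              .flatMap(lambda line: line.split())
              .map(lambda word: (word, 1))
              .reduceByKey(add)
              .collect())


# Hypothetical sample input for the local version
sample = ["to be or not to be", "to spark or not to spark"]
result = word_count_local(sample)
print(result)
```

The local version is only there to make each step visible. On a real cluster, `reduceByKey` combines counts within each partition before shuffling data between nodes, which a single-process loop cannot demonstrate.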

Materials available here:

A very brief introduction to extending Spark ML for custom models - Holden Karau

Introduction to Spark Datasets by Holden Karau

Berlin Buzzwords 2017: Holden Karau - A Brief Tour of the Magic Behind Apache Spark #bbuzz

Interview - Holden Karau

Data Science in 30 Minutes - A Quick Introduction to PySpark with Holden Karau

Kubeflow for Machine Learning • Holden Karau & Adi Polak • GOTO 2022

Holden Karau - The Magic Behind PySpark, how it impacts perf & the 'future'

Scaling Python for Machine Learning: Beyond Data Parallelism • Holden Karau • GOTO 2023

Holden Karau and Paco Nathan discuss PySpark and the future of Python

The magic of distributed systems: when it all breaks and why / Holden Karau

Holden Karau - Interview Engineering and Data Tools

Kubeflow for Machine Learning (Teaser) • Holden Karau & Adi Polak • GOTO 2022

scala.bythebay.io: Holden Karau Interview

BeeScala 2016: Holden Karau - Ignite your data with Spark 2.0

Holden Karau - Keynote: Distributed Computing 4 Kids -- with Spark | PyData Seattle 2023

Scaling Machine Learning with Spark (Teaser) • Adi Polak & Holden Karau • GOTO 2023

Holden Karau and Cheburashka interview at JOTB2018

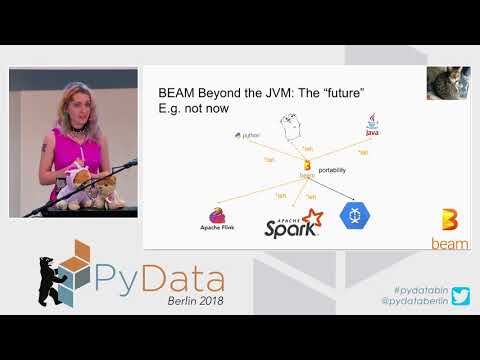

Keynote: Making the Big Data ecosystem work together with Python - Holden Karau

OSCON 2016 - Getting started contributing to Apache Spark by Holden Karau (IBM)

Holden Karau at Beyond the Code

Holden Karau, IBM | BigDataNYC 2016

Simplifying Training Deep & Serving Learning Models...using Tensorflow - Holden Karau

Extending Spark ML for Custom Models - Holden Karau
