Delta Lake in Spark | Schema Evaluation using Delta | Session - 1 | LearntoSpark

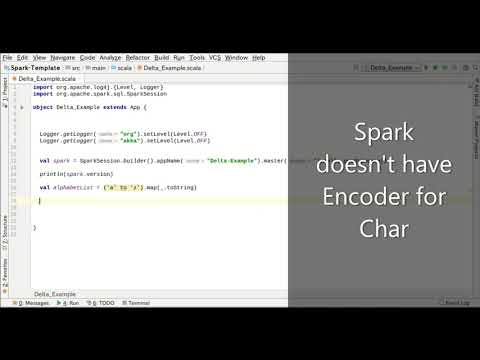

In this video, we will learn how the schema evolution problem is handled in Delta Lake from Spark 3.0 onward. We will demo the existing problem and the Delta Lake solution using PySpark.
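The problem the description refers to can be pictured with a small toy model: a batch arrives with a column the table does not have, and the write is either rejected or the table schema is extended. The sketch below is plain Python, not the Delta Lake API; `append_schema`, `SchemaMismatchError`, and the `merge_schema` flag are hypothetical names chosen for illustration, and the type-conflict rule is a simplification made for this example.

```python
# Toy model only: plain dicts stand in for table and batch schemas.
# This is NOT the Delta Lake API; every name here is hypothetical.


class SchemaMismatchError(Exception):
    """Raised when an incoming batch does not fit the table schema."""


def append_schema(table_schema, batch_schema, merge_schema=False):
    """Return the table schema that results from appending a batch.

    Both arguments map column name -> type name.
    """
    # Simplification for this sketch: a changed type on an existing
    # column is rejected in both modes.
    for name, dtype in batch_schema.items():
        if name in table_schema and table_schema[name] != dtype:
            raise SchemaMismatchError(f"type conflict on column {name!r}")

    new_columns = {
        name: dtype
        for name, dtype in batch_schema.items()
        if name not in table_schema
    }
    if new_columns and not merge_schema:
        # Enforcement: unexpected columns fail the write.
        raise SchemaMismatchError(f"unexpected columns: {sorted(new_columns)}")

    # Evolution: new columns are appended to the existing schema.
    return {**table_schema, **new_columns}


table = {"id": "int", "name": "string"}
batch = {"id": "int", "name": "string", "city": "string"}

try:
    append_schema(table, batch)
except SchemaMismatchError as err:
    print("rejected:", err)

evolved = append_schema(table, batch, merge_schema=True)
print(evolved)
```

In Delta Lake itself, the corresponding opt-in on a PySpark DataFrame write is the `mergeSchema` option (for example `.option("mergeSchema", "true")`); the toy model only mirrors that idea and does not reproduce Delta's actual rules.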

Blog link to learn more on Spark:

Linkedin profile:

FB page:

Making Apache Spark™ Better with Delta Lake

What is this delta lake thing?

Delta Lake for apache Spark | How does it work | How to use delta lake | Delta Lake for Spark ACID

What is Delta Lake? #shorts #databricks #deltalake #spark #dataengineering

DELTA LAKE w/ Delta Spark code for Streaming /Business Analytics/BI

How Apache Spark 3 0 and Delta Lake Enhances Data Lake Reliability

Delta Lake for Apache Spark - Why do we need Delta Lake for Spark?

Tips and Tricks- Delta Lake Table in Apache Spark - Azure Data Engineering Interview Question

Do Excel ao Data Mesh | Dados: Uma Introdução Executiva

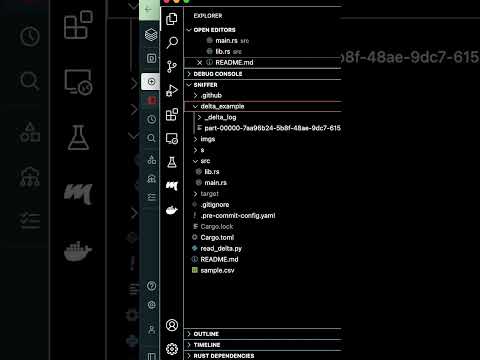

Delta Tutorial #1 | Create Delta lake table using Apache Spark(IDE and Spark shell)

Delta Lake: Reliability and Data Quality for Data Lakes and Apache Spark by Michael Armbrust

Spark ETL with Lakehouse | Delta Lake

Delta Lake in Spark | How Delta Lake Works in Spark | Optimize Delta Table | Session 4| LearntoSpark

Delta Lake | Spark 3 | Apache Spark New Features

What is a Delta Lake? [Introduction to Delta Lake - Ep. 1]

Building the Petcare Data Platform using Delta Lake and 'Kyte': Our Spark ETL Pipeline

Building Data Quality pipelines with Apache Spark and Delta Lake

Delta Lake 0.7.0 + Spark 3.0 AMA

Delta Lake Deep Dive: Streaming Delta Lake with Apache Spark Structured Streaming

Getting started with Delta Lake & Apache Spark SQL [Datasets, write, load, append, add column]

Getting started with Variant Data Type in Delta Lake and Apache Spark

Delta Lake - EXPLAINED - Full Tutorial

Seattle Spark + AI Meetup: How Apache Spark™ 3.0 and Delta Lake Enhance Data Lake Reliability

Advancing Spark - Give your Delta Lake a boost with Z-Ordering

Комментарии

0:58:10

0:58:10

0:06:58

0:06:58

0:23:06

0:23:06

0:00:20

0:00:20

0:17:36

0:17:36

0:58:21

0:58:21

0:18:57

0:18:57

0:14:40

0:14:40

2:45:04

2:45:04

0:11:24

0:11:24

0:31:30

0:31:30

0:14:41

0:14:41

0:12:46

0:12:46

0:21:44

0:21:44

0:10:23

0:10:23

0:26:47

0:26:47

0:26:38

0:26:38

0:54:19

0:54:19

0:59:38

0:59:38

0:20:20

0:20:20

0:05:26

0:05:26

2:54:23

2:54:23

0:56:43

0:56:43

0:20:31

0:20:31