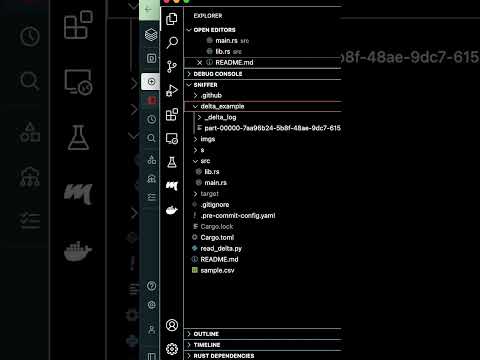

DELTA LAKE w/ Delta Spark code for Streaming / Business Analytics / BI

Delta Lake is an open-source project that enables building a Lakehouse architecture on top of storage systems such as S3, ADLS, GCS, and HDFS.
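As a rough sketch of getting started (assuming the `delta-spark` and `pyspark` pip packages are installed; the app name and the table path are placeholders, not taken from the video), a Delta-enabled Spark session and a first table could look like this:

```python
# Spark settings that switch on Delta Lake's SQL extension and catalog.
DELTA_CONF = {
    "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
    "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
}


def build_delta_session(app_name="delta-demo"):
    """Create a SparkSession with Delta Lake enabled (app_name is a placeholder)."""
    # Imported lazily so this module loads even where Spark is not installed.
    from pyspark.sql import SparkSession
    from delta import configure_spark_with_delta_pip

    builder = SparkSession.builder.appName(app_name)
    for key, value in DELTA_CONF.items():
        builder = builder.config(key, value)
    # Adds the Delta Lake JARs that match the installed delta-spark package.
    return configure_spark_with_delta_pip(builder).getOrCreate()


def write_and_read(spark, path):
    """Write a tiny DataFrame as a Delta table at path, then read it back."""
    spark.range(0, 5).write.format("delta").mode("overwrite").save(path)
    return spark.read.format("delta").load(path)
```

With a local path such as `/tmp/delta-table` this runs on a laptop; pointing `path` at S3, ADLS, GCS, or HDFS additionally needs the matching storage connector and credentials.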

Delta Lake supports statements for deleting and updating data in Delta tables. Delete, Update, Insert, and Merge are presented in this video, plus a code example of streaming to a Delta table.
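The operations named above map onto the Delta Lake Python API roughly as follows. This is a minimal sketch, not the code from the video: the helper names, the `id` and `value` columns, and the sample rows are made up for illustration.

```python
def delete_rows(delta_table, condition):
    """Delete rows matching a SQL predicate string, e.g. "id = 3"."""
    delta_table.delete(condition)


def update_rows(delta_table, condition, assignments):
    """Update matching rows; assignments maps column name -> SQL expression string."""
    delta_table.update(condition=condition, set=assignments)


def upsert_rows(delta_table, updates_df, key):
    """MERGE: update target rows whose key matches a source row, insert the rest."""
    (
        delta_table.alias("t")
        .merge(updates_df.alias("s"), f"t.{key} = s.{key}")
        .whenMatchedUpdateAll()
        .whenNotMatchedInsertAll()
        .execute()
    )


def demo(spark, path):
    """Not called here: needs a Delta-enabled SparkSession and an existing
    Delta table at path with (hypothetical) columns id and value."""
    from delta.tables import DeltaTable  # lazy import, requires delta-spark

    table = DeltaTable.forPath(spark, path)
    delete_rows(table, "id = 3")
    update_rows(table, "id = 1", {"value": "'updated'"})
    updates = spark.createDataFrame([(1, "merged"), (9, "new")], ["id", "value"])
    upsert_rows(table, updates, "id")
```

Each call commits a new table version, so the earlier state stays reachable through the table history.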

Delta Lake acts as a metadata layer on top of your data lake. It adds ACID transactions, a fast SQL engine, and direct data access for your ML, AI, or Business Intelligence / Analytics tasks.

Think of Delta Lake as Data Lake 2.0: the standard Apache Parquet file format plus a transaction log, as presented in this video, with time travel to inspect the table history and recover older versions.
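To make the "Parquet files plus a transaction log" idea concrete, here is a toy model in plain Python. It is a simplification and not Delta Lake's actual log format: each commit only lists the data files it adds or removes, and the file names are invented. Replaying the log up to a version gives the table as of that version, which is the idea behind time travel. The last function shows the real reader option, `versionAsOf`, for an actual Delta table.

```python
def snapshot(log, version):
    """Replay commits 0..version and return the set of live data files."""
    if not 0 <= version < len(log):
        raise ValueError(f"version {version} not in log")
    live = set()
    for commit in log[: version + 1]:
        live -= set(commit.get("remove", []))
        live |= set(commit.get("add", []))
    return live


# Toy log: two appends, then a rewrite that replaces the first file.
log = [
    {"add": ["part-0.parquet"]},
    {"add": ["part-1.parquet"]},
    {"remove": ["part-0.parquet"], "add": ["part-2.parquet"]},
]

latest = snapshot(log, 2)  # files that make up the current table
first = snapshot(log, 0)   # "time travel" back to version 0


def read_version(spark, path, version):
    """Real API: load an older version of a Delta table (needs a Delta-enabled session)."""
    return spark.read.format("delta").option("versionAsOf", version).load(path)
```

Old files are not deleted when a commit removes them from the log, which is why earlier versions remain readable until they are cleaned up.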

Real-time coding. SQL and Python APIs. PySpark and Delta Lake. New SQL Engine.

ACID transactions and time travel for your data management.

Artificial intelligence, machine learning and Business Intelligence with advanced analytics.

Data Engineering. Data Scientist. Data Science. Jupyter. JupyterLab.

00:00 Install Delta Spark

01:54 Delta Table

03:57 Parquet file format

06:24 Operations on a Delta Lake table

08:05 Update and merge Delta Tables

10:10 Delta Table Streaming / reads and writes

11:13 Automatic Schema Evolution

11:25 Code Examples
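The streaming and schema evolution chapters listed above could be sketched like this. It is an illustration under assumptions, not the video's code: Spark's built-in `rate` test source stands in for a real stream, and the paths are placeholders supplied by the caller.

```python
def stream_to_delta(spark, path, checkpoint):
    """Start a streaming query that appends rows to the Delta table at path."""
    # The "rate" source emits (timestamp, value) rows; handy for local experiments.
    source = spark.readStream.format("rate").option("rowsPerSecond", 5).load()
    return (
        source.writeStream.format("delta")
        .outputMode("append")
        .option("checkpointLocation", checkpoint)  # tracks streaming progress
        .start(path)
    )


def append_with_schema_evolution(df, path):
    """Append df to a Delta table, allowing new columns to be added to its schema."""
    df.write.format("delta").mode("append").option("mergeSchema", "true").save(path)
```

`stream_to_delta` returns the running query; call `.stop()` on it when done. Without `mergeSchema`, appending a DataFrame with extra columns is rejected by Delta's schema enforcement.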

#datalake

#deltalake

#lakehouse

#pyspark

#databricks

#datascience

#datascientist

#sql

#dataengineering

#artificialintelligence

#businessanalytics

