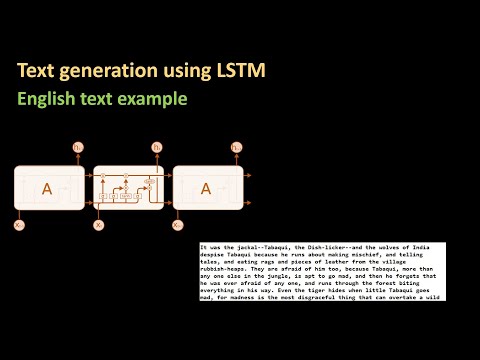

167 - Text prediction using LSTM (English text)

LSTMs are well suited to time-series forecasting and sequence prediction, which makes them a good fit for natural language prediction. This tutorial explains how to train an LSTM network on English text and then use it to predict letters based on what it learned during training.
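To make the training-and-prediction process described above more concrete, here is a minimal, framework-free sketch of the character-level data preparation and letter-by-letter prediction loop commonly used with this kind of model. It assumes the network reads a fixed window of `seq_len` characters and outputs a softmax distribution over the character vocabulary. The window length, step size, and temperature sampling are illustrative choices, not taken from the video, and every function name (`build_vocab`, `make_windows`, `one_hot`, `sample_next_index`, `generate`) is hypothetical. The trained LSTM itself is stood in for by a `predict_probs` callable so the sketch runs without a deep-learning library.

```python
import math
import random
from typing import Callable, Dict, List, Optional, Tuple


def build_vocab(text: str) -> Tuple[Dict[str, int], Dict[int, str]]:
    """Map each distinct character in the corpus to an integer index and back."""
    chars = sorted(set(text))
    char_to_idx = {c: i for i, c in enumerate(chars)}
    idx_to_char = {i: c for c, i in char_to_idx.items()}
    return char_to_idx, idx_to_char


def make_windows(text: str, seq_len: int, step: int) -> Tuple[List[str], List[str]]:
    """Slide a window of seq_len characters over the text (advancing by step);
    the target for each window is the single character that follows it."""
    inputs, targets = [], []
    for start in range(0, len(text) - seq_len, step):
        inputs.append(text[start:start + seq_len])
        targets.append(text[start + seq_len])
    return inputs, targets


def one_hot(seq: str, char_to_idx: Dict[str, int]) -> List[List[int]]:
    """Encode a string as len(seq) one-hot rows, each of length vocab_size."""
    rows = []
    for c in seq:
        row = [0] * len(char_to_idx)
        row[char_to_idx[c]] = 1
        rows.append(row)
    return rows


def sample_next_index(probs: List[float], temperature: float = 1.0,
                      rng: Optional[random.Random] = None) -> int:
    """Draw a character index from a softmax output, reweighted by temperature
    (low temperature is close to argmax, high temperature is more varied)."""
    if rng is None:
        rng = random.Random()
    logits = [math.log(max(p, 1e-12)) / temperature for p in probs]
    top = max(logits)
    # Unnormalised weights are fine: random.choices normalises internally.
    weights = [math.exp(x - top) for x in logits]
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate(seed: str, length: int,
             predict_probs: Callable[[List[List[int]]], List[float]],
             char_to_idx: Dict[str, int], idx_to_char: Dict[int, str],
             seq_len: int, temperature: float = 1.0,
             rng: Optional[random.Random] = None) -> str:
    """Predict one character at a time, feeding the last seq_len characters
    back in. predict_probs is a hypothetical stand-in for a trained LSTM."""
    out = seed
    for _ in range(length):
        window = one_hot(out[-seq_len:], char_to_idx)
        out += idx_to_char[sample_next_index(predict_probs(window), temperature, rng)]
    return out


if __name__ == "__main__":
    corpus = "the quick brown fox jumps over the lazy dog"
    c2i, i2c = build_vocab(corpus)
    X_text, y_text = make_windows(corpus, seq_len=10, step=3)
    print(len(c2i))                    # 27 (26 letters plus space)
    print(len(X_text))                 # 11
    print(repr(X_text[0]), y_text[0])  # 'the quick ' b
```

In a real run, the one-hot windows would typically be stacked into an array of shape `(num_windows, seq_len, vocab_size)` and used to train an LSTM layer followed by a dense softmax layer over the vocabulary; `predict_probs` would then wrap that trained model's prediction call. A temperature below 1 makes the generated letters more conservative, while a value above 1 makes them more varied.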

Code generated in the video can be downloaded from here:
