filmov

tv

How to build stream data pipeline with Apache Kafka and Spark Structured Streaming - PyCon SG 2019

Показать описание

Speaker: Takanori Aoki, Data Scientist, HOOQ

Objective: Main purpose of this session is to help audience be familiar with how to develop stream data processing application by Apache Kafka and Spark Structured Streaming in order to encourage them to start playing with these technologies. Description: In Big Data era, massive amount of data is generated at high speed by various types of devices. Stream processing technology plays an important role so that such data can be consumed by realtime application. In this talk, Takanori will present how to implement stream data pipeline and its application by using Apache Kafka and Spark Structured Streaming with Python. He will be elaborating on how to develop application rather than explaining system architectural design in order to help audience be familiar with stream processing implementation by Python. Takanori will introduce examples of application using Tweet data and pseudo-data of mobile device. In addition, he will also explain how to integrate streaming data into other data store technologies such as Apache Cassandra and Elasticsearch. Note: - Python codes to build these applications will be uploaded on GitHub.

About the speaker:

Produced by Engineers.SG

Objective: Main purpose of this session is to help audience be familiar with how to develop stream data processing application by Apache Kafka and Spark Structured Streaming in order to encourage them to start playing with these technologies. Description: In Big Data era, massive amount of data is generated at high speed by various types of devices. Stream processing technology plays an important role so that such data can be consumed by realtime application. In this talk, Takanori will present how to implement stream data pipeline and its application by using Apache Kafka and Spark Structured Streaming with Python. He will be elaborating on how to develop application rather than explaining system architectural design in order to help audience be familiar with stream processing implementation by Python. Takanori will introduce examples of application using Tweet data and pseudo-data of mobile device. In addition, he will also explain how to integrate streaming data into other data store technologies such as Apache Cassandra and Elasticsearch. Note: - Python codes to build these applications will be uploaded on GitHub.

About the speaker:

Produced by Engineers.SG

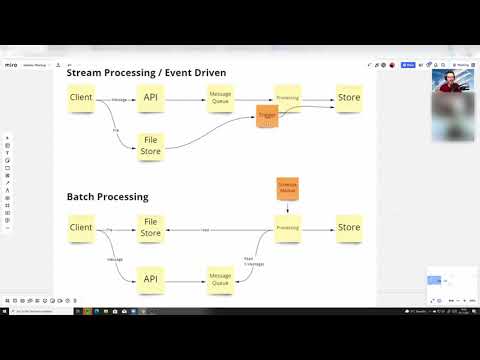

Stream Processing System Design Architecture

How to build stream data pipeline with Apache Kafka and Spark Structured Streaming - PyCon SG 2019

Building stream processing pipelines with Dataflow

Stream vs Batch processing explained with examples

Building stream processing applications with Apache Kafka using ksql

Azure Stream Analytics Tutorial | Processing stream data with SQL

Build a Real-time Stream Processing Pipeline with Apache Flink on AWS - Steffen Hausmann

Stream Data Processing for Fun and Profit - David Ostrovsky

Jeff Denworth, VAST Data | VAST Presents Enter the COSMOS

How to build a stream and batch processing Job On GCP Dataflow || DataFlow Tutorial

Creating Stream processing application using Spark and Kafka in Scala | Spark Streaming Course

What is Stream Processing? | Batch vs Stream Processing | Data Pipelines | Real-Time Data Processing

Moving back and forth between batch and stream processing (Google Cloud Next '17)

Stream Processing Pipeline - Using Pub/Sub, Dataflow & BigQuery

How to build a modern stream processor: The science behind Apache Flink - Stefan Richter

Kinesis Stream Tutorial | Kinesis Data Stream to S3 demo | Firehose | AWS Kafka

Create a Kafka Cluster Using AWS MSK And Stream Data - Full Coding Demo

Process Real-Time Data Streams in Minutes using Azure Stream Analytics' No-Code Editor Experien...

Creating Kafka Streams Application | Kafka Stream Quick Start | Introduction to Kafka Streams API

Create a data stream on AWS w/ Kinesis!

Stream API in Java

Apache Kafka and the Rise of Stream Processing by Guozhang Wang | DataEngConf NYC '16

Stream Designer | The Visual Builder for Kafka Pipelines in Confluent Cloud

Heron: Real-time Stream Data Processing at Twitter

Комментарии

0:11:24

0:11:24

0:47:21

0:47:21

0:15:17

0:15:17

0:09:02

0:09:02

0:31:07

0:31:07

0:24:18

0:24:18

0:44:19

0:44:19

1:01:19

1:01:19

0:31:59

0:31:59

0:16:09

0:16:09

0:17:41

0:17:41

0:06:20

0:06:20

0:51:52

0:51:52

0:05:23

0:05:23

0:37:42

0:37:42

0:09:28

0:09:28

0:23:43

0:23:43

0:06:36

0:06:36

0:13:12

0:13:12

0:05:50

0:05:50

0:26:04

0:26:04

0:35:26

0:35:26

0:06:21

0:06:21

0:50:57

0:50:57