filmov

tv

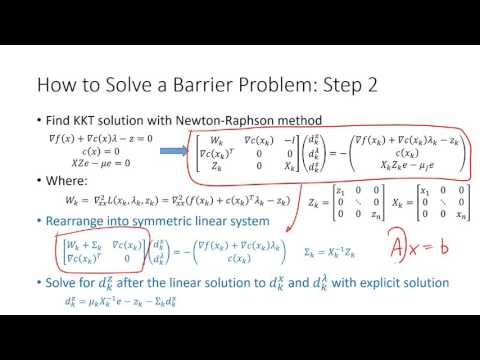

Interior Point Method for Optimization

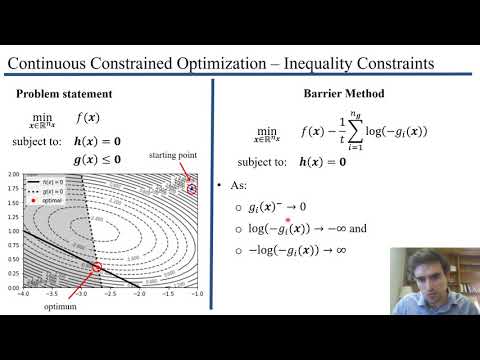

Interior point methods, also called barrier methods, are a class of algorithms for solving linear and nonlinear convex optimization problems. Violation of inequality constraints is prevented by augmenting the objective function with a barrier term that grows without bound as an iterate approaches the constraint boundary, which keeps the minimizer of the augmented (unconstrained) problem strictly inside the feasible region.
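To make the barrier idea concrete, here is a minimal sketch of a logarithmic barrier method on a toy problem that is not taken from the video: minimize (x - 3)^2 subject to x <= 1. The function names `barrier_minimize` and `solve_with_barrier`, the starting point, and the schedule for the barrier weight `mu` are all assumptions chosen for illustration. Each inner solve uses Newton steps that are only shortened to stay feasible, which is enough for this one-dimensional case but is not a general-purpose line search.

```python
import math


def barrier_minimize(mu, x, max_iter=100, tol=1e-8):
    """Minimize (x - 3)^2 - mu * log(1 - x) over x < 1 with damped Newton steps.

    The log term is the barrier: it tends to +infinity as x approaches 1,
    so the minimizer always lies strictly inside the feasible region.
    """
    for _ in range(max_iter):
        grad = 2.0 * (x - 3.0) + mu / (1.0 - x)
        if abs(grad) < tol:
            break
        hess = 2.0 + mu / (1.0 - x) ** 2
        step = -grad / hess
        # Halve the step until the new iterate is strictly feasible (x < 1).
        t = 1.0
        while x + t * step >= 1.0:
            t *= 0.5
        x += t * step
    return x


def solve_with_barrier(x0=0.0, n_outer=7):
    """Follow the barrier minimizers for mu = 1, 0.1, ..., 10^-(n_outer - 1).

    Returns a list of (mu, x) pairs; each solve is warm-started from the
    previous minimizer. x0 must be strictly feasible (x0 < 1).
    """
    if x0 >= 1.0:
        raise ValueError("x0 must satisfy x0 < 1")
    path = []
    x = x0
    for k in range(n_outer):
        mu = 10.0 ** (-k)
        x = barrier_minimize(mu, x)
        path.append((mu, x))
    return path


if __name__ == "__main__":
    for mu, x in solve_with_barrier():
        print(f"mu = {mu:.0e}  x = {x:.8f}")
```

For this toy problem the barrier minimizer has the closed form x(mu) = 2 - sqrt(1 + mu/2), so the iterates approach the constrained optimum x = 1 from the inside as `mu` shrinks, without ever touching the boundary.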

Interior-point methods for constrained optimization (Logarithmic barrier function and central path)

The Karush–Kuhn–Tucker (KKT) Conditions and the Interior Point Method for Convex Optimization

Linear Programming 37: Interior point methods

3.3 Optimization Methods - The Interior Point Method

Interior Point Method Optimization Example in MATLAB

A Faster Interior Point Method for Semidefinite Programming

An Interior Point Solver for Large Block Angular Optimization Problems

Aaron Sidford: Introduction to interior point methods for discrete optimization, lecture I

Optimal Control Example 2 using Interior Point method

A geodesic interior-point method for linear optimization over symmetric cones

What Is Mathematical Optimization?

Lecture 12 Interior point methods

Lecture 23: Interior Point Method

Interior point method

Interior Point Methods 4

Interior Point Method: Theory

A primal-dual interior-point algorithm for nonsymmetric conic optimization

An Interior Point Method Solving Motion Planning Problems with Narrow Passages

Lecture 54 : Interior-Point Method for QPP

Constraints and Barrier Method

Visually Explained: Newton's Method in Optimization

JuMP-dev 2019 | Mathieu Tanneau | Tulip.jl: An interior-point solver with abstract linear algebra

Interior Point Method: Log-Barrier
