
An Introduction to Graph Neural Networks: Models and Applications


MSR Cambridge, AI Residency Advanced Lecture Series

An Introduction to Graph Neural Networks: Models and Applications

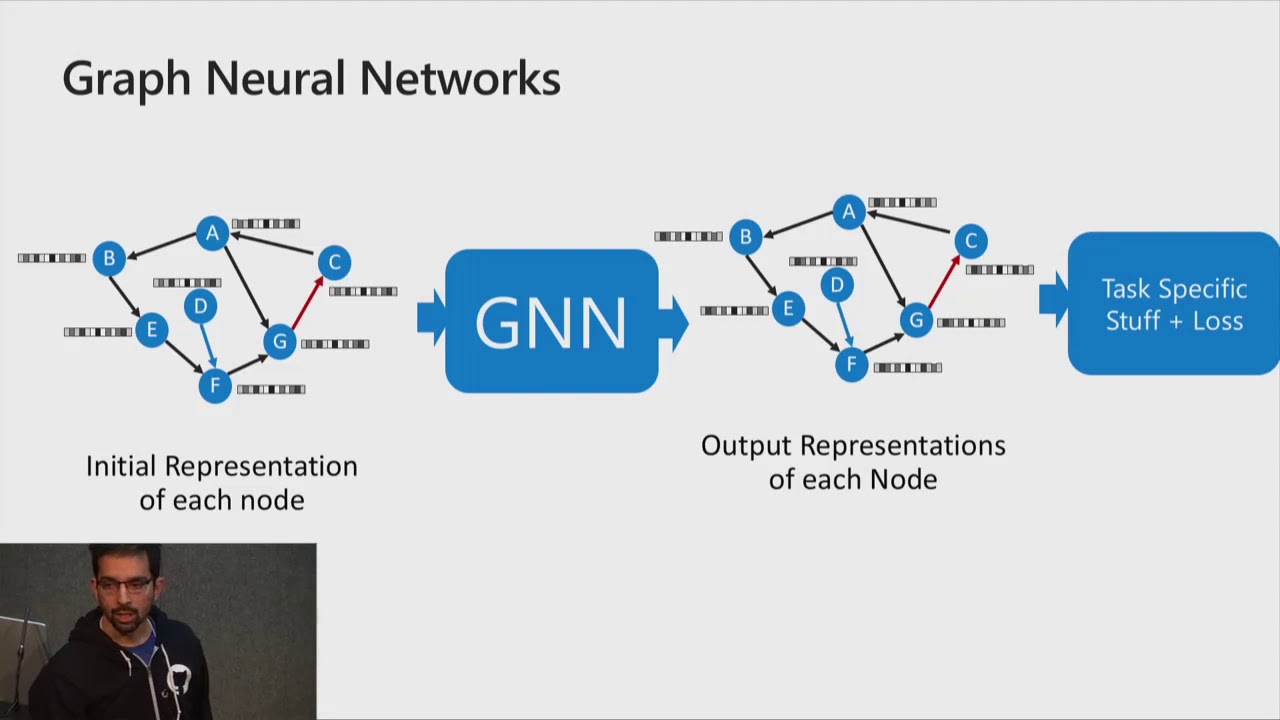

Graph Neural Networks (GNNs) are a general class of neural networks that operate over graphs. A problem is represented as a graph, with the information about individual elements encoded as nodes and their relationships encoded as edges. GNNs then learn to capture patterns within that graph. These networks have been used successfully in applications such as chemistry and program analysis. In this introductory talk, I will take a deep dive into neural message-passing GNNs and show how to create a simple GNN implementation. Finally, I will illustrate how GNNs have been used in applications.
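The talk's own implementation is not reproduced on this page. What follows is a minimal sketch of one neural message-passing step, written under these assumptions: messages are a linear map of the sender's state, messages are aggregated by summation, the update adds a linear map of the node's own state and applies ReLU, and a graph-level vector is read out by summing node states. The names `message_passing_step` and `readout` are hypothetical. The weights are fixed identity matrices for illustration, whereas a real GNN learns them by gradient descent.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(x: Vector) -> Vector:
    """Element-wise ReLU non-linearity."""
    return [max(0.0, v) for v in x]


def message_passing_step(
    node_states: Dict[int, Vector],
    edges: List[Tuple[int, int]],
    w_msg: Matrix,
    w_self: Matrix,
) -> Dict[int, Vector]:
    """One round of message passing (hypothetical helper, assumed design:
    linear messages, sum aggregation, ReLU update)."""
    dim = len(w_msg)
    incoming = {n: [0.0] * dim for n in node_states}
    # Each directed edge (src, dst) sends a message from src to dst.
    for src, dst in edges:
        msg = matvec(w_msg, node_states[src])
        incoming[dst] = [a + m for a, m in zip(incoming[dst], msg)]
    # Update: combine each node's own state with its aggregated messages.
    updated = {}
    for n, h in node_states.items():
        self_part = matvec(w_self, h)
        updated[n] = relu([s + m for s, m in zip(self_part, incoming[n])])
    return updated


def readout(node_states: Dict[int, Vector]) -> Vector:
    """Sum node states into a single graph-level vector (assumed readout)."""
    dim = len(next(iter(node_states.values())))
    total = [0.0] * dim
    for h in node_states.values():
        total = [t + v for t, v in zip(total, h)]
    return total


if __name__ == "__main__":
    # A 3-node path graph 0 - 1 - 2; undirected edges are stored in both directions.
    states = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
    edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    updated = message_passing_step(states, edges, w_msg=identity, w_self=identity)
    print(updated)           # {0: [1.0, 1.0], 1: [2.0, 2.0], 2: [1.0, 2.0]}
    print(readout(updated))  # [4.0, 5.0]
```

After one step, each node's state mixes in information from its direct neighbours. Stacking several steps lets information travel further across the graph. A graph-level readout like the summed vector above is the kind of representation one might feed to a predictor, for example for a molecule-level property in chemistry.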


