The cost of Hash Tables | The Backend Engineering Show

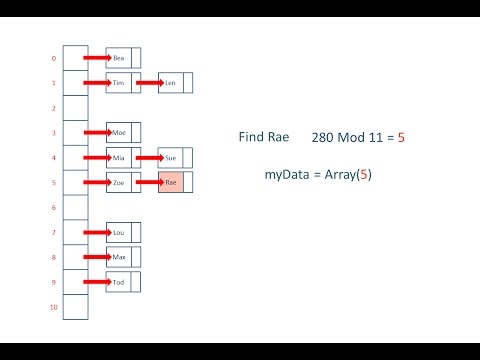

Hash tables are effective for caching, database joins, set-membership checks, load balancing, partitioning, sharding, and many other applications. Most programming languages support them. However, they come with costs and limitations. Let's discuss them.

0:00 Intro

1:50 Arrays

3:50 CPU Cost (NUMA/M1 Ultra)

6:50 Hash Tables

10:00 Hash Join

16:00 Cost of Hash Tables

20:00 Remapping Cost Hash Tables

22:30 Consistent hashing
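
To make the remapping cost from the chapter list concrete, here is a minimal Python sketch. It assumes keys are placed with a plain `hash(key) % num_buckets` scheme, which is a common approach but not necessarily the exact one discussed in the episode. The names `stable_hash`, `bucket_for`, and `moved_fraction` are hypothetical helpers made up for this illustration.

```python
import hashlib


def stable_hash(key: str) -> int:
    """Deterministic 64-bit hash (Python's built-in hash() is salted per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def bucket_for(key: str, num_buckets: int) -> int:
    """Map a key to a bucket (or server, or shard) using modulo placement."""
    return stable_hash(key) % num_buckets


def moved_fraction(keys, old_buckets: int, new_buckets: int) -> float:
    """Fraction of keys whose bucket changes when the bucket count changes."""
    moved = sum(
        1 for k in keys
        if bucket_for(k, old_buckets) != bucket_for(k, new_buckets)
    )
    return moved / len(keys)


# Illustrative keys only; not data from the episode.
keys = [f"user-{i}" for i in range(10_000)]
fraction = moved_fraction(keys, 4, 5)
print(f"{fraction:.0%} of keys changed bucket going from 4 to 5 buckets")
```

With modulo placement, a key keeps its bucket only when its hash gives the same remainder for both bucket counts, so going from 4 to 5 buckets is expected to move about 80% of the keys. Consistent hashing aims to shrink that fraction so that adding a node moves only a small share of the keys.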

Fundamentals of Database Engineering Udemy course (link redirects to Udemy with coupon)

Introduction to NGINX (link redirects to Udemy with coupon)

Python on the Backend (link redirects to Udemy with coupon)

Become a Member on YouTube

🔥 Members Only Content

🏭 Backend Engineering Videos in Order

💾 Database Engineering Videos

🎙️Listen to the Backend Engineering Podcast

Gear and tools used on the channel (affiliate links)

🖼️ Slides and Thumbnail Design

Canva

Stay Awesome,

Hussein
