152 - How to visualize convolutional filter outputs in your deep learning model?

This tutorial walks through the few lines of code needed to visualize the outputs (feature maps) of the convolutional layers in a deep learning model.
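As a rough illustration of the idea, and not the code from the video, the sketch below assumes a Keras functional model and builds a second model that returns the output of every `Conv2D` layer for a given input. The toy network, the layer names `conv_1` and `conv_2`, and the helper names `build_toy_cnn`, `conv_feature_maps`, and `plot_feature_maps` are all hypothetical and exist only for this example.

```python
import numpy as np
from tensorflow import keras


def build_toy_cnn(input_shape=(32, 32, 3)):
    """Hypothetical small CNN, used only so the example has something to inspect."""
    inputs = keras.Input(shape=input_shape)
    x = keras.layers.Conv2D(8, 3, activation="relu", name="conv_1")(inputs)
    x = keras.layers.MaxPooling2D(2)(x)
    x = keras.layers.Conv2D(16, 3, activation="relu", name="conv_2")(x)
    x = keras.layers.GlobalAveragePooling2D()(x)
    outputs = keras.layers.Dense(10, activation="softmax")(x)
    return keras.Model(inputs, outputs)


def conv_feature_maps(model, image):
    """Return {layer_name: feature_map} for every Conv2D layer in `model`.

    `image` is a single sample of shape (H, W, C); a batch axis is added here.
    """
    conv_layers = [l for l in model.layers if isinstance(l, keras.layers.Conv2D)]
    # A second model that shares weights with `model` but exposes the
    # intermediate convolutional outputs instead of the final prediction.
    extractor = keras.Model(inputs=model.inputs,
                            outputs=[l.output for l in conv_layers])
    outputs = extractor.predict(image[np.newaxis, ...], verbose=0)
    if not isinstance(outputs, (list, tuple)):
        outputs = [outputs]  # a single conv layer may come back as a bare array
    return {l.name: o[0] for l, o in zip(conv_layers, outputs)}


def plot_feature_maps(fmap, max_maps=16, cols=4):
    """Show up to `max_maps` channels of one feature map as a grid of images."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for plotting

    n = min(fmap.shape[-1], max_maps)
    rows = (n + cols - 1) // cols
    fig, axes = plt.subplots(rows, cols, figsize=(2 * cols, 2 * rows), squeeze=False)
    for i, ax in enumerate(axes.flat):
        ax.axis("off")
        if i < n:
            ax.imshow(fmap[..., i], cmap="gray")
    return fig


if __name__ == "__main__":
    model = build_toy_cnn()
    image = np.random.rand(32, 32, 3).astype("float32")  # stand-in for a real image
    for name, fmap in conv_feature_maps(model, image).items():
        print(name, fmap.shape)
```

With a trained model and a real image, calling `plot_feature_maps(maps["conv_1"])` followed by `matplotlib.pyplot.show()` would display the first few channels of that layer. The untrained toy network here only produces noise-like maps, so it demonstrates the mechanics rather than meaningful features.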

Code generated in the video can be downloaded from here:

152 - How to visualize convolutional filter outputs in your deep learning model?

CS 152 NN—14: Convolutional Neural Network: Visualizing how they work

Neural Plot - A Technic to Visualizing Neural Networks of TensorFlow/Keras model

CS 152 NN—15: Visualizing CNNs: Displaying Activations

CS 152 NN—15: Visualizing CNNs: Filter Excitation

I want to visualize my convolutional layer activations or weights….

Challenge 152| POWERBI – Peaks Cycles, Data Visualization

ResNet (actually) explained in under 10 minutes

What is ResNet? (with 3D Visualizations)

CS 152 NN—15: Visualizing CNNs: Heat Maps

4 Ways To Visualize Neural Networks in Python

The Power of Visualization | Joe Rogan and Sean Brady #jre

CS 152 NN—9: Neural Networks—Visualizing the network

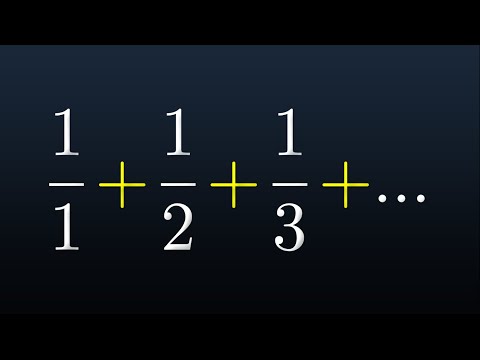

Math 152: Section 2.3 A Visualization

Every Student Should See This

Visualizing Neural Networks

How to Generate any types of Modulated Waveforms & Visualize with Spectrum Analyzer_Using Matlab...

Squeeze Theorem Visualization + Example | RU CALC 152

How REAL Men Integrate Functions

3D Visualization- Bathroom-Cream Theme 152

Lecture 14: Visualizing and Understanding

#Python | Visualize what ConvNets see! 🐶 | #Convolutional #Tensorflow #DataVisualization

visualize heatmaps through seaborn in PyTorch

How To Visualize Attention Heads

Комментарии

0:11:04

0:11:04

0:06:37

0:06:37

0:01:05

0:01:05

0:03:55

0:03:55

0:07:23

0:07:23

0:00:57

0:00:57

0:09:45

0:09:45

0:09:47

0:09:47

0:09:22

0:09:22

0:10:08

0:10:08

0:25:13

0:25:13

0:00:16

0:00:16

0:09:00

0:09:00

0:00:48

0:00:48

0:00:58

0:00:58

0:09:08

0:09:08

0:00:41

0:00:41

0:03:28

0:03:28

0:00:35

0:00:35

0:00:45

0:00:45

1:12:04

1:12:04

0:06:57

0:06:57

0:01:00

0:01:00

1:24:08

1:24:08