filmov

tv

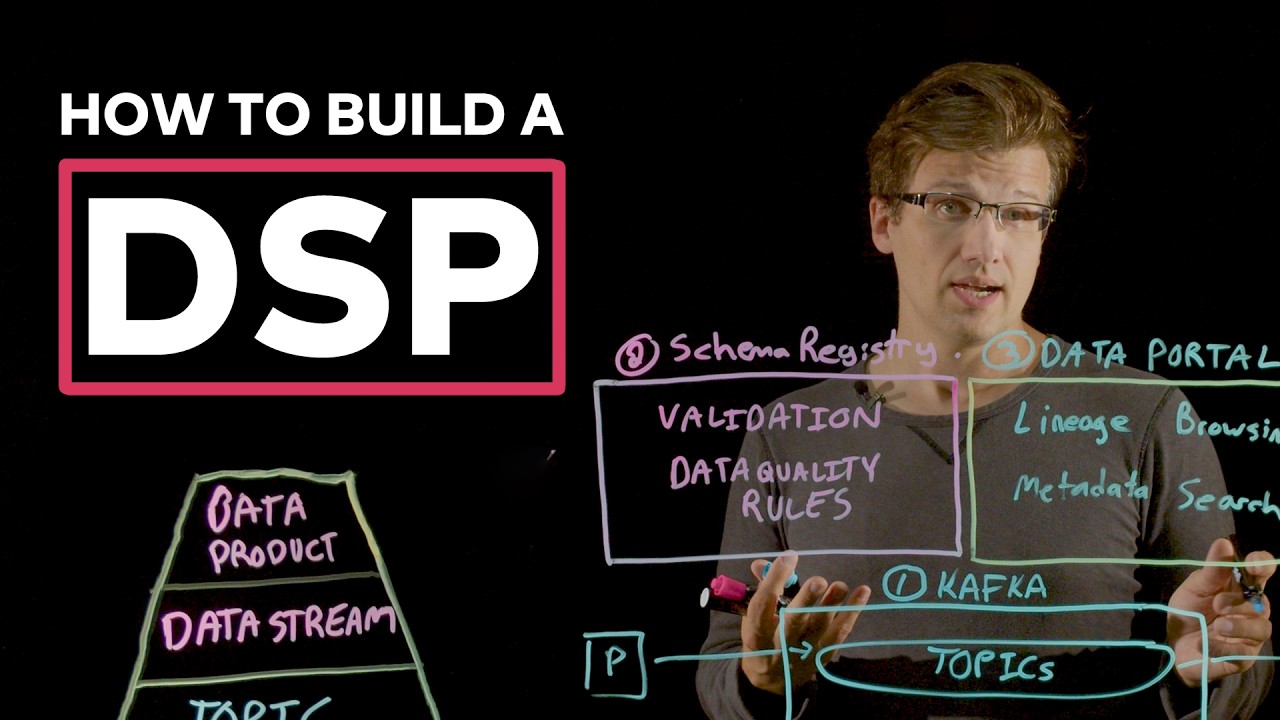

What is a Data Streaming Platform?

A Data Streaming Platform (DSP) is composed of six major interrelated components, covered in detail in this video. Each component has evolved over time to serve specific needs in the data streaming platform, but together they form a whole that is greater than the sum of its parts.

A DSP provides a complete solution for unified end-to-end data streaming. It provides all the necessary capabilities to succeed in data streaming, including data connectivity, integration, discovery, security, and management. Ultimately, a DSP makes it easy for you to build, use, and share data across your organization for any use case.

RELATED RESOURCES

CHAPTERS

00:00 - Introduction, a DSP’s Six Parts

00:30 - Pyramid Model of Data Streaming Evolution

00:46 - Apache Kafka (Part 1)

01:55 - Schema Registry (Part 2)

03:53 - Data Portal (Part 3)

05:44 - Connectors (Part 4)

07:30 - Stream Processor Flink (Part 5)

08:40 - Tableflow (Part 6)

10:33 - Conclusion

–

ABOUT CONFLUENT

#datastreaming #dsp #apachekafka #kafka #confluent
