Parallel table ingestion with a Spark Notebook (PySpark + Threading)

If we want a single Apache Spark notebook to process a list of tables, the code is easy to write. However, a simple loop over the list runs one table after another (sequentially). If none of the tables is very large, it is quicker to have Spark load them concurrently (in parallel) using multithreading. There are several ways to do this, but I am sharing the easiest one I have found when working with a PySpark notebook in Databricks, Azure Synapse Spark, Jupyter, or Zeppelin.
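
The video's own code is not reproduced on this page, so the following is only a minimal sketch of the idea above. It assumes Python's standard `concurrent.futures.ThreadPoolExecutor` as the threading mechanism. `load_table`, the table names, and `source_db`/`target_db` are hypothetical placeholders, and the real Spark call appears only in a comment so the block runs without a cluster.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def load_table(table_name, simulated_seconds=0.1):
    """Hypothetical stand-in for the per-table Spark work.

    In a PySpark notebook the body might be something like:
        (spark.table(f"source_db.{table_name}")
              .write.mode("overwrite")
              .saveAsTable(f"target_db.{table_name}"))
    Here it only sleeps, to simulate the driver waiting on a Spark job.
    """
    time.sleep(simulated_seconds)
    return table_name


def load_sequentially(tables, simulated_seconds=0.1):
    # Plain loop: each table starts only after the previous one finishes,
    # so total time is roughly the sum of the individual load times.
    return [load_table(t, simulated_seconds) for t in tables]


def load_in_parallel(tables, max_workers=4, simulated_seconds=0.1):
    # Each worker thread submits its own job from the driver, so several
    # table loads can be in flight on the cluster at the same time.
    # Threads (rather than processes) suffice because the Python side mostly
    # waits on Spark; it does little CPU work itself.
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(load_table, t, simulated_seconds): t for t in tables}
        for future in as_completed(futures):
            table = futures[future]
            try:
                results[table] = future.result()
            except Exception as exc:  # record the failure and keep loading the rest
                errors[table] = exc
    return results, errors


if __name__ == "__main__":
    tables = ["customers", "orders", "products", "stores"]

    start = time.perf_counter()
    load_sequentially(tables)
    print(f"sequential: {time.perf_counter() - start:.2f}s")

    start = time.perf_counter()
    loaded, failed = load_in_parallel(tables, max_workers=4)
    print(f"parallel:   {time.perf_counter() - start:.2f}s, failed: {list(failed)}")
```

With four simulated tables and four workers, the parallel run should take roughly one table's worth of time instead of four. On a real cluster the gain depends on how much of the cluster a single table's load already uses, which is why this approach suits many small tables. Spark's default job scheduler within an application is FIFO; setting `spark.scheduler.mode` to `FAIR` may be worth considering if one large table would otherwise hold back the others.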

Written tutorial and links to code:

More from Dustin:

CHAPTERS:

0:00 Intro and Use Case

1:05 Code example single thread

4:36 Code example multithreaded

7:15 Demo run - Databricks

8:46 Demo run - Azure Synapse

11:48 Outro

Parallel table ingestion with a Spark Notebook (PySpark + Threading)

Azure Data Factory | Copy multiple tables in Bulk with Lookup & ForEach

Master Databricks and Apache Spark Step by Step: Lesson 31 - PySpark: Parallel Database Queries

AWS Tutorials - AWS Glue Pipeline to Ingest Multiple SQL Tables

SQL indexing best practices | How to make your database FASTER!

18. Copy multiple tables in bulk by using Azure Data Factory

How We Optimize Spark SQL Jobs With parallel and sync IO

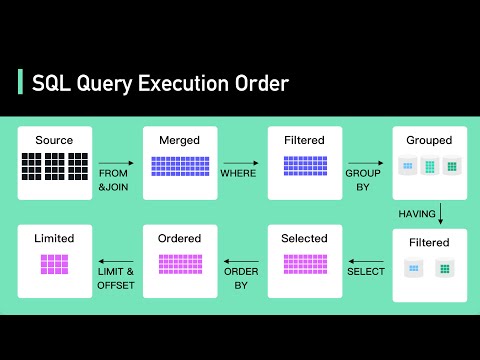

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

10 ETL Design Patterns (Data Architecture | Data Warehouse)

Data Ingestion Performance Optimizations | Azure SQL and ADF Event | Data Exposed Special

Pyspark Scenarios 14 : How to implement Multiprocessing in Azure Databricks - #pyspark #databricks

Learn Apache Spark in 10 Minutes | Step by Step Guide

PySpark | Tutorial-8 | Reading data from Rest API | Realtime Use Case | Bigdata Interview Questions

Azure Data Factory - Partition a large table and create files in ADLS using copy activity

What's an FPGA?

FlightAware and Ray: Scaling Distributed XGBoost and Parallel Data Ingestion

ADF metadata driven copy activity , how to process multiple tables dynamically in ADF copy activity

How REST APIs support upload of huge data and long running processes | Asynchronous REST API

Parallelization of Structured Streaming Jobs Using Delta Lake

Machine Learning in Azure Databricks

Why is my Power BI refresh so SLOW?!? 3 Bottlenecks for refresh performance

Efficient Data Ingestion with Glue Concurrency and Hudi Data Lake

Parallel Processing in Azure Data Factory

Fast, Cheap and Easy Data Ingestion with AWS Lambda and Delta Lake