Linear Classification Basics - Episode 14

Thanks for watching! Turn on notifications (🔔) to receive every new video 😍

Laying the Foundation: Linear Classification Basics

Welcome to the realm of Linear Classification, a fundamental concept in machine learning that forms the bedrock for understanding how machines can make decisions based on data. In this overview, we'll delve into the essential principles of linear classification and its significance in various applications.

1. Binary Decision at its Core:

At the heart of linear classification lies the binary decision-making process. It involves classifying data points into two distinct classes based on features extracted from the data.

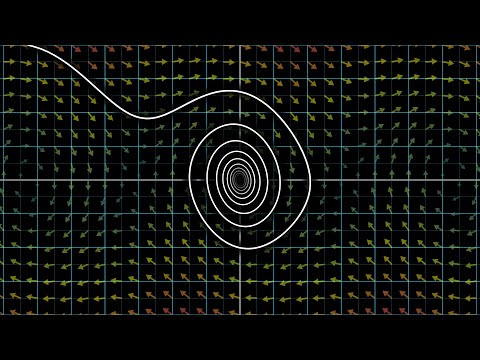

2. Linear Decision Boundaries:

Linear classification uses linear decision boundaries to separate data points belonging to different classes. Each boundary is defined by a linear equation in the features: a line in two dimensions, a plane in three, and a hyperplane in general.

3. Feature Space:

In a linear classification scenario, data points are represented in a feature space, where each dimension corresponds to a feature. The position of a data point within this space determines its classification.

4. Linear Classifier Equation:

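The decision boundary of a linear classifier typically takes the form w · x + b = 0, where w is the weight vector, x is the feature vector of a data point, and b is the bias term. A point is assigned to one class when w · x + b is positive and to the other class when it is negative.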
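As a minimal sketch of this decision rule, the snippet below computes the score w · x + b and returns its sign. The function name `predict`, the label convention (+1 / -1), and the example weights are assumptions chosen for illustration, not something specified in the video.

```python
def predict(w, x, b):
    """Return +1 or -1 depending on which side of w . x + b = 0 the point x lies."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    # Points exactly on the boundary (score == 0) are assigned to +1 by convention here.
    return 1 if score >= 0 else -1

# Hypothetical weights: the boundary is the line x1 + x2 - 1 = 0.
w, b = [1.0, 1.0], -1.0
print(predict(w, [2.0, 2.0], b))  # score = 3.0, so the point is labeled +1
print(predict(w, [0.0, 0.0], b))  # score = -1.0, so the point is labeled -1
```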

5. Perceptron Algorithm:

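The Perceptron algorithm is one of the earliest linear classification algorithms. It adjusts the weights and bias iteratively, updating them whenever a training point is misclassified, until it finds a decision boundary that separates the training data. It is guaranteed to converge only when the classes are linearly separable.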
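The update rule can be sketched in a few lines of plain Python. This is an illustrative version, assuming labels in {-1, +1}; the names `train_perceptron`, `epochs`, and `lr`, and the toy AND dataset, are assumptions rather than details from the video.

```python
def train_perceptron(samples, labels, epochs=100, lr=1.0):
    """Classic perceptron: nudge w and b toward every misclassified point."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # wrong side of the boundary, or exactly on it
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: training data separated
            break
    return w, b

# Toy data: logical AND, which is linearly separable.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
y = [-1, -1, -1, 1]
w, b = train_perceptron(X, y)
print(w, b)
```

On data that is not linearly separable, the loop simply stops after `epochs` passes without finding a perfect boundary.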

6. Logistic Regression:

Contrary to its name, logistic regression is a classification algorithm. It applies the logistic (sigmoid) function to the linear score w · x + b, mapping it to a probability between 0 and 1, which makes it suitable for binary classification tasks.
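The mapping from a linear score to a probability might look like the sketch below. The helper names `sigmoid` and `predict_proba` and the example weights are assumptions for illustration; the two-branch form of `sigmoid` is one common way to avoid overflow for large negative scores.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(w, x, b):
    """Estimated probability that x belongs to the positive class."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

# Hypothetical weights; a score of 0 lies on the boundary and maps to 0.5.
print(predict_proba([1.0, 1.0], [0.5, 0.5], -1.0))  # 0.5
```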

7. Softmax Regression for Multiclass Classification:

Softmax regression is an extension of logistic regression for multiclass classification. It computes one linear score per class, converts the scores into probabilities that sum to 1, and predicts the class with the highest probability.
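A small sketch of the softmax step, assuming one weight vector and one bias per class. The names `softmax` and `predict_class` and the three-class example weights are hypothetical; subtracting the maximum score before exponentiating is a standard trick for numerical stability and does not change the result.

```python
import math

def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_class(W, x, b):
    """W holds one weight vector per class, b one bias per class."""
    scores = [sum(wi * xi for wi, xi in zip(wk, x)) + bk for wk, bk in zip(W, b)]
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda k: probs[k])
    return best, probs

# Hypothetical 3-class model over 2 features.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.0]
label, probs = predict_class(W, [2.0, 0.5], b)
print(label, probs)  # class 0 has the largest score (2.0), so label is 0
```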

8. Loss Functions:

To train a linear classifier, you choose a loss function that measures how badly the current weights fit the training data. Common choices include the hinge loss for linear SVMs and the cross-entropy (log) loss for logistic regression.
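For a single training point, the two losses could be written as follows. This is a sketch under stated assumptions: `hinge_loss` expects labels in {-1, +1}, `log_loss` expects labels in {0, 1}, and both take the raw linear score w · x + b. The function names are illustrative only.

```python
import math

def hinge_loss(score, y):
    """Hinge loss for a label y in {-1, +1}: zero once the margin y * score reaches 1."""
    return max(0.0, 1.0 - y * score)

def log_loss(score, y):
    """Cross-entropy loss for a label y in {0, 1}, computed from the raw score.

    Equals log(1 + exp(-s)) where s is the score signed toward the true class,
    rearranged so that exp() never overflows.
    """
    signed = score if y == 1 else -score
    return max(-signed, 0.0) + math.log1p(math.exp(-abs(signed)))

print(hinge_loss(2.0, 1))   # 0.0: correct with margin to spare
print(hinge_loss(0.5, -1))  # 1.5: wrong side of the boundary
print(log_loss(0.0, 1))     # log(2): the model is undecided
```

Both losses penalize confident mistakes heavily; the hinge loss ignores points that are already classified with enough margin, while the log loss keeps shrinking but never reaches exactly zero.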

9. Gradient Descent:

Gradient descent is a key optimization technique in linear classification. At each step it moves the weights and bias a small distance in the direction that most reduces the chosen loss function, gradually improving the decision boundary.
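To make the idea concrete, here is a sketch of batch gradient descent for logistic regression with the cross-entropy loss. The function name `fit_logistic`, the learning rate, the step count, and the one-feature toy dataset are all assumptions chosen to keep the example small.

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def fit_logistic(samples, labels, lr=0.5, steps=200):
    """Minimize mean cross-entropy loss with plain batch gradient descent."""
    n, d = len(samples), len(samples[0])
    w, b = [0.0] * d, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(samples, labels):
            # For this loss, the gradient w.r.t. the score is (prediction - label).
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(d):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        # Step against the gradient.
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Toy data: negative x values belong to class 0, positive ones to class 1.
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
w, b = fit_logistic(X, y)
print(w, b)
```

In practice the learning rate needs tuning: too large and the loss can oscillate or diverge, too small and training is slow.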

10. Feature Importance and Interpretability:

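Linear classifiers provide interpretable insights into feature importance. The sign of a weight shows which class the feature pushes toward, and its magnitude indicates how much the feature contributes to the decision. Magnitudes are only comparable across features when the features are on similar scales, for example after standardization.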
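One simple way to account for differing feature scales is to multiply each weight's magnitude by the standard deviation of its feature. The sketch below assumes this heuristic; the function name `scaled_importance`, the weights, and the data columns are made up for illustration.

```python
import statistics

def scaled_importance(weights, columns):
    """Return |w_j| * std_j for each feature j (columns[j] holds that feature's values)."""
    return [abs(w) * statistics.pstdev(col) for w, col in zip(weights, columns)]

# Hypothetical model: feature 0 is measured in large units, feature 1 is a 0/1 flag.
weights = [0.002, 1.5]
columns = [
    [1000.0, 3000.0, 5000.0, 7000.0],
    [0.0, 1.0, 0.0, 1.0],
]
importance = scaled_importance(weights, columns)
print(importance)
```

Here feature 0 has by far the smaller raw weight, yet after scaling it moves the score more than feature 1 does across typical values.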

11. Limitations of Linearity:

While linear classifiers are powerful and widely applicable, they cannot capture patterns that require non-linear decision boundaries. The classic example is XOR, where no single straight line separates the two classes.
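The XOR problem is a compact way to see this limitation. The sketch below searches a small grid of weights and finds that no linear rule classifies all four XOR points, then shows that adding a hand-crafted product feature x1 * x2 makes the problem linearly separable. The grid, the helper names, and the chosen weights are assumptions for illustration.

```python
def predict(w, x, b):
    """Sign of the linear score, with ties assigned to +1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

def count_correct(w, b, samples, labels):
    return sum(predict(w, x, b) == y for x, y in zip(samples, labels))

# XOR: the label is +1 when exactly one input is 1.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
y = [-1, 1, 1, -1]

# Try every (w1, w2, b) on a coarse grid from -2 to 2 in steps of 0.5.
grid = [i * 0.5 for i in range(-4, 5)]
best = max(count_correct([w1, w2], b, X, y)
           for w1 in grid for w2 in grid for b in grid)
print(best)  # 3: at least one of the four points is always misclassified

# Add the product x1 * x2 as a third feature; a linear rule now suffices.
X_aug = [[x1, x2, x1 * x2] for x1, x2 in X]
print(count_correct([1.0, 1.0, -2.0], -0.5, X_aug, y))  # 4
```

The second model is still linear in its inputs; the non-linearity comes entirely from the extra feature.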

12. Applications:

Linear classification finds application in various domains, including spam email detection, sentiment analysis, medical diagnosis, and image classification.

Embracing the Linear Realm:

The basics of linear classification lay the foundation for understanding how machines can make decisions based on patterns in data. As you embark on your journey into machine learning, remember that while linear classifiers may seem simplistic, they are the building blocks for more advanced techniques that tackle complex classification challenges.
