filmov

tv

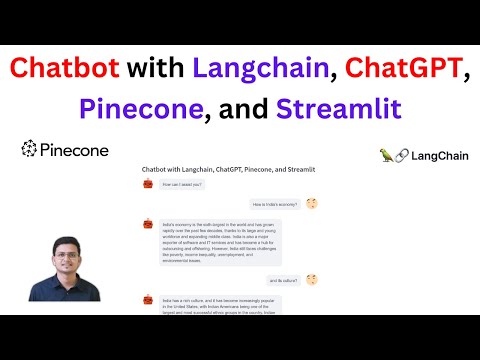

Langchain + ChatGPT + Pinecone: A Question Answering Streamlit App

In this video tutorial, I walk you through creating a Streamlit application that lets you search and query PDF documents. I guide you step by step through using Pinecone and a large language model (LLM), OpenAI's ChatGPT, to build it.

By using Pinecone as a vector database and search engine, we enable fast similarity search over PDF documents. Additionally, we employ an LLM to add question answering on top of the search results, making your app more versatile.
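To make the search-then-answer idea concrete, here is a minimal sketch in plain Python. It does not call Pinecone or OpenAI: `embed`, `cosine`, `top_k`, and `build_prompt` are hypothetical helpers, and the bag-of-words "embedding" is only a toy stand-in for a real embedding model and vector database. It shows the general pattern of ranking text chunks by similarity to a question and then placing the best chunks into a prompt for an LLM.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector (assumption: stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, chunks, k=2):
    """Return the k chunks most similar to the query (what a vector DB does at scale)."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question, context_chunks):
    """Assemble a question-answering prompt from the retrieved chunks."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    chunks = [
        "pinecone stores vectors for similarity search",
        "streamlit renders the web page",
        "bananas are yellow",
    ]
    question = "how does pinecone search vectors"
    print(build_prompt(question, top_k(question, chunks, k=1)))
```

In a real app, the prompt string would be sent to the LLM, and the ranking step would be handled by the vector database rather than by sorting a Python list.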

For data preprocessing, chains, and other essential tasks, we use the Langchain framework. Langchain simplifies the development process, so you can focus on building the PDF query search app itself.
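One common preprocessing step in this kind of app is splitting the extracted PDF text into overlapping chunks before embedding them. The sketch below illustrates that idea in plain Python; it is not the Langchain API, and `split_text`, `chunk_size`, and `overlap` are hypothetical names chosen for this example.

```python
def split_text(text, chunk_size=200, overlap=50):
    """Split text into fixed-size character chunks that overlap by `overlap` characters.

    Assumption: character-based splitting, for illustration only.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    for chunk in split_text("abcdefghij", chunk_size=4, overlap=2):
        print(chunk)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which tends to help retrieval.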

Whether you are a beginner or an experienced developer, this tutorial is a complete guide to building your own Streamlit app with Pinecone, an LLM, and Langchain. Join me as we dive into natural language processing and build the application together!

Don't forget to like, share, and subscribe to stay updated on the latest advancements in AI/ML.

#ai #python #coding

Your Queries:

pinecone ai tutorial

pinecone ai memory

embeddings from language models

langchain

langchain tutorial

langchain agent

langchain chatbot

langchain tutorial python

chatgpt

chatgpt explained

chat gpt

chatgpt how to use

chatgpt tutorial

question answering in artificial intelligence

question answering nlp

question answering app

streamlit tutorial

streamlit python

streamlit web app
