filmov

tv

Linear Regression Machine Learning (tutorial)

Показать описание

I'll perform linear regression from scratch in Python using a method called 'Gradient Descent' to determine the relationship between student test scores & amount of hours studied. This will be about 50 lines of code and I'll deep dive into the math behind this.

Code for this video:

Please subscribe! And like. And comment. That's what keeps me going. And yes, this video is apart of my 'Intro to Deep Learning series'

More learning resources:

Join us in the Wizards Slack Channel:

Please support me on Patreon:

Follow me:

Signup for my newsletter for exciting updates in the field of AI:

Code for this video:

Please subscribe! And like. And comment. That's what keeps me going. And yes, this video is apart of my 'Intro to Deep Learning series'

More learning resources:

Join us in the Wizards Slack Channel:

Please support me on Patreon:

Follow me:

Signup for my newsletter for exciting updates in the field of AI:

Linear Regression Analysis | Linear Regression in Python | Machine Learning Algorithms | Simplilearn

Linear Regression in 2 minutes

Linear Regression From Scratch in Python (Mathematical)

Machine Learning in Python: Building a Linear Regression Model

Machine Learning Tutorial Python - 2: Linear Regression Single Variable

Linear Regression, Clearly Explained!!!

Linear Regression Machine Learning (tutorial)

Python Machine Learning Tutorial #2 - Linear Regression p.1

48. One Way Multivariate Analysis of Variance (MANOVA) in IBM SPSS || Dr. Dhaval Maheta

Lec-4: Linear Regression📈 with Real life examples & Calculations | Easiest Explanation

Simple Linear Regression in Python - sklearn

Video 1: Introduction to Simple Linear Regression

Linear Regression in Machine Learning Explained in 5 Minutes

Linear Regression Algorithm | Linear Regression in Python | Machine Learning Algorithm | Edureka

Linear Regression Python Sklearn [FROM SCRATCH]

Machine Learning Tutorial Python - 3: Linear Regression Multiple Variables

Machine Learning Foundations Course – Regression Analysis

Machine Learning for Everybody – Full Course

Linear Regression in Python - Full Project for Beginners

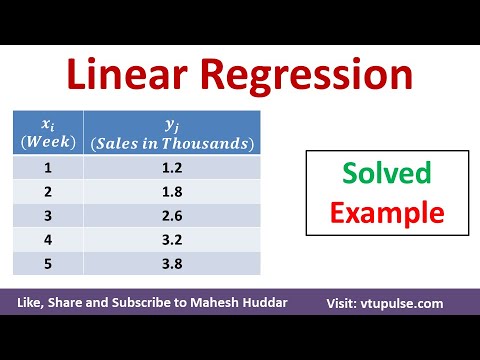

Linear Regression Algorithm – Solved Numerical Example in Machine Learning by Mahesh Huddar

Build Your First Machine Learning Project [Full Beginner Walkthrough]

Linear Regression with Python | Sklearn Machine Learning Tutorial

Linear Regression Single Variable | Machine Learning Tutorial

Tutorial 26- Linear Regression Indepth Maths Intuition- Data Science

Комментарии

0:35:46

0:35:46

0:02:34

0:02:34

0:24:38

0:24:38

0:17:46

0:17:46

0:15:14

0:15:14

0:27:27

0:27:27

0:48:41

0:48:41

0:14:48

0:14:48

0:24:28

0:24:28

0:11:01

0:11:01

0:12:48

0:12:48

0:13:29

0:13:29

0:04:29

0:04:29

0:28:36

0:28:36

0:06:58

0:06:58

0:14:08

0:14:08

9:33:29

9:33:29

3:53:53

3:53:53

0:50:52

0:50:52

0:05:30

0:05:30

0:37:05

0:37:05

2:29:45

2:29:45

0:18:30

0:18:30

0:24:15

0:24:15