
Blender 2.7 Tutorial #70: Speeding Up Cycles & GPU Rendering with CUDA #b3d

Thanks for watching! Please don't forget to subscribe to this channel for more Blender & technology tutorials like this one! :)

In this Blender 2.7 Tutorial #70 I cover:

- How to speed up rendering on your computer, whether your render device is your CPU (processor) or your NVIDIA video card.

- Render settings, including the number of samples, light bounces, tile size, and tile order.

- How to get the most out of your NVIDIA GeForce GTX video card and its proprietary CUDA technology in Blender to greatly speed up your renders.
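To make the tile size and tile order settings a little more concrete, here is a minimal plain-Python sketch (it does not use Blender's API). It splits a frame into tiles and shows how the tile count changes with tile size. It also orders tiles center-first as a rough stand-in for a "center" tile order. The function names `tile_grid` and `center_first` are hypothetical, made up for illustration, and this is not how Blender itself schedules tiles. A commonly repeated rule of thumb for Blender 2.7x is that GPUs tend to prefer fewer, larger tiles, while CPUs do better with many small ones. Treat that as a starting point to benchmark on your own hardware, not a guarantee.

```python
import math


def tile_grid(width, height, tile_x, tile_y):
    """Split a width x height frame into tiles of at most tile_x x tile_y pixels.

    Returns a list of (x, y, w, h) rectangles; tiles on the right and
    bottom edges may be smaller than the requested size.
    """
    tiles = []
    for y in range(0, height, tile_y):
        for x in range(0, width, tile_x):
            tiles.append((x, y, min(tile_x, width - x), min(tile_y, height - y)))
    return tiles


def center_first(tiles, width, height):
    """Sort tiles by the distance of each tile's center from the frame center.

    This is only an approximation of a "center" tile order, not Blender's code.
    """
    cx, cy = width / 2, height / 2

    def distance(tile):
        x, y, w, h = tile
        return math.hypot(x + w / 2 - cx, y + h / 2 - cy)

    return sorted(tiles, key=distance)


if __name__ == "__main__":
    # Compare how many tiles a 1920x1080 frame needs at a few tile sizes.
    for size in (16, 64, 256):
        count = len(tile_grid(1920, 1080, size, size))
        print(f"{size}x{size} tiles -> {count} tiles")
```

At 1920x1080, 16x16 tiles give 8,160 tiles to schedule, while 256x256 tiles give only 40. That difference is why tile size matters so much more on some render devices than on others.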

****************

Thanks for watching, and don't forget to Like & Subscribe to help the channel! =)

**********************************

Visit my Blender 2.7 Tutorial Series playlist for more Blender Tutorials:

Also check out my Blender Game Engine Basics Series playlist:

My Blender Video Effects Playlist:

In this Blender 2.7 Tutorial #70 I cover:

- How to Speed up rendering on your computer, whether you use your CPU (processor) as your render device, or your NVIDIA Video Card.

- Settings including the number of samples, light bounces, tile size and tile order.

-How to best utilize your nvidia geforce gtx video card with its proprietary CUDA technology in Blender to greatly speed up your renders.

****************

*****************

*****************

Thanks for watching, and don't forget to Like & Subscribe to help the channel! =)

**********************************

Visit my Blender 2.7 Tutorial Series playlist for more Blender Tutorials:

Also check out my Blender Game Engine Basics Series playlist:

My Blender Video Effects Playlist:

Blender 2.7 Tutorial #70: Speeding Up Cycles & GPU Rendering with CUDA #b3d

This can happen in Thailand

This is not sped up.

Blender GPU Render tutorial 2016 (NVidia)

NASIB JADI OJOL

Speed up Blender Renders by using the command line

Describe your perfect vacation. #philippines #angelescity #expat #travel #filipina #phillipines

How To Speed Up Rendering In Blender - 2016 - Best Ways Ever (Voice Tutorial)

India's first hoverboard 😨

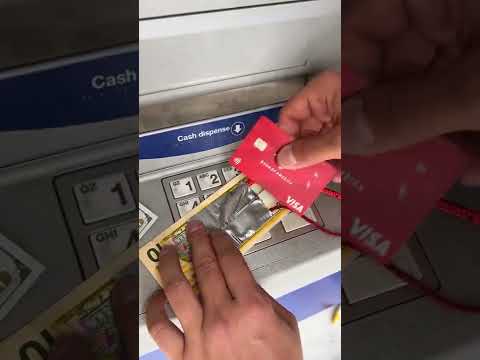

He made a trick in the atm #shorts

The Single Best Way in Blender to Speed Up Rendering with Cycles!

Blender 2.8 cpu+gpu rendering speed test [CYCLES]

Best reaction so far😂 #supercar #exhaust #carsound #fun #engine #prank #public #reaction #revking

⚠️ TRIGGER WARNING TRYPOPHOBIA ⚠️

Blender 2.7 Tutorial #69: Particles: Falling Snow #b3d

The Surgery To Reveal More Teeth 😨

Evening in Monte Carlo #monaco #lifestyle #luxury #money #style #rich #millionaire #life #shorts

Dança speakerman Skibidi Toilet 61

His reaction when he sees her FEET for the first time…😳 #Shorts

Creating Upgraded Titan TV Man #shorts #skibiditoilet

Blender + RTX - How to speed up Blender rendering with GeForce RTX

Will Tesla window break my hand?

Speed up your Blender Cycles Render in One Minute! ZOMG!

Your country, your cameraman, speakerman and tv man

Комментарии

0:20:16

0:20:16

0:00:28

0:00:28

0:00:20

0:00:20

0:00:46

0:00:46

0:00:24

0:00:24

0:07:25

0:07:25

0:00:16

0:00:16

0:03:26

0:03:26

0:00:26

0:00:26

0:01:00

0:01:00

0:13:59

0:13:59

0:02:27

0:02:27

0:00:28

0:00:28

0:00:22

0:00:22

0:35:29

0:35:29

0:00:20

0:00:20

0:00:08

0:00:08

0:00:12

0:00:12

0:01:00

0:01:00

0:00:37

0:00:37

0:05:46

0:05:46

0:00:39

0:00:39

0:02:07

0:02:07

0:00:22

0:00:22