
DistilBERT Revisited: smaller, lighter, cheaper and faster BERT (Paper explained)


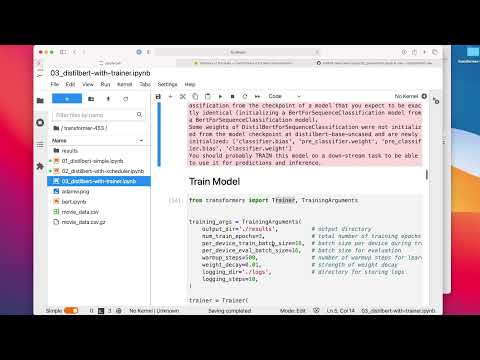

In this video I will be explaining DistilBERT. The DistilBERT model was proposed in the blog post "Smaller, faster, cheaper, lighter: Introducing DistilBERT, a distilled version of BERT" and in the paper "DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter". DistilBERT is a small, fast, cheap and light Transformer model trained by distilling BERT base. It has 40% fewer parameters than bert-base-uncased and runs 60% faster, while preserving over 95% of BERT's performance as measured on the GLUE language understanding benchmark.
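The "40% fewer parameters" figure can be checked with back-of-the-envelope arithmetic. The sketch below counts the weights of a BERT-style encoder, assuming the standard bert-base-uncased configuration (30,522-token vocabulary, hidden size 768, 12 layers, feed-forward size 3,072, 512 positions, 2 token types, with a pooler) and a DistilBERT student that, as described in the paper, keeps the same widths but halves the number of layers and drops the token-type embeddings and the pooler. The helper `bert_style_param_count` is hypothetical, written only for this illustration, and it ignores task-specific heads such as the MLM head.

```python
def bert_style_param_count(vocab_size, hidden, num_layers, ffn_dim,
                           max_positions, type_vocab_size, with_pooler):
    """Count parameters of a BERT-style encoder: embeddings, layers, optional pooler."""
    # Word, position and token-type embedding tables, plus the embedding LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab_size) * hidden
    embeddings += 2 * hidden  # LayerNorm gain and bias

    # One Transformer layer.
    attention = 4 * (hidden * hidden + hidden)  # Q, K, V and output projections with biases
    ffn = (hidden * ffn_dim + ffn_dim) + (ffn_dim * hidden + hidden)  # two dense layers
    layer_norms = 2 * (2 * hidden)  # LayerNorm after attention and after the FFN
    per_layer = attention + ffn + layer_norms

    pooler = (hidden * hidden + hidden) if with_pooler else 0
    return embeddings + num_layers * per_layer + pooler


# Assumed configurations (standard published values, not stated in the video).
bert_base = bert_style_param_count(30522, 768, 12, 3072, 512, 2, with_pooler=True)
distilbert = bert_style_param_count(30522, 768, 6, 3072, 512, 0, with_pooler=False)

reduction = 1 - distilbert / bert_base
print(bert_base, distilbert, f"{reduction:.1%}")  # prints: 109482240 66362880 39.4%
```

Under these assumptions the student has roughly 39% fewer weights, consistent with the rounded 40% quoted above. The 30,522 × 768 token-embedding matrix (about 23.4M weights) is kept intact, which is why halving the number of layers removes less than half of the parameters.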

If you like this kind of content, please subscribe to the channel here:

If you would like to support me financially, it is totally optional and voluntary.

Relevant links:

Knowledge Distillation:

BERT:

DistilBERT:

GLUE benchmarks:
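Knowledge distillation, listed among the links above, is the technique DistilBERT is built on: a small student model is trained to reproduce the output distribution of a larger teacher. As described in the DistilBERT paper, the student's training objective is a linear combination of three terms: a distillation loss against the teacher's temperature-softened predictions, the usual masked-language-modeling (MLM) loss against the true token, and a cosine loss that aligns the directions of student and teacher hidden states. The sketch below computes these for a single masked position in plain Python. All function names are hypothetical, the `alpha_*` weights default to 1.0 as placeholders rather than the paper's values, and the T² scaling of the distillation term is a convention from Hinton et al.'s knowledge-distillation work that is assumed here.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax with temperature; a higher T gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy -sum(t_i * log s_i) between the teacher's soft targets t
    and the student's soft predictions s, both at the same temperature.
    Multiplied by T**2 (assumed convention) to keep gradient scale comparable."""
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    ce = -sum(ti * math.log(si) for ti, si in zip(t, s))
    return ce * temperature ** 2


def mlm_loss(student_logits, target_index):
    """Ordinary cross-entropy of the student against the true masked token."""
    probs = softmax(student_logits)
    return -math.log(probs[target_index])


def cosine_loss(student_hidden, teacher_hidden):
    """1 - cosine similarity between student and teacher hidden-state vectors."""
    dot = sum(a * b for a, b in zip(student_hidden, teacher_hidden))
    norm_s = math.sqrt(sum(a * a for a in student_hidden))
    norm_t = math.sqrt(sum(b * b for b in teacher_hidden))
    return 1.0 - dot / (norm_s * norm_t)


def triple_loss(student_logits, teacher_logits, target_index,
                student_hidden, teacher_hidden,
                alpha_ce=1.0, alpha_mlm=1.0, alpha_cos=1.0, temperature=2.0):
    """Linear combination of the three terms; the weights are placeholders."""
    return (alpha_ce * distillation_loss(student_logits, teacher_logits, temperature)
            + alpha_mlm * mlm_loss(student_logits, target_index)
            + alpha_cos * cosine_loss(student_hidden, teacher_hidden))


# Example: one masked position over a toy 4-token vocabulary (made-up numbers).
teacher = [2.0, 0.5, -1.0, 0.0]
student = [1.5, 0.7, -0.5, 0.1]
loss = triple_loss(student, teacher, target_index=0,
                   student_hidden=[0.2, -0.1, 0.4],
                   teacher_hidden=[0.25, -0.05, 0.35])
print(round(loss, 4))
```

Plain Python keeps the sketch dependency-free; a real training loop would compute the same quantities as tensor operations over a batch of masked positions and backpropagate only through the student.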

