Understanding Gated Recurrent Units (GRUs) in Deep Learning with Python

💥💥 GET FULL SOURCE CODE AT THIS LINK 👇👇

Gated Recurrent Units (GRUs) are a type of recurrent neural network (RNN) designed to mitigate the vanishing gradient problem, which makes it hard for simple RNNs to learn dependencies across long sequences. In this post, we'll explore the theory behind GRUs and learn how to implement them using Python and the popular Keras library.

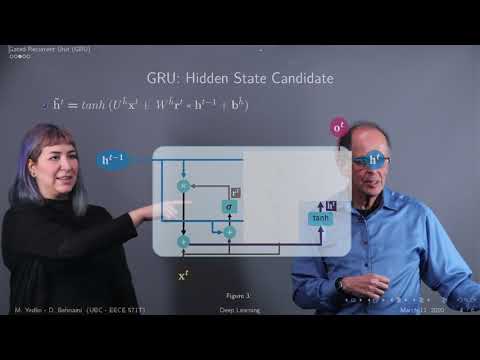

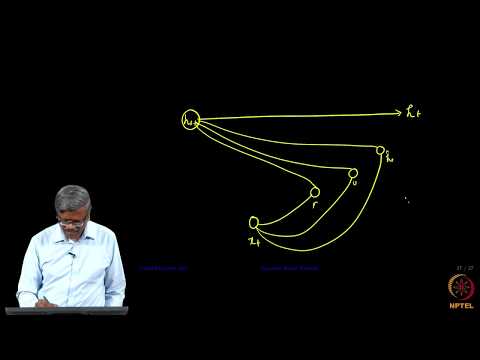

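First, let's discuss how GRUs handle the hidden state. A GRU cell has two gates, a reset gate and an update gate, and computes a candidate state. The reset gate controls how much of the previous hidden state is used when computing the candidate state, which lets the cell discard history that is irrelevant to the current input. The update gate decides how much of the previous hidden state to carry forward and how much to replace with the candidate. The candidate state is the proposed new hidden state, computed from the current input and the reset-scaled previous state. The final hidden state is a weighted sum of the previous hidden state and the candidate state, with the update gate supplying the weights.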
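To make these gate interactions concrete, here is a minimal NumPy sketch of one GRU step and a forward pass over a sequence. It is an illustration under stated assumptions, not code from the original post: the helper names (`gru_cell`, `init_params`, `gru_forward`) and the small random initialization are hypothetical, and the candidate uses the "reset before" formulation (the reset gate scales the previous state before the recurrent matrix multiplication). Conventions differ between sources: some write the final interpolation as (1 − z) ⊙ h_prev + z ⊙ h̃, and Keras's `GRU` layer defaults to `reset_after=True`, which applies the reset gate after the recurrent multiplication instead.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_cell(x_t, h_prev, params):
    """One GRU step (hypothetical helper). x_t: (input_dim,), h_prev: (hidden_dim,)."""
    W_z, U_z, b_z = params["z"]
    W_r, U_r, b_r = params["r"]
    W_h, U_h, b_h = params["h"]
    # Update gate: z = sigmoid(W_z x + U_z h_prev + b_z)
    z = sigmoid(W_z @ x_t + U_z @ h_prev + b_z)
    # Reset gate: r = sigmoid(W_r x + U_r h_prev + b_r)
    r = sigmoid(W_r @ x_t + U_r @ h_prev + b_r)
    # Candidate state uses the reset-scaled previous state ("reset before" variant)
    h_cand = np.tanh(W_h @ x_t + U_h @ (r * h_prev) + b_h)
    # Final state: update-gate-weighted sum of the previous state and the candidate
    return z * h_prev + (1.0 - z) * h_cand

def init_params(input_dim, hidden_dim, seed=0):
    """Assumed initialization: small Gaussian weights, zero biases."""
    rng = np.random.default_rng(seed)
    def gate():
        return (rng.normal(scale=0.1, size=(hidden_dim, input_dim)),
                rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)),
                np.zeros(hidden_dim))
    return {"z": gate(), "r": gate(), "h": gate()}

def gru_forward(xs, params, hidden_dim):
    """Run the cell over a (timesteps, input_dim) sequence; return the last hidden state."""
    h = np.zeros(hidden_dim)
    for x_t in xs:
        h = gru_cell(x_t, h, params)
    return h

params = init_params(input_dim=3, hidden_dim=4)
xs = np.random.default_rng(1).normal(size=(5, 3))  # 5 time steps, 3 features
h_final = gru_forward(xs, params, hidden_dim=4)
print(h_final.shape)  # (4,)
```

Because the new state is a gated blend of the old state and the candidate, an update gate near 1 copies the previous state almost unchanged. That near-identity path is what helps gradients survive over many time steps.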

Now, let's dive into building a GRU model using Python and Keras. We'll start by importing the necessary libraries and defining the GRU model architecture. Next, we'll prep our dataset for training and implement the training loop. You'll learn how to interpret the loss, accuracy, and other key metrics during training. After the training process is complete, we'll discuss potential applications of GRUs and suggest further study materials to deepen your understanding.
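The workflow above (imports, model definition, data preparation, training, and reading the metrics) can be sketched end to end with Keras. This is a minimal example under stated assumptions: the dataset is a hypothetical synthetic task (label a random sequence 1 if its values sum to a positive number), and the layer size, optimizer, and epoch count are arbitrary illustrative choices, not the settings from the full source code. Here `model.fit` runs the training loop and records per-epoch metrics in `history.history`; a hand-written loop with `tf.GradientTape` is an alternative when you need custom behavior.

```python
import numpy as np
from tensorflow import keras

keras.utils.set_random_seed(0)  # reproducible weights and shuffling

# Hypothetical toy dataset: 1000 sequences of 20 steps with 1 feature each.
rng = np.random.default_rng(0)
n_samples, timesteps, n_features = 1000, 20, 1
x = rng.normal(size=(n_samples, timesteps, n_features)).astype("float32")
# Label is 1 when the sequence sums to a positive value; shape (n_samples, 1).
y = (x.sum(axis=(1, 2)) > 0).astype("float32").reshape(-1, 1)

model = keras.Sequential([
    keras.Input(shape=(timesteps, n_features)),
    keras.layers.GRU(32),                         # returns only the last hidden state: (batch, 32)
    keras.layers.Dense(1, activation="sigmoid"),  # probability of the positive class
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# fit() is the training loop; 20% of the data is held out for validation.
history = model.fit(x, y, epochs=5, batch_size=32, validation_split=0.2, verbose=0)

h = history.history
for epoch, (loss, acc, val_loss, val_acc) in enumerate(
        zip(h["loss"], h["accuracy"], h["val_loss"], h["val_accuracy"]), start=1):
    print(f"epoch {epoch}: loss={loss:.3f} acc={acc:.3f} "
          f"val_loss={val_loss:.3f} val_acc={val_acc:.3f}")
```

When reading the printed metrics, a training loss that keeps falling while the validation loss rises is a sign of overfitting. Because `GRU(32)` returns only the last hidden state, pass `return_sequences=True` to it if you want to stack another recurrent layer on top.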

Additional Resources:

#STEM #Python #DeepLearning #MachineLearning #GRU #RNN #NeuralNetworks #NeurIPS #Keras #TensorFlow #AI #DataScience #Neuroscience #MachineIntelligence #Technology #WomenWhoCode #PyData #CodeNewbie #DataSource

Find this and all other slideshows for free on our website:

