filmov

tv

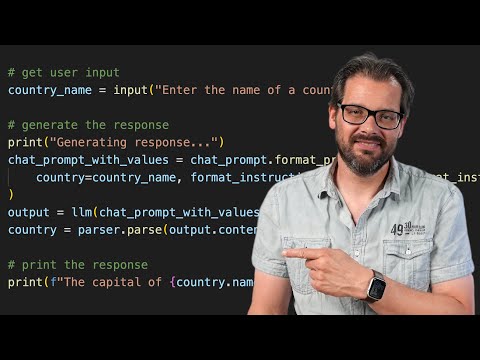

LLaMA2 with LangChain - Basics | LangChain TUTORIAL

For more tutorials on using LLMs and building Agents, check out my Patreon:

My Links:

Github:

Timestamps:

00:00 Intro

04:47 Translation English to French

05:40 Summarization of an Article

07:08 Simple Chatbot

10:38 Chatbot using LLaMA-13B Model

LLaMA2 with LangChain - Basics | LangChain TUTORIAL (0:12:14)

Getting to Know Llama 2: Everything You Need to Start Building (0:33:33)

Llama2 Chat with Multiple Documents Using LangChain (0:15:22)

End To End LLM Project Using LLAMA 2- Open Source LLM Model From Meta (0:36:02)

'I want Llama3 to perform 10x with my private knowledge' - Local Agentic RAG w/ llama3 (0:24:02)

Getting Started with LangChain and Llama 2 in 15 Minutes | Beginner's Guide to LangChain (0:15:47)

Llama 2 in LangChain — FIRST Open Source Conversational Agent! (0:26:51)

How to build a Llama 2 chatbot (0:16:28)

Build a Large Language Model AI Chatbot using Retrieval Augmented Generation (0:02:53)

RAG + Langchain Python Project: Easy AI/Chat For Your Docs (0:16:42)

What is LangChain? (0:08:08)

LangChain Basics Tutorial #1 - LLMs & PromptTemplates with Colab (0:19:39)

LLM Project | End to End Gen AI Project Using LangChain, Google Palm In Ed-Tech Industry (0:44:00)

What is LangChain? 101 Beginner's Guide Explained with Animations (0:11:01)

Python RAG Tutorial (with Local LLMs): AI For Your PDFs (0:21:33)

How to use the Llama 2 LLM in Python (0:04:51)

Ollama meets LangChain (0:06:30)

'okay, but I want Llama 3 for my specific use case' - Here's how (0:24:20)

Gen AI Project Using Llama3.1 | End to End Gen AI Project (0:40:51)

Create a LOCAL Python AI Chatbot In Minutes Using Ollama (0:13:17)

Meta just release Llama 2, a true ChatGPT Rival! (0:00:35)

Reliable, fully local RAG agents with LLaMA3.2-3b (0:31:04)

LangChain is AMAZING | Quick Python Tutorial (0:17:42)

Create a ChatBot in Python Using Llama2 and LangChain - Ask Questions About Your Own Data (0:12:02)