Chris Manning - Meaning and Intelligence in Language Models (COLM 2024)

Meaning and Intelligence in Language Models: From Philosophy to Agents in a World

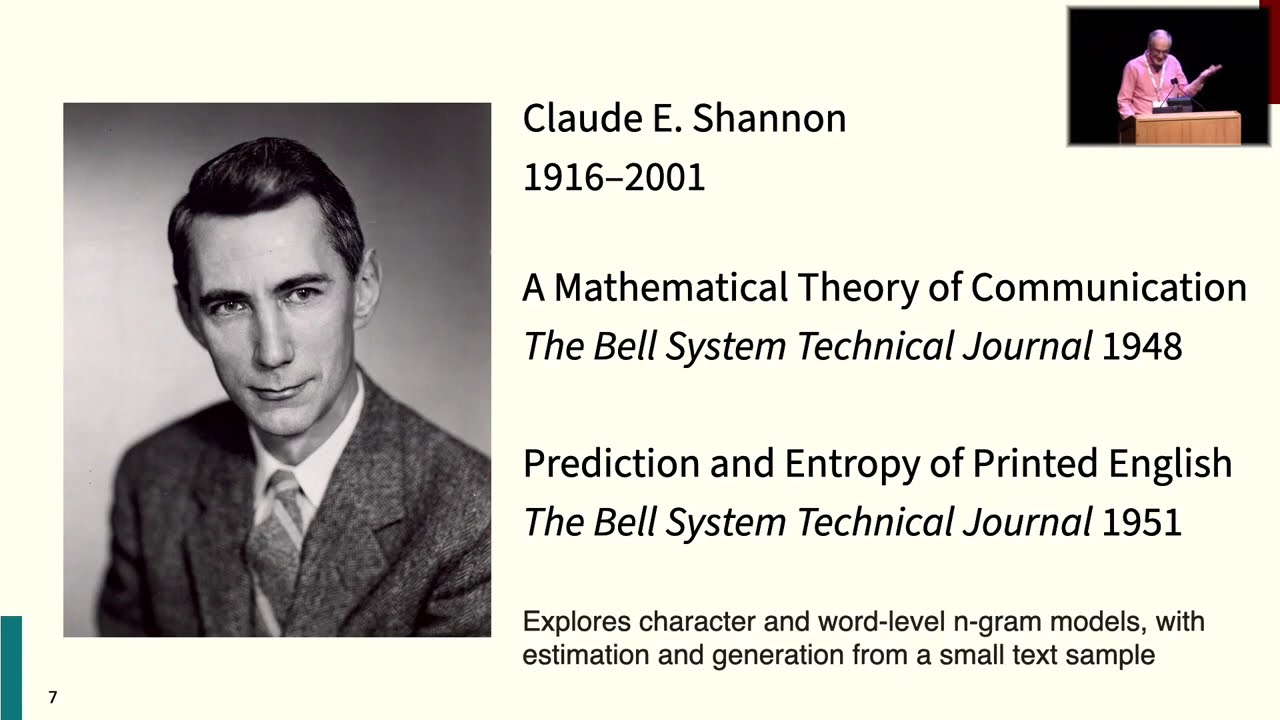

Language Models have been around for decades but have suddenly taken the world by storm. In a surprising third act for anyone doing NLP in the 70s, 80s, 90s, or 2000s, in much of the popular media, artificial intelligence is now synonymous with language models. In this talk, I want to take a look backward at where language models came from and why they were so slow to emerge, a look inward to give my thoughts on meaning, intelligence, and what language models understand and know, and a look forward at what we need to build intelligent language-using agents in a world. I will argue that material beyond language is not necessary to having meaning and understanding, but it is very useful in most cases, and that adaptability and learning are vital to intelligence, and so the current strategy of building from huge curated data will not truly get us there, even though LLMs have so many good uses. For a web agent, I look at how it can learn through interactions and make good use of the hierarchical structure of language to make exploration tractable. I will show recent work with Shikhar Murty about how an interaction-first learning approach for web agents can work very effectively, giving gains of 20 percent on MiniWoB++ over either a zero-shot language model agent or an instruction-first fine-tuned agent.
