Streaming data from BigQuery to Datastore using Dataflow

🚀 Today, I'm eager to discuss a method for using a Dataflow streaming pipeline to move data from BigQuery to Cloud Datastore. At first glance, this approach might seem unconventional. Why? Because BigQuery isn't typically thought of as a streaming source. However, I believe this strategy has a lot of potential.

🔍 Here's the context: a significant portion of our data now resides in BigQuery, structured through tools like dbt or other data transformation frameworks. This shift means much of the information we need no longer lives only in event-based message queues. And here's an interesting observation: in most real-world scenarios, near real-time data transfer, with latency anywhere from 30 seconds to an hour, is more than sufficient.

💡 This leads me to propose a versatile, reusable solution for moving data from BigQuery to Cloud Datastore, or potentially to other target databases. This could add substantial value, especially for near real-time processing and serving of data that already lives in BigQuery.
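The core idea of pulling from BigQuery on a timer can be sketched without Beam at all: a tick fires every interval (somewhere between 30 seconds and an hour, per the description above), each tick reads rows changed since the last checkpoint, writes them to the sink, and only then advances the checkpoint. This is a minimal pure-Python sketch under stated assumptions, not the pipeline from the video: the `updated_at` column and the `Row`, `poll_once`, `upsert`, and `run` names are hypothetical, the in-memory list stands in for a BigQuery table, and the dict stands in for Cloud Datastore. In a real Beam pipeline the tick would typically come from a periodic source such as the Python SDK's `PeriodicImpulse`, though the video's exact design may differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Row:
    key: str              # hypothetical primary key, reused as the entity key in the sink
    updated_at: datetime  # hypothetical "last modified" column used for incremental reads
    value: int


def poll_once(table: List[Row], checkpoint: datetime, now: datetime) -> Tuple[List[Row], datetime]:
    """Read rows changed in the half-open window [checkpoint, now), oldest first."""
    batch = sorted((r for r in table if checkpoint <= r.updated_at < now), key=lambda r: r.updated_at)
    return batch, now


def upsert(store: Dict[str, int], batch: List[Row]) -> None:
    """Stand-in for a Datastore write: keyed upserts, so replaying a window is harmless."""
    for r in batch:
        store[r.key] = r.value


def run(table: List[Row], start: datetime, ticks: int, interval: timedelta) -> Dict[str, int]:
    store: Dict[str, int] = {}
    checkpoint = start
    for i in range(1, ticks + 1):
        now = start + i * interval          # simulated tick time
        batch, new_checkpoint = poll_once(table, checkpoint, now)
        upsert(store, batch)
        checkpoint = new_checkpoint          # advance only after the write has returned
    return store


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
table = [
    Row("a", t0 + timedelta(seconds=10), 1),
    Row("b", t0 + timedelta(seconds=30), 2),
    Row("a", t0 + timedelta(seconds=45), 3),
]
print(run(table, t0, ticks=2, interval=timedelta(seconds=30)))  # {'a': 3, 'b': 2}
```

Advancing the checkpoint after the write means a failed tick simply re-reads the same window on the next attempt; because the writes are keyed upserts, that replay does not create duplicates.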

🤔 I'm eager to hear your thoughts on this. And for the Apache Beam experts out there, I'd particularly value your insights. Are there any blind spots in this approach? Could there be reliability issues under certain conditions? Your expert critique is invaluable!

#Dataflow #BigQuery #CloudDatastore #StreamingData #DataEngineering #InnovationInTech #PracticalGCP #ApacheBeam

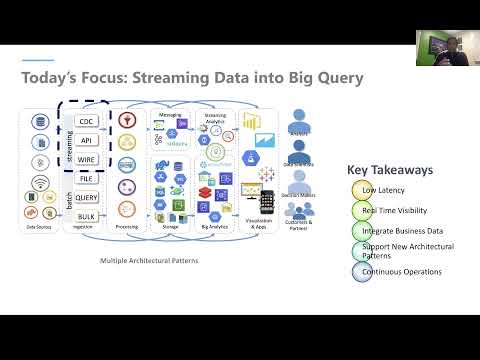

00:39 - Solutions we talked about so far

06:16 - How about a batch Dataflow job?

08:08 - How about a streaming Dataflow job?

09:32 - It's a bit complex, but there is a way

10:37 - Key design considerations

14:18 - Detailed Design - The flow

16:32 - Detailed Design - Impulse window & checkpointing

20:32 - Detailed Design - Checkpointing logic

26:18 - Code & Demo

38:47 - Pros & cons plus ideas

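Corrections: the BETWEEN filter shown in the video has a bug. BETWEEN is inclusive at both ends, so consecutive windows overlap on their shared boundary and a row stamped exactly at that boundary can be picked up twice. I've since replaced it with explicit greater-than / equal-to comparisons so that the windows no longer overlap.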
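To see why an inclusive BETWEEN produces duplicates, here is a minimal pure-Python sketch, assuming consecutive fixed-size windows over a hypothetical `updated_at` timestamp column. `between` mirrors SQL BETWEEN (inclusive at both ends), while `half_open` uses an inclusive lower bound and an exclusive upper bound, which is one common non-overlapping form; the exact comparison used in the updated code may differ. `where_clause` shows the matching predicate using hypothetical BigQuery named parameters `@start` and `@end`.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

Window = Tuple[datetime, datetime]


def between(ts: datetime, w: Window) -> bool:
    # SQL BETWEEN semantics: both bounds inclusive, so adjacent windows share an edge.
    return w[0] <= ts <= w[1]


def half_open(ts: datetime, w: Window) -> bool:
    # Lower bound inclusive, upper bound exclusive: every timestamp lands in one window.
    return w[0] <= ts < w[1]


def where_clause(column: str) -> str:
    # Hypothetical parameterised predicate equivalent to half_open().
    return f"{column} >= @start AND {column} < @end"


def windows(start: datetime, step: timedelta, n: int) -> List[Window]:
    # Consecutive windows where each end equals the next start.
    return [(start + i * step, start + (i + 1) * step) for i in range(n)]


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
ws = windows(t0, timedelta(seconds=30), 2)
boundary_row = t0 + timedelta(seconds=30)             # exactly on the shared edge
print(sum(between(boundary_row, w) for w in ws))      # 2: read twice
print(sum(half_open(boundary_row, w) for w in ws))    # 1: read once
print(where_clause("updated_at"))
```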
