filmov

tv

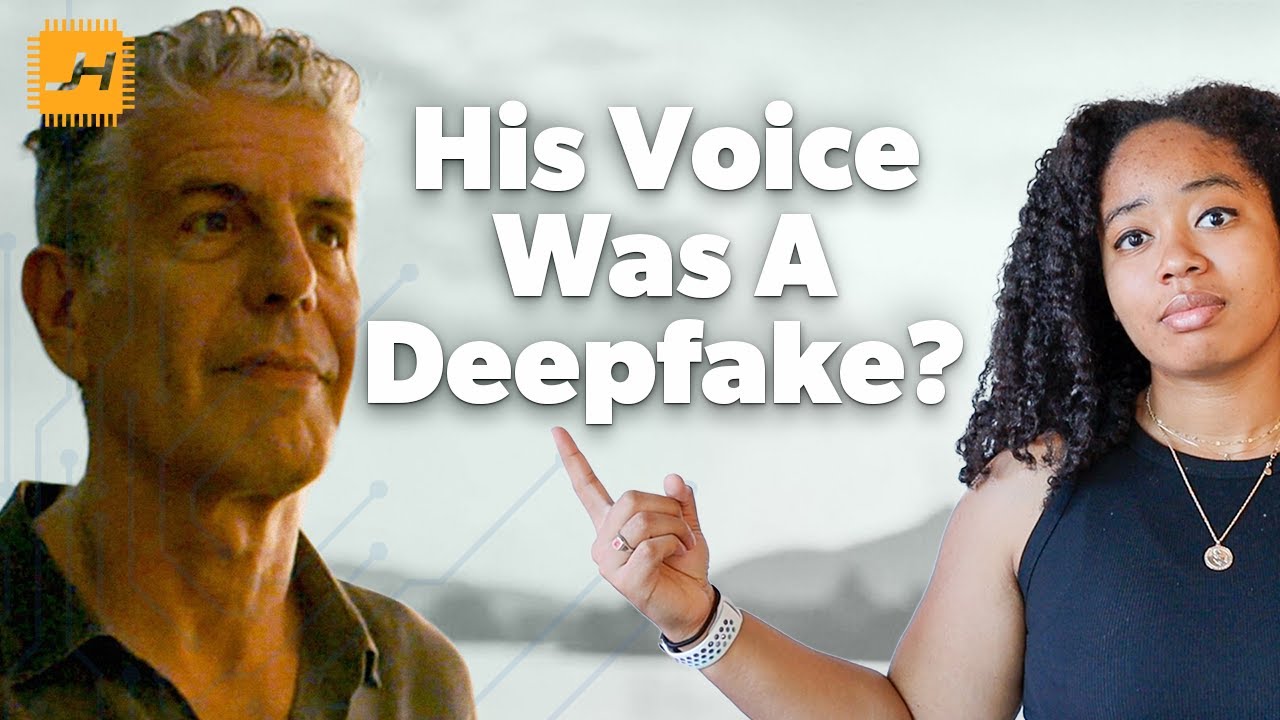

Anthony Bourdain and The Ethics of Synthetic Media

How would you feel if you found out that someone had used AI to generate your voice saying something you'd never said aloud?

Me on Other Parts of the Internet:

Sources:

Corredoira, Loreto, Ignacio Bel Mallen, and Rodrigo Cetina Presuel. 2021. The Handbook of Communication Rights, Law, and Ethics. John Wiley & Sons.

Anthony Bourdain's 'voice' from beyond the grave raises ethical questions

Anthony Bourdain Documentary ‘Roadrunner’ Sparks A Strange Ethics Debate

Filmmaker reveals Anthony Bourdain's voice recreated at times using AI in new documentary

Critics INFURIATED Over Anthony Bourdain DEEPFAKE In New Documentary

Anthony Bourdain, Leadership Lessons From the Kitchen, 2006

Anthony Bourdain documentary recreates chef’s voice with artificial intelligence

Anthony Bourdain Video Game

Roadrunner: A Film About Anthony Bourdain - Official Trailer (2021)

Anthony Bourdain - Telling Stories Through Food on “Parts Unknown” | The Daily Show

Anthony Bourdain’s Blanquette De Veau. #veal #ethics #cooking #anthonybourdain

Anthony Bourdain on food, travel and politics

Roadrunner: A Film about Anthony Bourdain - Special Q&A

Anthony Bourdain Doc Under Fire for Using AI to Mimic the Late Chef’s

Anthony Bourdain's Secrets to Success: Why Cleanliness and Punctuality Matter in the Kitchen

Anthony Bourdain about traveling and the Human Race

How to Write a Book, with Chef Dan Barber (and Anthony Bourdain...Sort of)

Anthony Bourdain talks about Palestinians

Ottavia Bourdain's Harsh Response To Roadrunner Director's Claim

Anthony Bourdain Artificial Voice Reads Emails In Documentary

Anthony Bourdain's hatred of vegans inspired me to do THIS

Anthony Bourdain’s Foie Gras 🪿 #food #anthonybourdain #liver

Donald Trump Jr., Anthony Bourdain Immortalized as 'Zombies' Outside PETA's D.C. Offi...

Bourdain and Beyond
