
Deploy AI Models to Production with NVIDIA NIM


In this video, we look at NVIDIA Inference Microservice (NIM). NIM offers pre-configured AI models optimized for NVIDIA hardware, which streamlines the transition from prototype to production. We cover its key benefits, including cost efficiency, improved latency, and scalability. Learn how to get started with NIM for both serverless and local deployments, and see live demonstrations of models such as Llama 3 and Google's PaliGemma in action. Don't miss out on this powerful tool that can transform your enterprise applications.
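To make the serverless vs. local distinction concrete, here is a minimal sketch. It assumes that both deployment modes expose an OpenAI-compatible `/v1/chat/completions` route. The hosted base URL (`https://integrate.api.nvidia.com/v1`), the local container port (`8000`), the model id `meta/llama3-8b-instruct`, and the `NVIDIA_API_KEY` environment variable are all assumptions for illustration, not details taken from the video. The helper names `build_chat_request` and `send` are hypothetical. Under these assumptions, switching between deployments changes only the URL and the auth header, while the request body stays the same.

```python
import json
import os
import urllib.request

# Assumed endpoints: NVIDIA's hosted API catalog (serverless) and a NIM
# container running locally on port 8000. Both are assumed to be
# OpenAI-compatible chat-completion routes.
ENDPOINTS = {
    "serverless": "https://integrate.api.nvidia.com/v1/chat/completions",
    "local": "http://localhost:8000/v1/chat/completions",
}


def build_chat_request(prompt, deployment="serverless",
                       model="meta/llama3-8b-instruct", api_key=None):
    """Build (but do not send) a chat-completion request for a NIM endpoint.

    Hypothetical helper: the model id and endpoints are assumptions.
    """
    if deployment not in ENDPOINTS:
        raise ValueError(f"unknown deployment: {deployment!r}")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
        "temperature": 0.2,
    }
    headers = {"Content-Type": "application/json"}
    # The hosted API is assumed to need a bearer token; a local container
    # is assumed to accept unauthenticated requests.
    if deployment == "serverless":
        key = api_key or os.environ.get("NVIDIA_API_KEY")
        if not key:
            raise ValueError("serverless deployment requires an API key")
        headers["Authorization"] = f"Bearer {key}"
    return urllib.request.Request(
        ENDPOINTS[deployment],
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request):
    """Send the request over the network and return the reply text."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request("Hello", deployment="local"))` would target a local container, and `send(build_chat_request("Hello"))` would target the hosted API. Keeping request construction separate from sending lets the same payload be inspected or reused across both deployments.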

LINKS:

#deployment #nvidia #llms

RAG Beyond Basics Course:

TIMESTAMP:

00:00 Deploying LLMs is hard!

00:30 Challenges in Productionizing AI Models

01:20 Introducing NVIDIA Inference Microservice (NIM)

02:17 Features and Benefits of NVIDIA NIM

03:33 Getting Started with NVIDIA NIM

05:25 Hands-On with NVIDIA NIM

07:15 Integrating NVIDIA NIM into Your Projects

09:50 Local Deployment of NVIDIA NIM

11:04 Advanced Features and Customization

11:39 Conclusion and Future Content

All Interesting Videos:


How to Deploy Machine Learning Models (ft. Runway)

Deploy AI Models to Production with NVIDIA NIM

Deploy ML model in 10 minutes. Explained

The Best Way to Deploy AI Models (Inference Endpoints)

How to Deploy Machine Learning Models into Production Easily

How To Deploy Machine Learning Models in Production

Deploy ML models with FastAPI, Docker, and Heroku | Tutorial

Build and Deploy a Machine Learning App in 2 Minutes

How To Switch to Tech From a Non-Tech Background- AlmaBetter Free Masterclass

How to deploy machine learning models into production

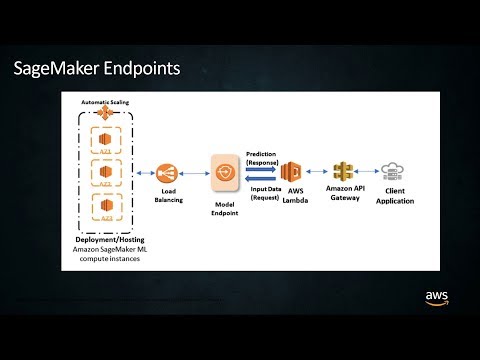

Deploy Your ML Models to Production at Scale with Amazon SageMaker

How to deploy LLMs (Large Language Models) as APIs using Hugging Face + AWS

How to Deploy Diffusion Models

Deploy ML model quickly and easily | Deploying machine learning models quickly and easily

How to Deploy Keras Models to Production

How To Deploy Perplexity AI Models to Production

How to Deploy ML Solutions with FastAPI, Docker, & AWS

Back to Basics: Deploy Your Machine Learning Model for Real-Time Predictions

How to Deploy a Tensorflow Model to Production

How to Deploy TensorFlow model to Production in 5 min

Model deployment and inferencing with Azure Machine Learning | Machine Learning Essentials

How To Deploy Machine Learning Models Using Docker And Github Action In Heroku

The EASIEST Way to Deploy AI Models from Hugging Face (No Code)

Model Trained? You're half way there, my friend! #shorts #machinelearning #deployment
