6. Regression Analysis

MIT 18.S096 Topics in Mathematics with Applications in Finance, Fall 2013

Instructor: Peter Kempthorne

This lecture introduces the mathematical and statistical foundations of regression analysis, particularly linear regression.

License: Creative Commons BY-NC-SA

Regression Analysis | Full Course

Video 1: Introduction to Simple Linear Regression

Using Excel for Regression Analysis

Regression analysis

Finding the Regression Equation/Regression Line by Hand (Formula)

How To... Perform Simple Linear Regression by Hand

Correlation Vs Regression: Difference Between them with definition & Comparison Chart

Plus Two Commerce - Statistics | Correlation Analysis, Regression Analysis |Xylem Plus Two Commerce

How to do a linear regression on excel

Discussion 6: Using Multiple Regression in Excel for Predictive Analysis

Assumptions of Linear Regression

Regression (4 of 6) - Measures of Variation

2.2.11 An Introduction to Linear Regression - Video 6: Correlation and Multicollinearity

Demand Forecasting Regression method problem

Regression (5 of 6) - F and t Tests

6. Regression Recap

How to check relatedness through Multiple Regression Analysis (Dependent and Independent)

Simple Regression Analysis | Two Variable Linear Regression Equation | Econometrics in Economics

GLM Part 6: Interaction effects: How to interpret and identify them

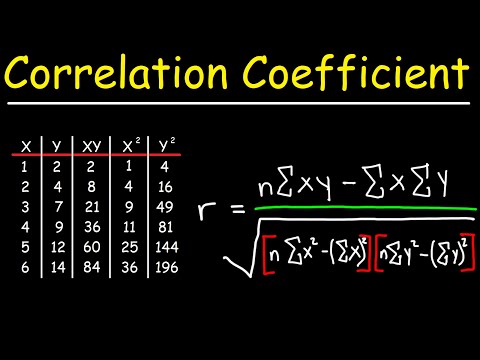

Correlation Coefficient

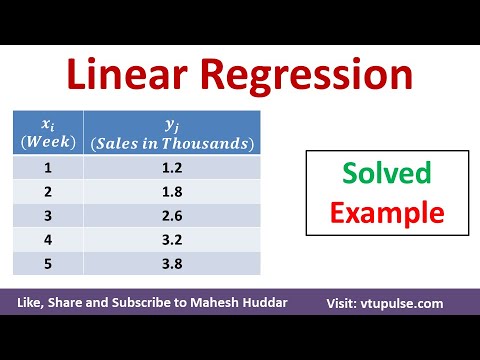

Linear Regression Algorithm – Solved Numerical Example in Machine Learning by Mahesh Huddar

Interpreting Linear Regression Output (14-6)

Multicollinearity (in Regression Analysis)
