Neural Networks using Lux.jl and Zygote.jl Autodiff in Julia

Timestamps:

00:00 Intro

01:23 Imports

02:33 Constants/Hyperparameters

03:27 Instantiating the random number generator

03:57 Generate a toy dataset of noisy sine samples

06:11 Define neural architecture

10:13 Initialize network parameters (and layer states)

11:39 Network prediction with initial parameter state

14:25 Forward function: parameters to loss mapping

16:12 Preparing the optimizer

16:53 Train loop start

17:10 Transformed forward pass

18:51 Using vjp/back function for reverse pass

21:03 Update parameters with the gradient (parameter cotangent)

21:35 Finish training loop

22:21 Run training loop & investigate loss history

23:17 Prediction with trained parameters

24:42 Summary

25:53 Outro
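
The chapters above outline a complete training workflow. The following is a minimal sketch of what such a workflow could look like, not a transcript of the video's code. The layer sizes, activation, learning rate, epoch count, noise level, plain gradient descent optimizer (from Optimisers.jl), and the helper names `loss_fn` and `train` are all assumptions chosen for illustration.

```julia
using Lux, Optimisers, Random, Zygote

# Hypothetical constants/hyperparameters (not taken from the video)
const N_SAMPLES = 200
const LEARNING_RATE = 0.1f0
const N_EPOCHS = 1_000

# Instantiate the random number generator
rng = Xoshiro(42)

# Toy dataset of noisy sine samples, shaped (features, batch) = (1, N_SAMPLES)
x = rand(rng, Float32, 1, N_SAMPLES) .* Float32(2π)
y = sin.(x) .+ 0.3f0 .* randn(rng, Float32, 1, N_SAMPLES)

# Neural architecture: a small multilayer perceptron (sizes are assumed)
model = Chain(Dense(1 => 10, sigmoid), Dense(10 => 10, sigmoid), Dense(10 => 1))

# Initialize network parameters and layer states
ps, st = Lux.setup(rng, model)

# Prediction with the initial (untrained) parameters
y_initial, _ = model(x, ps, st)

function train(model, ps, st, x, y; lr = LEARNING_RATE, n_epochs = N_EPOCHS)
    # Forward function: maps parameters (and layer states) to the loss
    function loss_fn(p, ls)
        y_pred, new_ls = model(x, p, ls)
        loss = sum(abs2, y_pred .- y) / length(y)  # mean squared error
        return loss, new_ls
    end

    # Prepare the optimizer state
    opt_state = Optimisers.setup(Optimisers.Descent(lr), ps)

    loss_history = Float32[]
    for epoch in 1:n_epochs
        # Transformed forward pass: returns the outputs and the vjp/back function
        (loss, st), back = Zygote.pullback(loss_fn, ps, st)
        # Reverse pass: cotangent 1 for the loss, nothing for the layer states
        grad, _ = back((one(loss), nothing))
        # Update parameters with the gradient (parameter cotangent)
        opt_state, ps = Optimisers.update(opt_state, ps, grad)
        push!(loss_history, loss)
    end
    return ps, st, loss_history
end

ps_trained, st_trained, loss_history = train(model, ps, st, x, y)

# Prediction with the trained parameters
y_trained, _ = model(x, ps_trained, st_trained)
```

Wrapping the loop in a function avoids reassigning global variables from inside a top-level `for` loop. Because `loss_fn` returns a tuple of the loss and the updated layer states, the cotangent passed to `back` is also a tuple, with `nothing` for the states since they do not contribute to the gradient.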

Lux.jl: Explicit Parameterization of Neural Networks in Julia | Avik Pal | JuliaCon 2022

What is (scientific) machine learning? An introduction through Julia's Lux.jl

Building Deep Learning Models in Flux.jl (4 minute tour)

Simple Chains: Fast CPU Neural Networks | Chris Elrod | JuliaCon 2022

Scaling up Training of any Flux.jl Model Made Easy | Dhairya Gandhi | JuliaCon 2022

Deep Neural Networks in Julia With Flux.jl | Talk Julia #10

What is a Pullback in Zygote.jl? | vector-Jacobian products in Julia

Learning Smoothly: Machine Learning With RobustNeuralNetworks.jl | Nicholas Barbara | JuliaCon 2023

Physics-Informed Neural Networks in Julia

Ignite.jl: A Brighter Way to Train Neural Networks | Jon Doucette | JuliaCon 2023

Implementing Neural Network in Mid Night from Scratch using JAX with Power Level quite fun 😁😁🐱🏍...

Using NeuralODEs in Real Life Applications | JuliaCon 2023

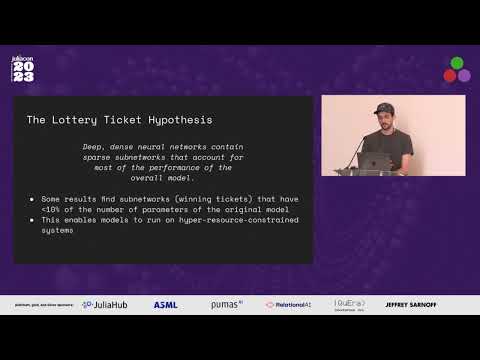

LotteryTickets.jl: Sparsify Your Flux Models | Marco Cognetta | JuliaCon 2023

`do block` Considered Harmless | Nicolau Leal Werneck | JuliaCon 2022

Neural Networks in Equinox (JAX DL framework) with Optax

Deep Reinforcement Learning | Decision Making Under Uncertainty using POMDPs.jl

2- Object Tracking in Julia(VideoIO.jl, Makie.jl, Images.jl)

Automating the composition of ML interatomic potentials in Julia | Emmanuel Lujan | JuliaCon 2023

Mixing Implicit and Explicit Deep Learning with Skip DEQs | Avik Pal | SciMLCon 2022

Physics-Informed Neural Networks in JAX (with Equinox & Optax)

[06x12] How to use your GPU for Machine Learning using CUDA.jl and Flux.jl (CUDA.jl 101 Part 3 of 3)

ChainRules.jl Meets Unitful.jl: Autodiff via Unit Analysis | Sam Buercklin | JuliaCon 2022

[82] Intro to FluxML and Machine Learning in Julia (Kyle Daruwalla)
