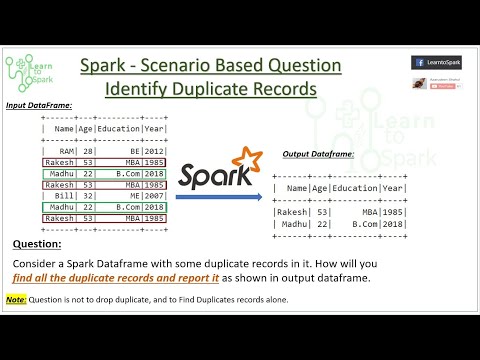

Spark Scenario Based Question | Deal with Ambiguous Column in Spark | Using PySpark | LearntoSpark

In this video, we will learn how to resolve the ambiguous column issue that occurs while reading a file in Spark.

Fb page:

Dataset:
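The video's own solution is not reproduced on this page. The following is a hedged sketch of one common approach, not necessarily the one used in the video. It assumes the ambiguity comes from column names that collide under Spark's default case-insensitive name resolution (`spark.sql.caseSensitive` defaults to `false`, so `Name` and `name` refer to the same column). The helper `make_unique_columns` and its `_1`, `_2` suffix scheme are hypothetical illustrations, not part of the PySpark API.

```python
from typing import Iterable, List


def make_unique_columns(names: Iterable[str], case_sensitive: bool = False) -> List[str]:
    """Return column names with clashing duplicates renamed.

    Hypothetical helper. With case_sensitive=False it mirrors Spark's default
    resolution, where "Name" and "name" are treated as the same column.
    """
    seen = set()
    result = []
    for name in names:
        candidate = name
        suffix = 1
        key = candidate if case_sensitive else candidate.lower()
        # Append _1, _2, ... until the name no longer clashes with one already used.
        while key in seen:
            candidate = f"{name}_{suffix}"
            suffix += 1
            key = candidate if case_sensitive else candidate.lower()
        seen.add(key)
        result.append(candidate)
    return result


if __name__ == "__main__":
    print(make_unique_columns(["id", "Name", "name", "age", "NAME"]))
    # prints ['id', 'Name', 'name_1', 'age', 'NAME_2']
```

Given a DataFrame `df` whose column names clash, the helper could be applied as `df = df.toDF(*make_unique_columns(df.columns))`. `DataFrame.toDF` renames columns by position rather than by name, so it does not need to resolve the ambiguous names first.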

question 2 : spark scenario based interview question and answer | spark architecture?

49. Databricks & Spark: Interview Question(Scenario Based) - How many spark jobs get created?

question 3 (part - 1) : spark scenario based interview question and answer | spark terminologies

10 PySpark Product Based Interview Questions

Spark Interview Question | Scenario Based Question | Multi Delimiter | LearntoSpark

Spark Scenario Based Interview Question | Missing Code

1. Merge two Dataframes using PySpark | Top 10 PySpark Scenario Based Interview Question|

pyspark scenario based interview questions and answers | #pyspark | #interview | #data

Azure Data Engineering + Azure Data Bricks Demo by Abhishek Agarwal at Raj Cloud technologies

Spark Scenario Based Question | Window - Ranking Function in Spark | Using PySpark | LearntoSpark

Spark Interview Question | Scenario Based | Data Masking Using Spark Scala | With Demo| LearntoSpark

40 Scenario based pyspark interview question | pyspark interview

Coalesce in Spark SQL | Scala | Spark Scenario based question

Spark SQL Greatest and Least Function - Apache Spark Scenario Based Questions | Using PySpark

Spark Scenario Based Question | Use Case on Drop Duplicate and Window Functions | LearntoSpark

Spark Scenario Based Question | Best Way to Find DataFrame is Empty or Not | with Demo| learntospark

Spark Scenario Based Interview Question | out of memory

Spark Scenario Based Question | ClickStream Analytics

question 1 : spark scenario based interview question and answer | spark vs hadoop mapreduce

Comparing Lists in Scala | Spark Interview Questions | Realtime scenario

day 3 | consecutive days | pyspark scenario based interview questions and answers

Spark Scenario Based Question | SET Operation Vs Joins in Spark | Using PySpark | LearntoSpark

Spark Scenario Based Question | Alternative to df.count() | Use Case For Accumulators | learntospark

Spark Scenario Based Question | Handle JSON in Apache Spark | Using PySpark | LearntoSpark