
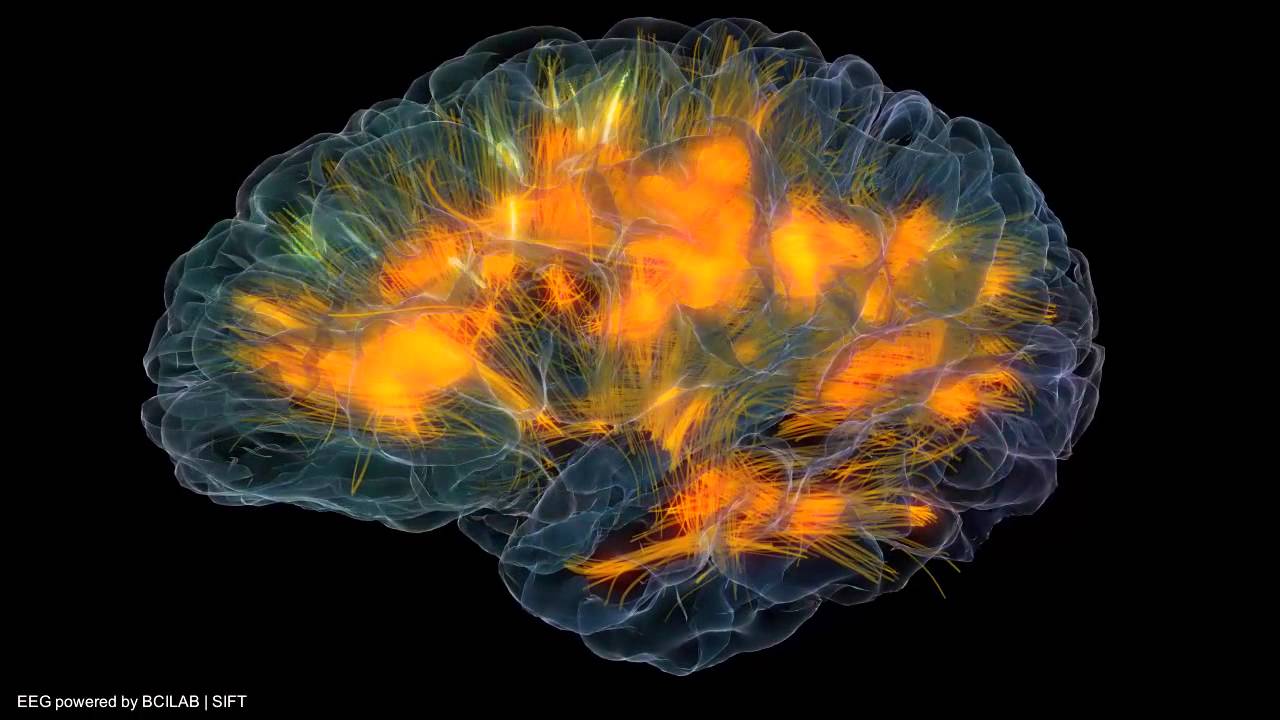

Glass brain flythrough - Gazzaleylab / SCCN / Neuroscapelab


This is an anatomically realistic 3D brain visualization depicting real-time, source-localized activity (power and "effective" connectivity) estimated from EEG (electroencephalography) signals. Each color represents source power and connectivity in a different frequency band (theta, alpha, beta, gamma), and the golden lines are white-matter anatomical fiber tracts. Estimated information transfer between brain regions is visualized as pulses of light flowing along the fiber tracts that connect those regions.
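To make "source power in a frequency band" concrete, here is a minimal sketch of computing per-band power for a single time series. It assumes the signal is one already-estimated source (source localization itself is out of scope here), and the band edges in `BANDS` are conventional values chosen for illustration, not figures taken from this project. The function name `band_powers` is hypothetical, and a naive DFT is used only so the example needs no third-party packages.

```python
import math
import cmath

# Conventional EEG band edges in Hz (an assumption for illustration;
# the description only names the bands, not their boundaries).
BANDS = {
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_powers(signal, fs):
    """Sum unscaled one-sided periodogram values within each band.

    signal: samples of one source (or channel) time series
    fs: sampling rate in Hz
    Uses a naive O(N^2) DFT so the sketch has no dependencies.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    powers = {name: 0.0 for name in BANDS}
    # A real signal only needs frequencies from 0 up to Nyquist.
    for k in range(n // 2 + 1):
        freq = k * fs / n
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        p = abs(coeff) ** 2 / n
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += p
    return powers


if __name__ == "__main__":
    fs = 128
    # Two seconds of a pure 10 Hz sine, which falls in the alpha band.
    sig = [math.sin(2 * math.pi * 10 * t / fs) for t in range(2 * fs)]
    p = band_powers(sig, fs)
    print(max(p, key=p.get))
```

In a live display like the one described, a computation of this kind would run repeatedly on short sliding windows, and each band's value could drive the intensity of that band's color.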

The final visualization is built in Unity and lets the user fly around and through the brain with a gamepad while watching live brain activity from someone wearing an EEG cap.

Team:

- Gazzaley Lab / Neuroscape Lab, UCSF: Adam Gazzaley, Roger Anguera, Rajat Jain, David Ziegler, John Fesenko, Morgan Hough

- Swartz Center for Computational Neuroscience, UCSD: Tim Mullen & Christian Kothe

- Matt Omernick, Oleg Konings
